Eli the Computer Guy discusses Alibaba’s new trillion-parameter Qwen 3 Max AI model, highlighting the challenges of running such large models due to hardware costs and questioning whether bigger parameter counts truly translate to better performance. He also reflects on the implications for open-source AI accessibility and the broader US-China AI competition, emphasizing that practical usability and cost-effectiveness will ultimately determine success.

In this video, Eli the Computer Guy discusses the recent announcement of Qwen 3 Max, a one-trillion-parameter large language model (LLM) developed by Alibaba’s AI research team in China. He reflects on the significance of parameter counts in LLMs, questions whether bigger always means better, and highlights how hard it is to compare models on that metric alone. Eli emphasizes practical considerations such as hardware requirements, cost, and real-world application performance over raw numbers and technical specs.

Eli explains that smaller models, such as those with a few billion parameters, can run on modest hardware like a Raspberry Pi or a MacBook Pro, while larger models require increasingly powerful and expensive GPUs. He expresses curiosity about the hardware needed to run a trillion-parameter model like Qwen 3 Max, noting that such requirements might make open-source deployment impractical for most users. This raises concerns about the future of open-source AI models, as the cost and complexity of running cutting-edge models could push developers toward relying on paid APIs instead.
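To put rough numbers on the hardware question, the sketch below estimates the memory needed just to hold a model's weights at different numeric precisions. It is a back-of-the-envelope calculation, not a figure from the video: the function name `weight_memory_gb` and the example model sizes are illustrative assumptions, and the estimate ignores the KV cache, activations, and runtime overhead, so real requirements would be higher.

```python
# Rough weights-only memory estimate: parameters x bytes per parameter.
# Assumption: ignores KV cache, activations, and framework overhead.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Return the approximate size of the weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


# Illustrative model sizes (hypothetical labels, not specific products).
for label, num_params in [("3B", 3e9), ("70B", 70e9), ("1T", 1e12)]:
    sizes = ", ".join(
        f"{precision}: {weight_memory_gb(num_params, precision):,.1f} GB"
        for precision in BYTES_PER_PARAM
    )
    print(f"{label:>3} parameters -> {sizes}")
```

Under these assumptions, a few-billion-parameter model quantized to 4 bits needs only a couple of gigabytes, while a trillion parameters at 16-bit precision works out to about 2,000 GB of weights alone, far beyond any single consumer GPU.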

The video also touches on the evolving nature of open-source software in the cloud era. Eli points out that while big tech companies have contributed to open-source projects, many improvements are designed for massive infrastructure setups that are inaccessible to most users. He draws a parallel to AI models, suggesting that even if models are open-source, the prohibitive hardware costs could limit their practical use, effectively making them less accessible despite being free in theory.

Eli reviews some technical features of the Qwen 3 Max model, such as its large context window and support for complex reasoning, coding, and multi-language tasks. He also discusses Alibaba Cloud’s tiered pricing for API access, noting that the cost per million tokens is surprisingly low, which could make the model attractive for enterprise and research applications. However, he warns that costs increase significantly with larger input sizes, so efficient prompt design remains important for managing expenses.
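The effect of tiered pricing on a single request can be sketched as follows. Everything numeric here is a placeholder: the tier boundaries and per-million-token prices in `HYPOTHETICAL_TIERS` are invented for illustration and are not Alibaba Cloud's actual rates. The sketch also assumes that the whole request is billed at the rate of the tier its input length falls into, which may differ from the real billing rules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    max_input_tokens: int      # upper bound of this tier (inclusive)
    input_price_per_m: float   # price per million input tokens
    output_price_per_m: float  # price per million output tokens


# Hypothetical tiers for illustration only; NOT real Alibaba Cloud pricing.
HYPOTHETICAL_TIERS = [
    Tier(32_000, 1.0, 4.0),
    Tier(128_000, 2.0, 8.0),
    Tier(256_000, 3.0, 12.0),
]


def request_cost(input_tokens: int, output_tokens: int, tiers=HYPOTHETICAL_TIERS) -> float:
    """Cost of one request, billed at the tier selected by input length (an assumption)."""
    for tier in tiers:
        if input_tokens <= tier.max_input_tokens:
            return (
                input_tokens * tier.input_price_per_m
                + output_tokens * tier.output_price_per_m
            ) / 1_000_000
    raise ValueError("input exceeds the largest tier")


# Same output length, growing prompt: cost rises faster than the token count.
for prompt_tokens in (10_000, 100_000, 200_000):
    print(f"{prompt_tokens:>7} input tokens -> {request_cost(prompt_tokens, 1_000):.3f}")
```

With these placeholder rates, a prompt ten times longer costs more than ten times as much because it lands in a pricier tier, which is the reason trimming prompts matters for managing expenses.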

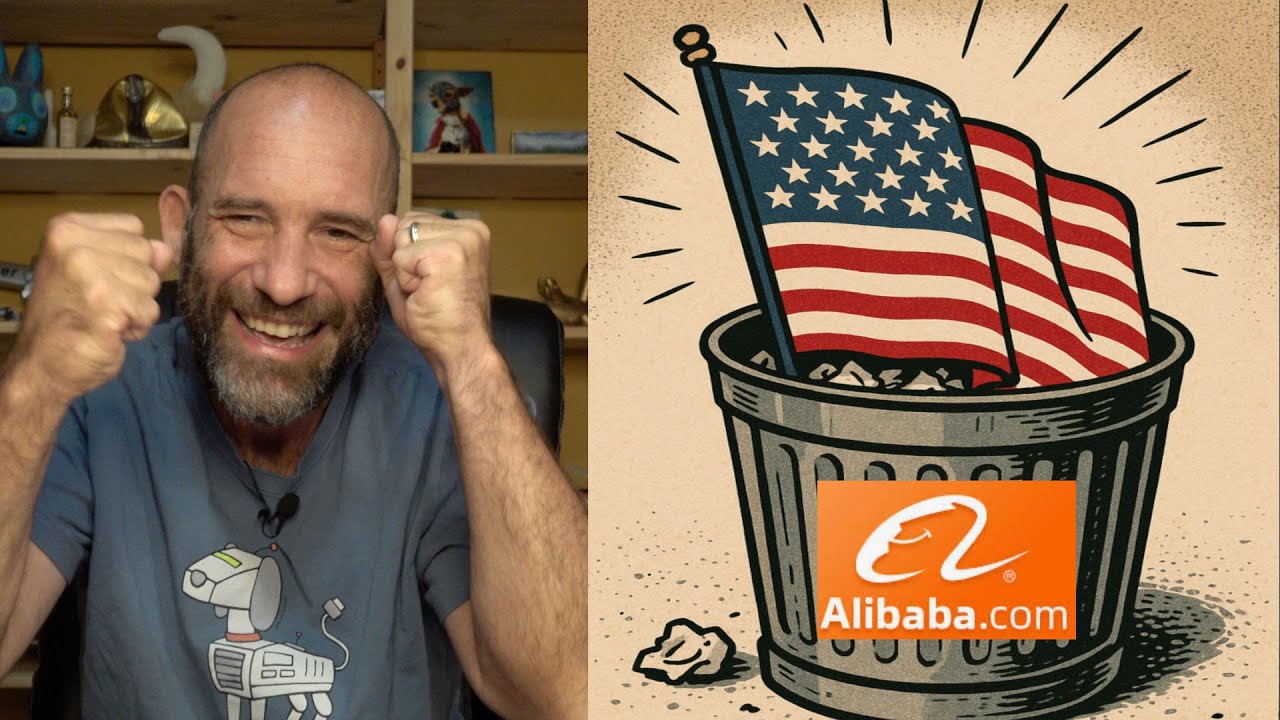

Finally, Eli reflects on the broader geopolitical context, acknowledging the ongoing AI competition between the United States and China. While the trillion-parameter Qwen model is an impressive milestone for China, he questions whether sheer size equates to winning the AI race. He suggests that the ultimate winner will be determined by who can deliver the best business use cases, balancing price, performance, and usability. Eli invites viewers to share their opinions on the development and concludes by promoting his educational platform and upcoming events.