The video discusses rapid advances in artificial intelligence, focusing on Ilya Sutskever's startup Safe Superintelligence, which has raised $1 billion and aims to achieve superintelligence through significant computing power, as well as on Elon Musk's AI initiatives. It also highlights massive investments in AI infrastructure, including data centers costing $125 billion each, while raising concerns about their environmental impact and about whether scaling AI models can achieve true artificial intelligence.

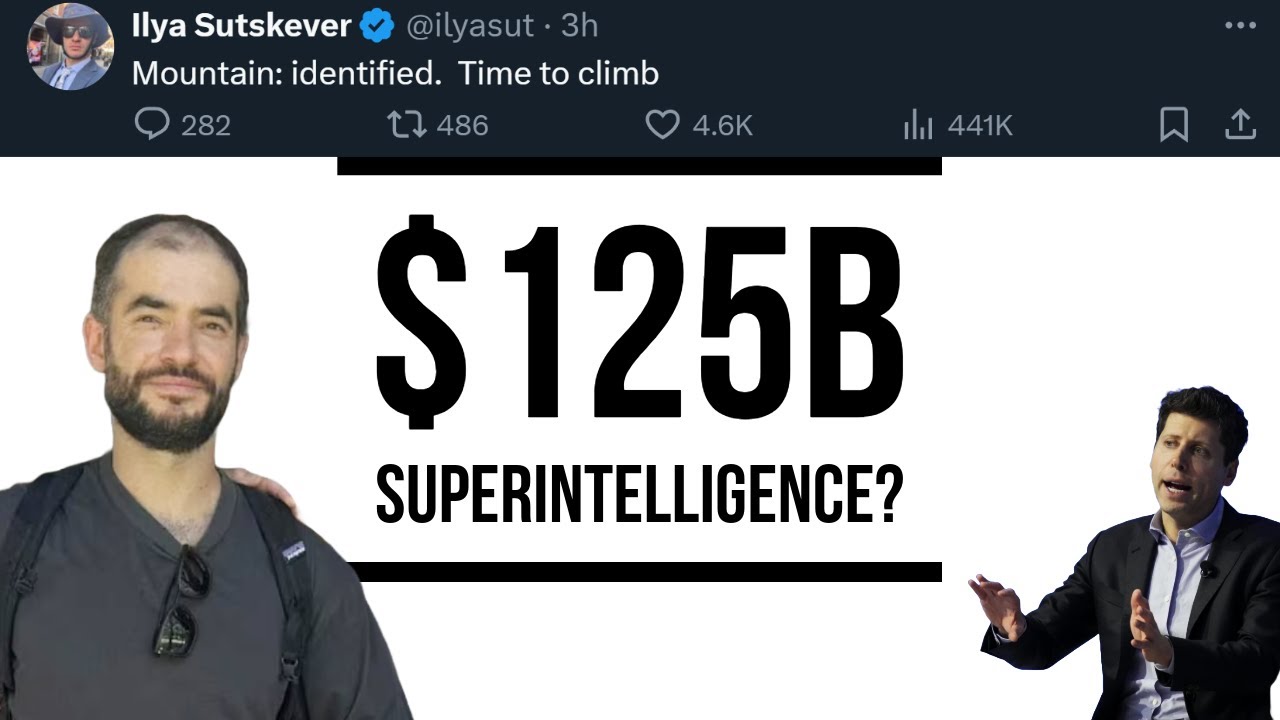

The video discusses recent developments in artificial intelligence, particularly the valuation of Ilya Sutskever's startup, Safe Superintelligence, which has been valued at $5 billion after raising $1 billion in funding. The company, only three months old, aims to achieve superintelligence by acquiring significant computing power, a theme that recurs throughout the video. Sutskever, a co-founder and former chief scientist of OpenAI, hints at a different approach to scaling AI models, emphasizing the importance of what is being scaled rather than scale alone.

In addition to Sutskever's venture, the video highlights Elon Musk's claims about his AI training cluster, which is expected to reach around 200,000 H100 GPUs, potentially making it one of the most powerful AI training systems. Musk's xAI team has produced Grok 2, a model that competes with GPT-4, and OpenAI's CEO has reportedly expressed concern that Musk's team could gain more computing power than OpenAI itself. The video questions whether raw computing power alone can produce true artificial intelligence, given that many teams with access to significant resources have struggled to build effective foundation models.

The discussion then shifts to massive investments in AI infrastructure, including plans for two new data centers costing $125 billion each. These centers are expected to consume vast amounts of power, potentially scaling up to 5 or 10 gigawatts over time. The video argues that investments on this scale reflect a belief in the scaling hypothesis, which holds that increasing the size and computing power of AI models will lead to breakthroughs in artificial intelligence. However, it also expresses skepticism about whether this approach will deliver the desired results.

The video also touches on distributed training, in which AI training is spread across multiple data centers to ease local power constraints. Companies such as Google, OpenAI, and Anthropic are reportedly planning to expand their training operations across multiple campuses. The video anticipates the upcoming releases of models such as Gemini 2 and Grok 3 and suggests that their performance will be crucial in determining whether the scaling hypothesis holds.

Finally, the video raises concerns about the environmental impact of these massive AI operations, particularly in light of major tech companies' commitments to become carbon neutral by 2030. It highlights the potential conflict between the immense power needed to train AI models and these sustainability goals. The video concludes by acknowledging the uncertainty surrounding the future of AI development: although significant resources are being devoted to it, it remains to be seen whether these efforts will lead to meaningful advances in artificial intelligence.