Eli the Computer Guy demonstrates running OpenAI’s Whisper voice recognition model locally on a Raspberry Pi 5. He shows that it can accurately transcribe simple voice commands and control hardware without relying on cloud services, though it runs more slowly than cloud APIs and sometimes struggles with complex phrases. He concludes that Whisper is a viable option for local voice recognition projects, especially for those seeking to avoid cloud dependencies, and recommends using it with the Python “speech_recognition” library.

In this episode of “Stupid Geek Tricks,” Eli the Computer Guy explores running OpenAI’s Whisper voice recognition model locally on a Raspberry Pi 5. He begins by explaining his current setup, in which voice commands are sent to Google Cloud Voice Services for processing. He then asks whether voice recognition can be handled entirely on the device itself, eliminating the need for cloud services and an internet connection.

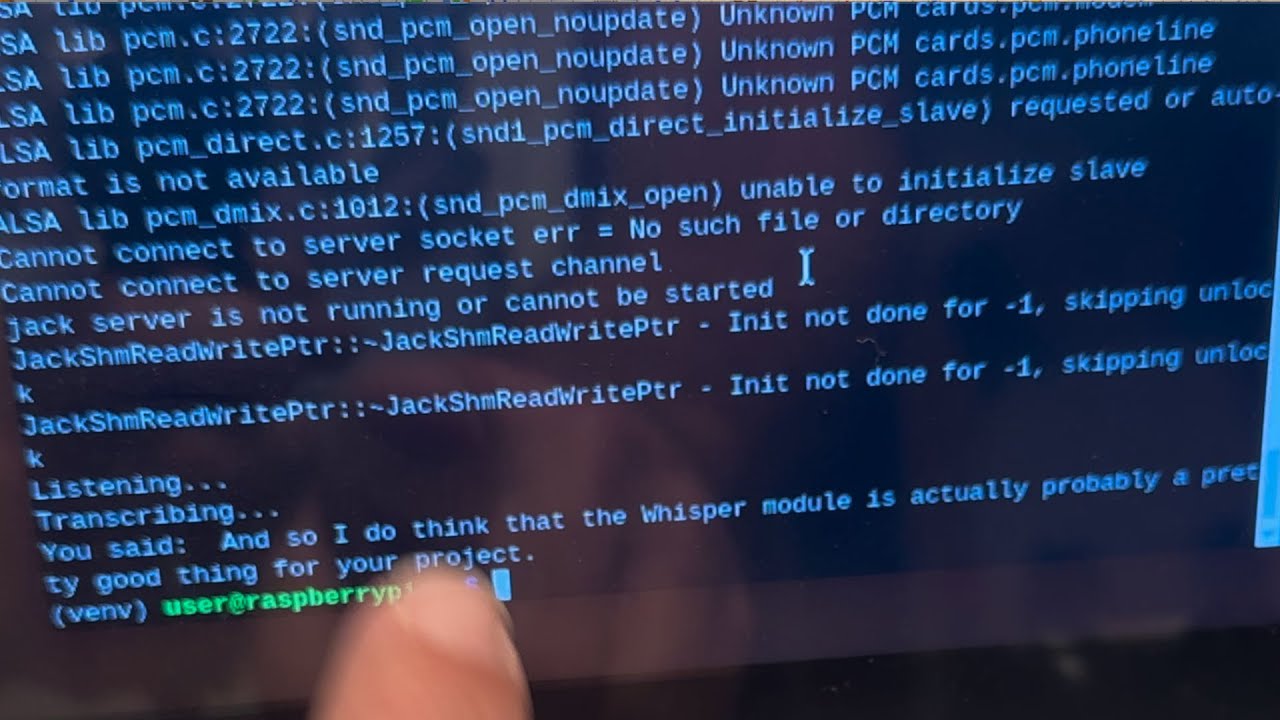

Eli demonstrates the Whisper model running directly on an 8GB Raspberry Pi 5. He shows the device transcribing spoken commands and acting on them through connected hardware, such as turning on lights or controlling LEDs. Local processing works, but it is noticeably slower than cloud-based APIs because the Raspberry Pi’s hardware is much less powerful than cloud servers.
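Once Whisper has produced a transcript, the program still has to decide which hardware action the text asks for. The video does not show Eli's code, so the following is only a minimal sketch under stated assumptions: the command phrasing ("turn on/off the light/LED"), the `devices` dictionary, and `handle_transcript` are all hypothetical, and the device state is kept in memory instead of driving real GPIO pins.

```python
from __future__ import annotations

import re

# Hypothetical device registry: True means "on". On real hardware these
# entries might instead wrap GPIO objects (for example gpiozero.LED).
devices: dict[str, bool] = {"light": False, "led": False}

# Assumed command grammar: matches phrases such as "turn on the light"
# or "Turn off LEDs" anywhere in the transcript.
COMMAND = re.compile(r"\bturn\s+(on|off)\s+(?:the\s+)?(light|led)s?\b")


def handle_transcript(text: str) -> str | None:
    """Apply the first on/off command found in a transcript.

    Returns a short description such as "light on", or None if the
    transcript contains no recognized command.
    """
    match = COMMAND.search(text.lower())
    if match is None:
        return None  # ignore speech that is not a command rather than guess
    action, device = match.groups()
    devices[device] = action == "on"
    return f"{device} {action}"
```

Matching a narrow, fixed grammar like this plays to the strength Eli observed: short, ordinary commands transcribe reliably, while anything outside the pattern is simply ignored instead of triggering the wrong device.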

During his tests, Eli tries various voice commands, including the classic tongue twister “How much wood would a woodchuck chuck if a woodchuck could chuck wood?” He observes that Whisper sometimes struggles with unusual or complex phrases, likely because language models are statistical and favor common word patterns. For more straightforward commands, however, the transcription is accurate and reliable.
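One way to put "struggles" on a numeric footing is word error rate (WER): the word-level edit distance between what was said and what was transcribed, divided by the number of words actually said. The video does not measure WER; this is a standard metric swapped in for illustration, and the sketch below is a plain-Python implementation under that assumption.

```python
from __future__ import annotations

import re


def words(text: str) -> list[str]:
    """Lowercase and split into words, dropping punctuation (keeps apostrophes)."""
    return re.findall(r"[a-z0-9']+", text.lower())


def word_error_rate(reference: str, hypothesis: str) -> float:
    """(substitutions + deletions + insertions) / number of reference words."""
    ref, hyp = words(reference), words(hypothesis)
    if not ref:
        raise ValueError("reference must contain at least one word")
    # Before processing reference word i, prev[j] holds the edit distance
    # between the first i-1 reference words and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(
                prev[j] + 1,             # deletion: reference word missing
                curr[j - 1] + 1,         # insertion: extra hypothesis word
                prev[j - 1] + (r != h),  # match (0) or substitution (1)
            )
        prev = curr
    return prev[-1] / len(ref)
```

As a made-up example (not a transcript from the video), comparing the 13-word tongue twister with the hypothesis "How much wood would a wood chuck chuck if a woodchuck could chuck wood" costs one substitution and one insertion, giving a WER of 2/13, or about 0.15.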

Eli also speculates that accuracy may improve with clearer enunciation. When he speaks directly into the camera and articulates carefully, Whisper produces better results than when he speaks casually to the device. This suggests that a speaker’s style can significantly affect the performance of local voice recognition systems.

In conclusion, Eli finds that OpenAI’s Whisper model is a viable option for local voice recognition projects, especially for those who want to avoid cloud dependencies. He recommends using the Python “openai-whisper” package in combination with the “speech_recognition” Python library. Eli is developing this project further for his Silicon Dojo class, which focuses on pushing AI to the edge, and invites viewers to learn more at silicondojo.com.
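The pairing Eli recommends might look roughly like the sketch below. It assumes the `SpeechRecognition`, `openai-whisper`, and `PyAudio` packages are installed and a microphone is attached; the `"base"` model size, the helper names, and the lazy import are illustrative choices, not details taken from the video. `Recognizer.recognize_whisper` runs the Whisper model locally, and Whisper downloads the model weights the first time a given model size is used.

```python
import string


def clean_transcript(text: str) -> str:
    """Normalize Whisper output, which is capitalized and punctuated,
    so it can be compared against simple lowercase command phrases."""
    return text.strip().strip(string.punctuation).strip().lower()


def listen_once(model: str = "base") -> str:
    """Record one utterance from the default microphone and transcribe it locally.

    Assumes: pip install SpeechRecognition openai-whisper pyaudio
    A smaller model such as "tiny" is likely faster on a Raspberry Pi,
    at some cost in accuracy.
    """
    # Imported here so clean_transcript() stays usable on machines
    # without these packages or a microphone.
    import speech_recognition as sr

    recognizer = sr.Recognizer()
    with sr.Microphone() as source:
        # Sample background noise briefly to set the energy threshold.
        recognizer.adjust_for_ambient_noise(source, duration=0.5)
        audio = recognizer.listen(source)
    # Transcription runs on the device; the audio is not sent to a cloud API.
    return clean_transcript(recognizer.recognize_whisper(audio, model=model))
```

On the Pi, calling `listen_once()` in a loop and passing each result to a command handler would give a fully offline voice-control cycle, with the slower response time Eli describes.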