The video explores the limitations of AI models, particularly focusing on the “compute optimal frontier” and the scaling laws that govern their performance, suggesting that while larger models like GPT-3 can reduce error rates, they may eventually plateau due to inherent uncertainties in natural language. It highlights the ongoing quest for a unified theory of AI, emphasizing that even with infinite resources, models may never achieve perfect accuracy due to the complexity and variability of language.

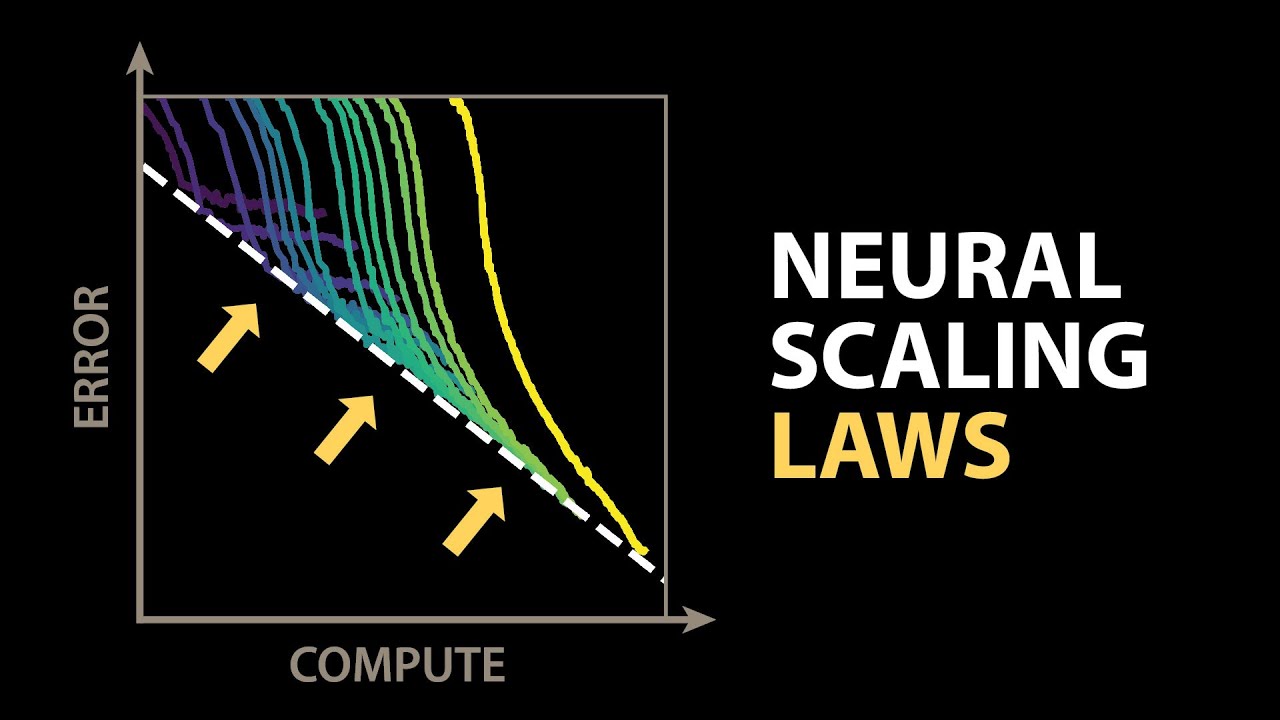

The video discusses the limits of performance scaling in AI models, focusing on the “compute optimal frontier”: the boundary, on a plot of error against compute, that no trained model has been observed to cross. As AI models are trained, their error rates decrease rapidly at first and then plateau, regardless of the model architecture or algorithm used. This trend suggests a fundamental law governing the relationship between model size, dataset size, and compute resources, akin to an ideal gas law for intelligent systems. The video asks whether these scaling laws represent a universal principle or are merely artifacts of the current neural-network-driven approach to AI.

In 2020, OpenAI published a paper demonstrating clear performance trends across a wide range of scales for language models, fitting power-law equations to the results. This led to the development of larger models, such as GPT-3, which followed the predicted scaling trends remarkably well. However, it remains an open question whether these models can keep improving indefinitely with more data and compute, or whether they will eventually reach a performance ceiling. The video highlights that while larger models achieve lower error rates, there are indications that performance may level off before reaching zero error.
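To make "fitting power-law equations" concrete, here is a minimal sketch that assumes a loss curve of the form `loss = (x_c / x) ** alpha`, where `x` could be compute, dataset size, or parameter count. The function name `fit_power_law` and the synthetic values are illustrative assumptions, not numbers from the paper. A power law is a straight line on log-log axes, so an ordinary least-squares line fit recovers the exponent.

```python
import math

def fit_power_law(xs, losses):
    """Fit loss ~ (x_c / x) ** alpha by least squares in log-log space.

    Taking logs gives log(loss) = alpha * log(x_c) - alpha * log(x),
    so the slope of the fitted line is -alpha.
    """
    log_x = [math.log(x) for x in xs]
    log_y = [math.log(y) for y in losses]
    n = len(log_x)
    mean_x = sum(log_x) / n
    mean_y = sum(log_y) / n
    sxx = sum((a - mean_x) ** 2 for a in log_x)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(log_x, log_y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    alpha = -slope
    x_c = math.exp(intercept / alpha)
    return alpha, x_c

# Synthetic, made-up data for illustration only (not measured results).
TRUE_ALPHA, TRUE_XC = 0.05, 1e8
compute = [10.0 ** k for k in range(7)]
loss = [(TRUE_XC / c) ** TRUE_ALPHA for c in compute]

alpha, x_c = fit_power_law(compute, loss)
print(f"alpha = {alpha:.4f}, x_c = {x_c:.3e}")
```

On real training runs the points are noisy and only the best run at each compute budget lies near the frontier, so a fit like this would typically be applied to that lower envelope rather than to every run.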

The video explains the mechanics of how language models like GPT-3 predict the next word in a sequence, using cross-entropy loss as a measure of performance. It emphasizes that many sequences do not have a single correct answer, leading to a fundamental uncertainty in natural language. This uncertainty, referred to as the entropy of natural language, suggests that while models can improve, they may never achieve perfect accuracy due to the inherent variability in language.
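The link between cross-entropy loss and the entropy of language can be sketched in a few lines. The vocabulary and probabilities below are hypothetical, chosen only to show the idea: when a context has two equally valid continuations, even a model that matches the true distribution exactly still has a nonzero expected loss, equal to the entropy of that distribution.

```python
import math

def cross_entropy(predicted, target):
    """Loss for one prediction: negative log of the probability given to the actual next word."""
    return -math.log(predicted[target])

def expected_loss(true_dist, predicted):
    """Average cross-entropy when the next word is drawn from true_dist."""
    return sum(p * cross_entropy(predicted, word)
               for word, p in true_dist.items() if p > 0)

# Hypothetical context with two equally plausible next words.
true_dist = {"dog": 0.5, "cat": 0.5}

ideal_model = {"dog": 0.5, "cat": 0.5}        # matches the true distribution
overconfident = {"dog": 0.99, "cat": 0.01}    # bets almost everything on one word

print(expected_loss(true_dist, ideal_model))    # ln(2), about 0.693
print(expected_loss(true_dist, overconfident))  # larger than ln(2)
```

The ideal model's loss here is the floor: no choice of predicted probabilities can push the expected loss below the entropy of the true distribution.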

Further work by OpenAI showed that scaling laws apply across a range of problems, including image and video modeling. On some of these tasks performance leveled off, but on language data it did not, which made it difficult to estimate the entropy of natural language. The video then describes how the Google DeepMind team later observed curvature in their own scaling experiments. That curvature suggests an irreducible error term related to the entropy of natural text, meaning that even infinitely large models cannot reach zero error.
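One way to picture an irreducible error term is a loss of the form `E + A / N**alpha + B / D**beta`, where `N` is the parameter count, `D` is the number of training tokens, and `E` is the floor. This parametric form is an assumption used here for illustration, and the constants are placeholders rather than fitted values from any paper.

```python
def scaling_loss(n_params, n_tokens, E=1.7, A=400.0, B=400.0, alpha=0.34, beta=0.28):
    """Hypothetical loss model: a floor E plus two power-law terms that shrink with scale.

    All default constants are illustrative placeholders, not published fits.
    """
    return E + A / n_params ** alpha + B / n_tokens ** beta

# Grow the model and the dataset together and watch the loss approach the floor E.
for exponent in (6, 9, 12, 15):
    n = 10.0 ** exponent
    print(f"N = D = 1e{exponent}: loss = {scaling_loss(n, n):.4f}")
```

On log-log axes a pure power law is a straight line, while adding a constant `E` makes the curve bend and flatten as the other terms shrink. That bending is the kind of curvature the paragraph above describes.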

The video concludes by discussing the theoretical underpinnings of neural scaling laws, linking model performance to the geometry of data manifolds in high-dimensional space. It explains how the density of training points on the manifold affects model performance, and how the intrinsic dimension of the data influences how quickly error falls as more data is added. While this theory has shown predictive power, particularly on smaller datasets, the relationship for natural language remains less clear. The video emphasizes the ongoing quest for a unified theory of AI and the potential for future advances in understanding the limits of AI performance.
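The role of intrinsic dimension can be illustrated with an idealized case: if `n` training points sat on a regular grid filling a `d`-dimensional unit cube, neighboring points would be `n ** (-1 / d)` apart. The sketch below assumes that simplified grid picture and treats the loss as proportional to a power `k` of the spacing. The helper names are hypothetical, and the default `k = 4` is an assumption borrowed from one form of the manifold argument for cross-entropy loss, not something this summary states.

```python
def grid_spacing(n_points, dim):
    """Distance between neighbors when n_points fill a unit cube of dimension dim on a regular grid."""
    return n_points ** (-1.0 / dim)

def points_to_halve_spacing(dim):
    """Factor by which the dataset must grow to halve the spacing between neighbors."""
    return 2 ** dim

def data_scaling_exponent(dim, k=4):
    """If loss ~ spacing ** k, then loss ~ n ** (-k / dim); k = 4 is an assumed value."""
    return k / dim

for dim in (2, 8, 32):
    print(dim, points_to_halve_spacing(dim), data_scaling_exponent(dim))
```

The takeaway is qualitative: the higher the intrinsic dimension, the more data is needed to pack training points closer together, and so the more slowly error is expected to fall as the dataset grows.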