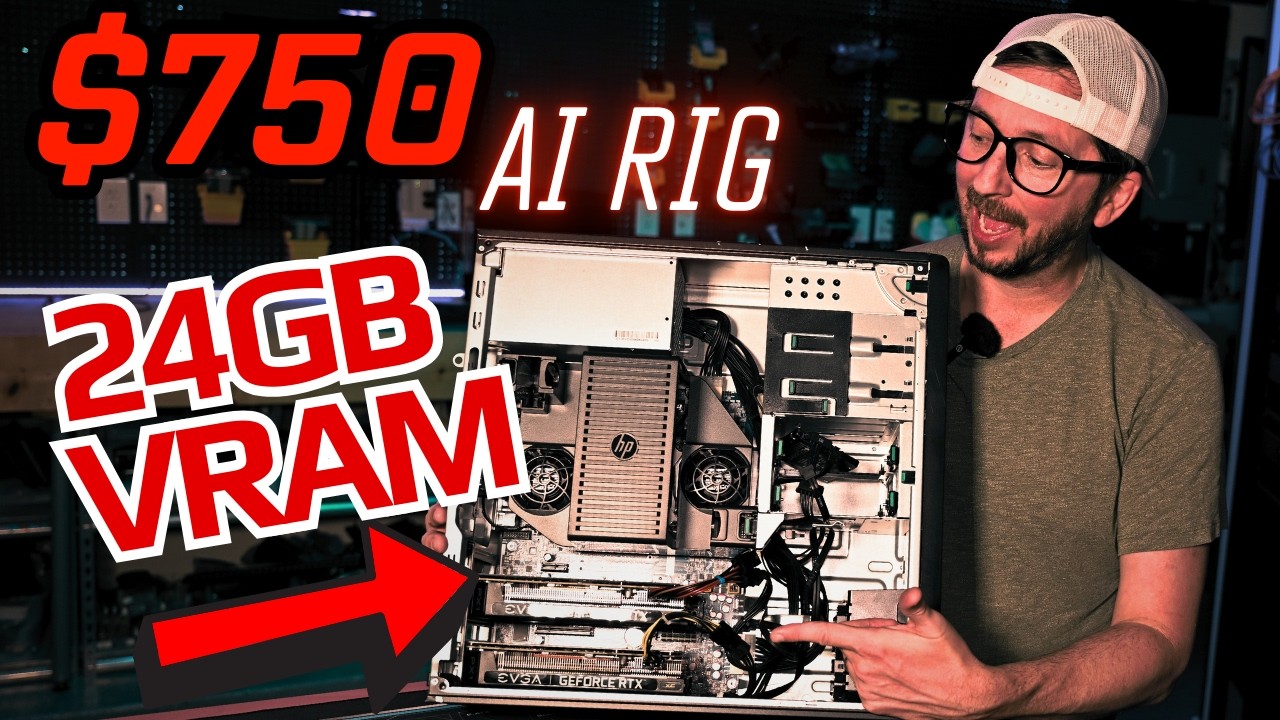

The video demonstrates how to build a budget-friendly AI home server using an HP Z440 workstation with two NVIDIA GeForce RTX 3060 GPUs, totaling 24 GB of VRAM, all for under $750. It covers the assembly process, BIOS configurations, and benchmarks various large language models to showcase the system’s performance and VRAM utilization for AI tasks.

In this video, the presenter explores an affordable way to build a dedicated AI home server with 24 GB of VRAM around an HP Z440 workstation. The entire build is budgeted at under $750 and uses two NVIDIA GeForce RTX 3060 GPUs, each with 12 GB of VRAM. The video emphasizes that VRAM determines how large a model and context window the system can run, which makes this build a cost-effective alternative to pricier setups built around multiple high-end GPUs. The presenter also discusses the system's power consumption and performance benchmarks, highlighting the value of optimizing for VRAM within a reasonable budget.
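To make the VRAM argument concrete, here is a minimal back-of-the-envelope sketch of whether a model fits in the 24 GB pool. It is not from the video: the function names, the flat 2 GB overhead allowance for the KV cache and runtime buffers, and the example model sizes are all assumptions chosen for illustration, and real usage depends on the runtime, quantization format, and context length.

```python
# Rough estimate only; all names and the overhead figure are illustrative assumptions.
TOTAL_VRAM_GB = 2 * 12  # two RTX 3060 cards with 12 GB each, as in the build


def estimate_vram_gb(params_billion: float, bits_per_weight: float,
                     overhead_gb: float = 2.0) -> float:
    """Weights plus a flat allowance for KV cache and runtime buffers (assumed)."""
    # 1e9 parameters * (bits / 8) bytes each is approximately that many decimal GB.
    weight_gb = params_billion * bits_per_weight / 8
    return weight_gb + overhead_gb


def fits(params_billion: float, bits_per_weight: float,
         total_vram_gb: float = TOTAL_VRAM_GB) -> bool:
    """True if the rough estimate is within the available VRAM."""
    return estimate_vram_gb(params_billion, bits_per_weight) <= total_vram_gb


if __name__ == "__main__":
    for size_b, bits in [(32, 4), (70, 4), (12, 8)]:
        need = estimate_vram_gb(size_b, bits)
        print(f"{size_b}B @ {bits}-bit: ~{need:.1f} GB, fits={fits(size_b, bits)}")
```

Under these assumptions a 32B model at 4 bits per weight lands well inside 24 GB, while a 70B model at the same precision does not. Larger context windows grow the overhead term, which is why the flat allowance should be treated as a placeholder.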

The HP Z440 is praised for its versatility, supporting various processors and offering eight DIMM slots for RAM expansion. The presenter notes that while the stock processor may suffice, opting for a higher core count processor could enhance inference performance. The workstation’s ample PCIe slots facilitate the installation of multiple GPUs, making it suitable for AI tasks. The video includes practical tips for setting up the system, such as using a jumper to connect USB ports and ensuring the power supply meets the requirements for the GPUs.
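The power supply check mentioned above comes down to simple arithmetic, sketched below. The video summary does not give wattages, so every number here is an assumption to be replaced with the values from your own PSU label and GPU specifications: a 700 W supply, 170 W per GPU, and 200 W for the CPU and the rest of the system.

```python
# Illustrative power budget; every wattage below is an assumed placeholder.
def power_headroom_watts(psu_watts: float, gpu_watts: float,
                         gpu_count: int, rest_of_system_watts: float) -> float:
    """Return PSU capacity left after the GPUs and the rest of the system."""
    total_draw = gpu_watts * gpu_count + rest_of_system_watts
    return psu_watts - total_draw


if __name__ == "__main__":
    headroom = power_headroom_watts(
        psu_watts=700,             # assumed PSU rating
        gpu_watts=170,             # assumed per-GPU board power
        gpu_count=2,
        rest_of_system_watts=200,  # assumed CPU, RAM, drives, fans
    )
    print(f"Estimated headroom: {headroom} W")
```

A negative result would mean the supply is undersized for the cards. A small positive margin may still be too tight, since this sketch ignores transient spikes and the limits of individual PCIe power connectors.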

As the presenter assembles the system, they provide insights into the BIOS settings necessary for optimal performance. Key configurations include enabling legacy BIOS boot options, adjusting power settings, and ensuring proper GPU detection. The video guides viewers through the installation of Proxmox, a virtualization platform, and the subsequent setup of LXC containers for managing AI workloads. The presenter emphasizes the importance of passing through the GPUs to the containers for effective utilization during benchmarking.

Once the system is operational, the presenter benchmarks several large language models (LLMs), including QwQ and Gemma 3, recording tokens per second for both prompt processing and response generation. GPU utilization and throughput vary from model to model. The presenter notes the importance of VRAM utilization and the impact of context size on performance, highlighting the need for continuous optimization.
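The two metrics recorded in these benchmarks are each a token count divided by the elapsed time for that phase. The sketch below shows the calculation. The `RunTiming` structure, its field names, and the sample numbers are hypothetical and not taken from the video; in practice the counts and durations would come from whatever inference tool is being benchmarked.

```python
from dataclasses import dataclass


@dataclass
class RunTiming:
    """Token counts and wall-clock durations for one benchmark run (hypothetical shape)."""
    prompt_tokens: int
    prompt_seconds: float
    response_tokens: int
    response_seconds: float


def tokens_per_second(tokens: int, seconds: float) -> float:
    """Throughput for one phase; rejects non-positive durations."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return tokens / seconds


def summarize(run: RunTiming) -> dict:
    """Return prompt-processing and response-generation rates for a run."""
    return {
        "prompt_tps": tokens_per_second(run.prompt_tokens, run.prompt_seconds),
        "response_tps": tokens_per_second(run.response_tokens, run.response_seconds),
    }


if __name__ == "__main__":
    # Made-up sample values, purely to exercise the functions.
    sample = RunTiming(prompt_tokens=512, prompt_seconds=2.0,
                       response_tokens=300, response_seconds=20.0)
    print(summarize(sample))
```

Keeping the two rates separate matters because prompt processing is typically much faster than token-by-token generation, so a single blended figure would hide how the system behaves as context size grows.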

In conclusion, the video showcases the HP Z440 as a capable and budget-friendly option for those looking to build an AI home server. With a total cost under $750, the system offers significant VRAM for running advanced models while maintaining low power consumption. The presenter hints at future content exploring additional capabilities of the Z440 and encourages viewers to engage with the channel for more updates. Overall, the video serves as a comprehensive guide for anyone interested in setting up a cost-effective AI workstation.