The video highlights how AI-generated security reports are flooding HackerOne, cluttering the platform with inaccurate and irrelevant submissions that undermine trust and hamper genuine security work. In response, some projects, such as curl, are rejecting AI-produced reports to reassert the importance of human oversight and improve report quality.

The video discusses a significant problem affecting HackerOne, a popular bug bounty platform where individuals submit security vulnerability reports in exchange for monetary rewards. The root cause is the incentive structure: the more reports a user submits, the higher their potential earnings. This setup has inadvertently encouraged some users to flood the platform with large volumes of reports, many of which are inaccurate or irrelevant to the projects they target.

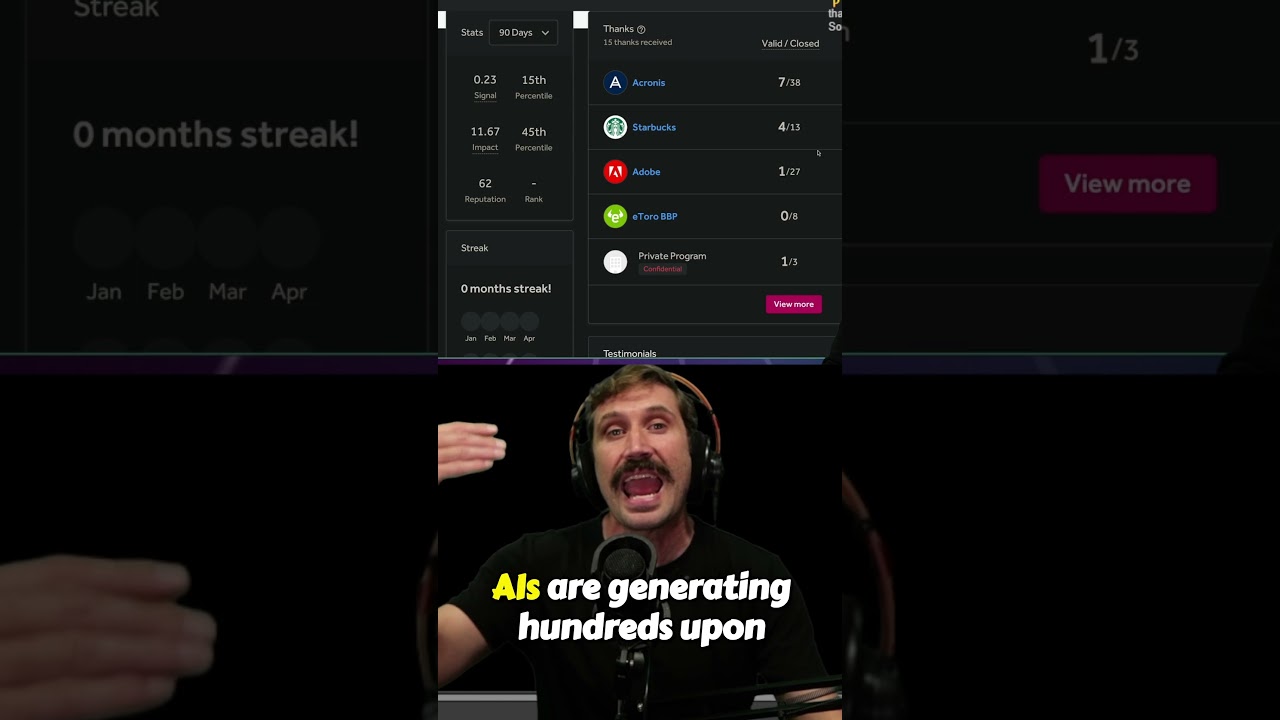

A major concern the video raises is the rise of AI-generated reports. Automated tools can produce these reports in vast quantities, often without any validation or quality checks. As a result, the platform is overwhelmed with spurious submissions, which makes it difficult to identify genuine, valuable security findings. This influx undermines the integrity of the platform and works against its primary purpose of improving security.

The problem is compounded by the fact that some of these AI-generated reports are completely inaccurate or aimed at the wrong projects. This wastes the time of the project maintainers and platform moderators who must triage each submission, and it erodes trust in submitted reports generally. The resulting noise makes it harder to distinguish legitimate security issues from false positives.

In response to this growing crisis, the creator of curl, the widely used command-line tool and library for transferring data with URLs, publicly announced on LinkedIn that the project will no longer accept bug reports generated, or even found, by AI. This move signals a firm stance against the misuse of AI in security reporting and emphasizes the importance of human oversight and verification. It also serves as a warning to other organizations about the pitfalls of relying too heavily on automated systems for critical security processes.

Overall, the video underscores the need for better safeguards and policies to keep AI from flooding security platforms with worthless reports. It highlights the ethical and practical challenges AI poses in cybersecurity and argues for more responsible use and stricter validation to preserve the quality and trustworthiness of security reports. The situation calls for a balanced approach that takes advantage of AI’s capabilities without compromising the integrity of security work.