The video explores Moonshot AI’s trillion-parameter model Kimi K2 and its innovative Muon optimizer, which improves training efficiency and stability through momentum moderation and a novel clipping mechanism, enabling smoother large-scale AI training. It also details architectural enhancements in Kimi K2 that boost cost-effectiveness and scalability, highlighting significant advancements in AI pre-training despite the model’s brief reign at the top of open-source benchmarks.

The video discusses Moonshot AI’s latest model release, Kimi K2, a trillion-parameter model trained with a novel optimization technique called Muon. Kimi K2 briefly held the top spot among open-source non-reasoning models, leading benchmarks such as EQ-Bench for creative writing and the LMArena ranking of open models. Although it was soon surpassed by Qwen’s latest model update, Kimi K2’s development and the insights gained from its training process offer valuable contributions to AI pre-training methodologies. The video also highlights a free resource sponsored by HubSpot that guides users on effectively deploying AI agents in various organizational contexts.

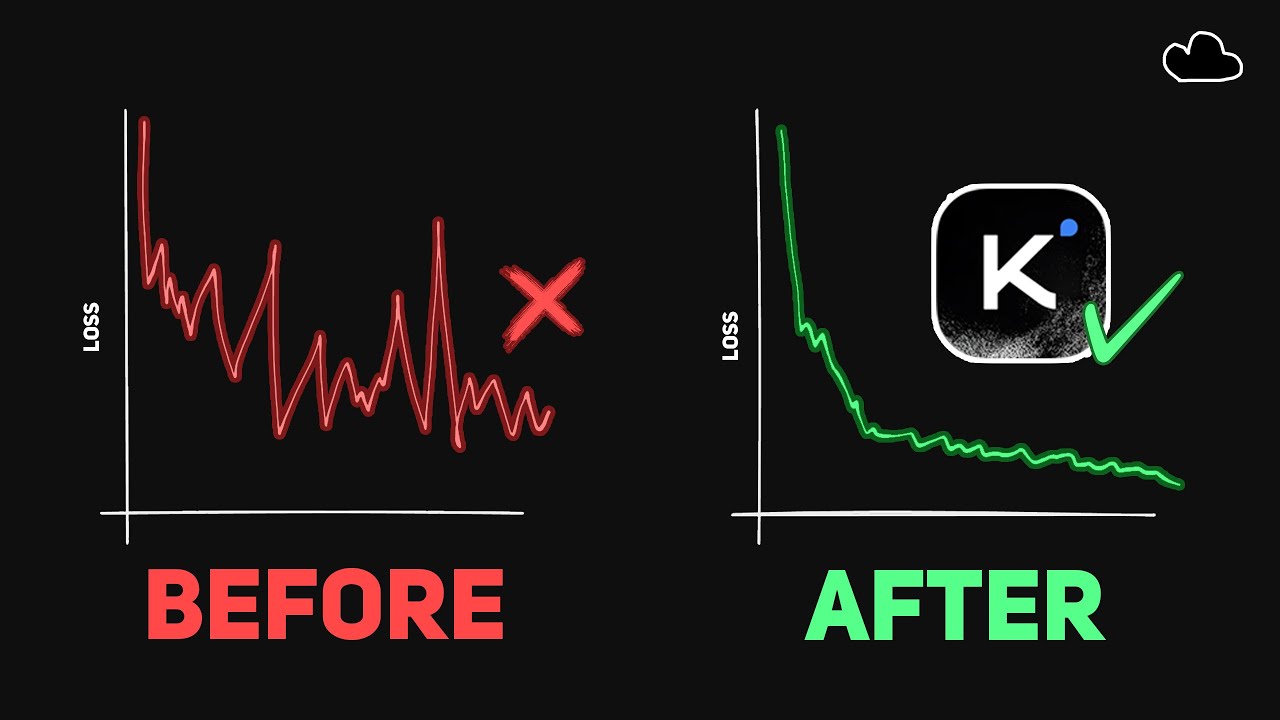

A central focus of the video is the Muon optimizer, introduced in October 2024, which challenges AdamW, the long-standing default optimizer for AI training. The video explains optimization through the analogy of descending a landscape in Minecraft: AdamW adjusts step size and direction based on slope but can overshoot due to momentum, causing inefficiencies and spiky loss curves. Muon, in contrast, moderates momentum by pausing to assess the landscape before each step, resulting in a smoother and more accurate descent. According to the video, Muon adds a computational overhead of about 0.5% per step yet reduces total training time by up to 35%, making it a promising advancement in training efficiency.
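The analogy leaves out how Muon "assesses the landscape". In public descriptions of the optimizer, the momentum matrix is orthogonalized with a Newton-Schulz iteration before it is applied as an update. The sketch below is a dependency-free illustration under stated assumptions, not a production implementation: it uses the classic cubic Newton-Schulz iteration rather than the tuned polynomial found in published implementations, and `orthogonalize`, `muon_like_step`, and the default `lr` and `beta` values are hypothetical names and numbers chosen for the example.

```python
import math


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a):
    return [list(col) for col in zip(*a)]


def orthogonalize(m, steps=15, eps=1e-7):
    """Approximate U V^T from the SVD m = U S V^T (cubic Newton-Schulz)."""
    # Scale by the Frobenius norm so every singular value is at most 1,
    # which keeps the iteration inside its convergence region.
    norm = math.sqrt(sum(v * v for row in m for v in row)) + eps
    x = [[v / norm for v in row] for row in m]
    for _ in range(steps):
        # X <- 1.5 X - 0.5 (X X^T) X pushes each nonzero singular value toward 1.
        xxtx = matmul(matmul(x, transpose(x)), x)
        x = [[1.5 * p - 0.5 * q for p, q in zip(xr, yr)] for xr, yr in zip(x, xxtx)]
    return x


def muon_like_step(weights, grad, momentum, lr=0.02, beta=0.95):
    """One illustrative update: accumulate momentum, orthogonalize it, apply it."""
    # Hypothetical defaults; lr and beta are not taken from the video.
    momentum = [[beta * mv + gv for mv, gv in zip(mr, gr)]
                for mr, gr in zip(momentum, grad)]
    update = orthogonalize(momentum)
    weights = [[w - lr * u for w, u in zip(wr, ur)]
               for wr, ur in zip(weights, update)]
    return weights, momentum
```

Because the update is orthogonalized, its singular values all sit close to 1, so no single direction dominates the step however large the raw gradient is. That is one way to read the "pause before each step" in the analogy.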

However, scaling Muon to the trillion-parameter scale of Kimi K2 introduced challenges. Early in training, oversized query or key vectors caused runaway updates that Muon could not dampen effectively, leading to unstable training and potential failure. To address this, Moonshot AI introduced “MuonClip,” a thresholding mechanism that clips extreme values before Muon processes momentum, stabilizing training and making the large-scale run succeed. This innovation was critical in achieving a smooth, spike-free training loss curve over a run that the video says cost $20 million, underscoring the importance of getting optimization right in large-scale AI projects.
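The summary does not spell out how the threshold is applied. One way to bound oversized query/key products, sketched here as an assumption rather than as Moonshot AI's actual code, is to rescale the query and key projection weights whenever the largest attention logit exceeds a threshold. The names `max_attention_logit` and `clip_qk` and the default `tau=100.0` are hypothetical.

```python
import math


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def max_attention_logit(x, w_q, w_k):
    """Largest scaled dot product q_i . k_j over all token pairs in x."""
    q = matmul(x, w_q)
    k = matmul(x, w_k)
    scale = 1.0 / math.sqrt(len(q[0]))
    return max(scale * sum(a * b for a, b in zip(qi, kj)) for qi in q for kj in k)


def clip_qk(w_q, w_k, observed_max, tau=100.0):
    """Rescale both projections so the largest logit is at most tau (assumed rule)."""
    if observed_max <= tau:
        return w_q, w_k  # nothing to clip
    # Logits are bilinear in (w_q, w_k): scaling each matrix by sqrt(gamma)
    # scales every logit by gamma = tau / observed_max.
    root = math.sqrt(tau / observed_max)
    return ([[root * v for v in row] for row in w_q],
            [[root * v for v in row] for row in w_k])
```

Splitting the correction evenly between the two matrices keeps queries and keys on a similar scale. A rule that shrank only one of them would cap the logits equally well, so the even split is a design choice of this sketch.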

The video also covers architectural refinements in Kimi K2 compared to the DeepSeek V3 model design. Moonshot AI increased the number of experts per layer by 50% while keeping the same number of active parameters per token, relying on a new sparsity scaling law under which adding experts does not degrade loss. They reduced attention heads from 128 to 64, cutting parameter count with minimal performance loss, and simplified routing by eliminating expert grouping, allowing tokens to be routed across all experts cluster-wide. These changes improved cost efficiency and training stability at the trillion-parameter scale, which, according to the video, requires 384 GPUs working simultaneously.
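To make the routing change concrete, the sketch below contrasts group-limited selection with flat top-k selection over every expert. It is an illustration under assumptions, not either model's actual router: the function names, the scores, and the rule of ranking a group by its single best expert are all hypothetical.

```python
def route_flat(scores, k):
    """Pick the k highest-scoring experts from the whole pool."""
    ranked = sorted(range(len(scores)), key=lambda e: scores[e], reverse=True)
    return sorted(ranked[:k])


def route_grouped(scores, k, group_size, top_groups):
    """Keep only the best `top_groups` groups, then pick k experts inside them."""
    n_groups = len(scores) // group_size

    # Simplification: rank each group by its single best expert score.
    def group_best(g):
        return max(scores[g * group_size:(g + 1) * group_size])

    kept = sorted(range(n_groups), key=group_best, reverse=True)[:top_groups]
    allowed = [e for g in kept for e in range(g * group_size, (g + 1) * group_size)]
    ranked = sorted(allowed, key=lambda e: scores[e], reverse=True)
    return sorted(ranked[:k])


scores = [0.9, 0.1, 0.8, 0.2, 0.3, 0.4, 0.05, 0.6]  # made-up router scores
print(route_flat(scores, k=2))                                  # [0, 2]
print(route_grouped(scores, k=2, group_size=2, top_groups=1))   # [0, 1]
```

With grouping, the token is forced onto expert 1 (score 0.1) because its group was selected, even though expert 2 (score 0.8) is a far better match. Flat routing removes that constraint, at the price of letting a token's experts sit anywhere in the cluster.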

In conclusion, the video provides an in-depth look at Moonshot AI’s technical breakthroughs with Kimi K2 and the Muon optimizer, highlighting their potential to reshape AI pre-training efficiency and scalability. Despite being briefly dethroned by newer models, Kimi K2’s development journey offers valuable lessons in optimizer design, model architecture, and large-scale training logistics. The video encourages viewers to explore further research updates through the creator’s newsletter and acknowledges the support of the AI research community and patrons. Overall, it celebrates the cutting-edge progress in open-source AI development and the ongoing quest for more efficient and powerful models.