The video showcases a video maker and editor app that uses voice descriptions to generate and customize realistic images, which are then combined with voice-driven narratives to create and edit multi-scene videos. Despite its rudimentary interface and current limitations, the app enables users to produce and export extended videos efficiently, highlighting its potential for creative storytelling.

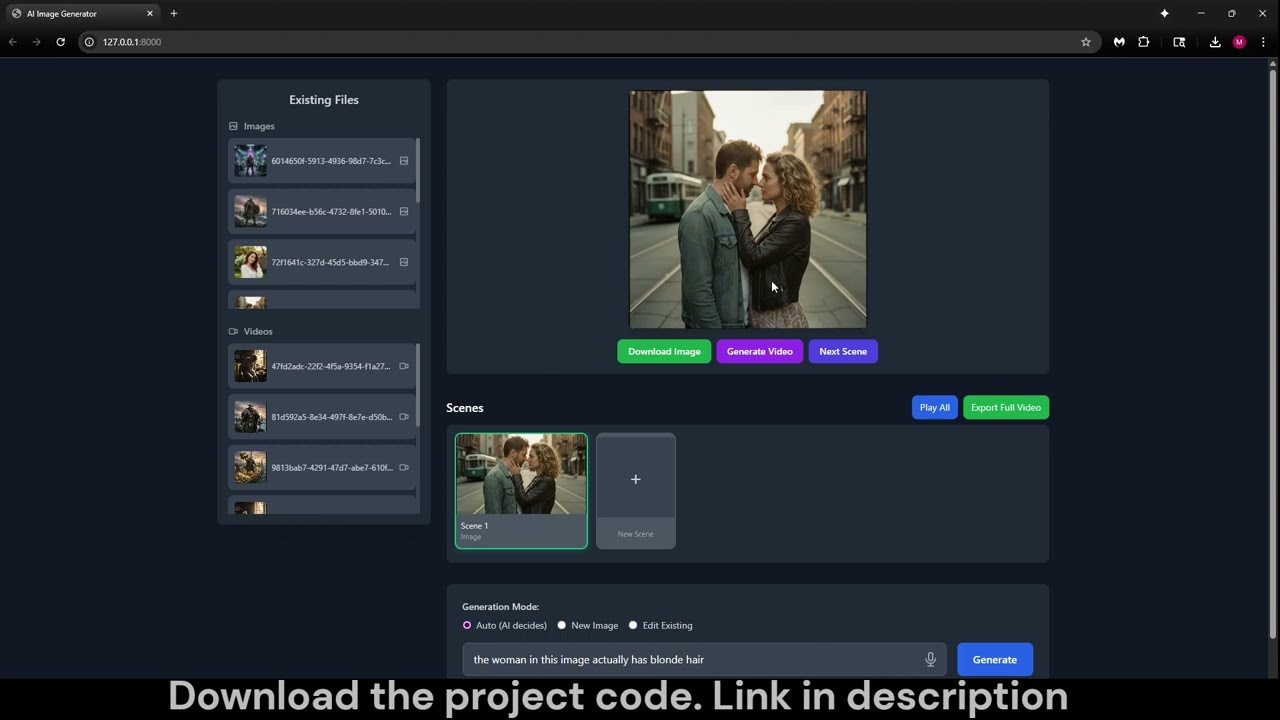

The video demonstrates a video maker and editor application built with Nano Banana for image generation and Veo 3 Fast for video generation. The process begins with the user describing an image by voice, such as “A man and a woman are in the middle of the street looking at each other,” from which the app generates a realistic 35mm close-up image. The user can then edit the image, for example by changing the woman’s hair color to blonde, and the system updates it accordingly. This editing step allows for customization before moving on to video generation.

Next, the user creates a video by describing the scene’s action, for example, “The man and the woman separate from each other and walk off the scene.” The app generates the video from this input. The interface is still rudimentary at this stage, with only minimal animations and waiting indicators shown while generation runs. The user can trim the video to adjust timing and improve flow, and the app supports combining multiple scenes into longer videos, which strengthens its storytelling capabilities.
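The trimming step can be pictured as a start and end time stored alongside each generated clip. The sketch below is illustrative only: the video does not show the app's internals, so the `Scene` class, its fields, the `trim` helper, and the 8-second clip length are all assumptions.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Scene:
    """Hypothetical model: one generated clip plus the user's trim window."""
    prompt: str                       # action description used to generate the clip
    duration: float                   # length of the generated clip, in seconds
    trim_start: float = 0.0           # seconds cut from the beginning
    trim_end: Optional[float] = None  # None means "play to the end of the clip"

    def window(self) -> Tuple[float, float]:
        """Return the (start, end) times that will actually be played."""
        end = self.duration if self.trim_end is None else self.trim_end
        return self.trim_start, end

    def trimmed_duration(self) -> float:
        start, end = self.window()
        return end - start


def trim(scene: Scene, start: float, end: float) -> Scene:
    """Return a copy of the scene with the trim window clamped to the clip bounds."""
    start = max(0.0, min(start, scene.duration))
    end = max(0.0, min(end, scene.duration))
    if end <= start:
        raise ValueError("trim window must have positive length")
    return Scene(scene.prompt, scene.duration, start, end)


# Assumed 8-second clip, trimmed to the span between 1.5 s and 6.0 s.
street = Scene("The man and the woman walk off the scene", duration=8.0)
print(trim(street, 1.5, 6.0).trimmed_duration())
```

Returning a new `Scene` rather than mutating the original keeps the untrimmed clip available, so a trim can be undone without regenerating the video.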

The user adds a second scene by generating a new image of the woman in her apartment eating a sandwich and providing a voice line for her: “Oh my god, I was so hungry. I couldn’t wait for this.” The video is generated with this dialogue, and the user trims it for better pacing. The app uses browser voice APIs to read the text aloud, which is imperfect but serves the purpose. The presenter also mentions generation costs: about 4 cents per image and around $1.20 for a full video sequence.

A third scene features the man in a messy apartment, looking sad and slouching on the couch. The user describes the scene and provides a voice line, “I already miss her so much. I can’t go on without her,” which the app uses to generate the video. The presenter notes that the app is a FastAPI-based project that can be downloaded and run easily. While the UI is not fully polished, the app lets users create videos by editing images and adding voice-driven narratives.

Finally, the user demonstrates playing the full movie by combining all scenes and exporting it as a single video file. The exported video appears in the app’s sequence list and can be downloaded to the user’s device. The app supports unlimited scene additions, enabling users to create extended videos. The presenter acknowledges the app’s current limitations but expresses enthusiasm for further improvements and invites feedback from viewers, emphasizing the project’s potential for creative video making.
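The video does not say how the export joins the scenes. One common approach, shown here purely as an assumption, is to write a file list for ffmpeg's concat demuxer, using its `file`, `inpoint`, and `outpoint` directives to carry each scene's trim window. The function name, the clip tuple layout, and the file names are hypothetical.

```python
from typing import List, Optional, Tuple

# Hypothetical clip record: (path, trim_start, trim_end) in seconds.
# A trim_end of None means "play to the end of the clip".
Clip = Tuple[str, float, Optional[float]]


def build_concat_list(clips: List[Clip]) -> str:
    """Build the text of an ffmpeg concat-demuxer list for the given clips, in order."""
    if not clips:
        raise ValueError("at least one clip is required")
    lines = []
    for path, start, end in clips:
        # Single quotes inside a quoted path must be written as '\''.
        escaped = path.replace("'", "'\\''")
        lines.append(f"file '{escaped}'")
        if start > 0:
            lines.append(f"inpoint {start:.3f}")
        if end is not None:
            lines.append(f"outpoint {end:.3f}")
    return "\n".join(lines) + "\n"


# Three scenes in playback order; only the first one is trimmed.
listing = build_concat_list([
    ("scene1.mp4", 1.5, 6.0),
    ("scene2.mp4", 0.0, None),
    ("scene3.mp4", 0.0, None),
])
print(listing)
```

Saved as `scenes.txt`, such a list could be passed to `ffmpeg -f concat -safe 0 -i scenes.txt -c copy movie.mp4`. With stream copy the cut points generally snap to keyframes, so re-encoding would be needed for frame-accurate trims.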