The paper “Beyond A*: Better Planning with Transformers via Search Dynamics Bootstrapping” trains Transformers to solve planning tasks by imitating the execution of a classical planning algorithm, A*. The resulting models generate optimal plans using fewer search steps than A* itself, which suggests that language models can become both more accurate and more efficient on tasks that require strategic thinking and foresight.

The paper frames this as teaching a language model how to think through a planning problem and plan ahead before committing to an answer. Its examples are primarily game applications, such as maze navigation and the puzzle game Sokoban, where planning ahead is crucial to success. The goal throughout is to improve both the efficiency and the optimality of the plans a Transformer produces.

The method, called search dynamics bootstrapping, starts from the A* planning algorithm. The Transformer is first trained to reproduce the algorithm’s execution trace, the step-by-step record of the search, followed by the final plan. Once it can imitate the search, it is trained further to reach optimal plans with fewer search steps than A* itself needs. The approach aims to bridge the gap between the power of large language models and the simplicity of classical planning algorithms.
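
To make “execution trace” concrete, here is a minimal sketch of A* on a small grid that logs every node it adds to the frontier (`create`) and every node it expands (`close`). The function name `astar_with_trace`, the tuple layout of the trace, and the grid encoding are assumptions made for illustration; they are only loosely modeled on the idea described above and are not the paper’s exact token format.

```python
import heapq

def astar_with_trace(grid, start, goal):
    """Run A* on a 4-connected grid and log each search step.

    grid: list of equal-length strings, '#' marks a wall.
    start, goal: (row, col) tuples.
    Returns (trace, plan); plan is None if the goal is unreachable.
    Each trace entry is (operation, cell, cost_so_far, heuristic).
    """
    def h(cell):
        # Manhattan distance: admissible and consistent for unit-cost moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    trace = [("create", start, 0, h(start))]
    frontier = [(h(start), 0, start)]  # heap entries are (f, g, cell)
    best_g = {start: 0}
    parent = {start: None}
    closed = set()

    while frontier:
        _, g, cell = heapq.heappop(frontier)
        if cell in closed:
            continue  # stale heap entry for an already expanded cell
        closed.add(cell)
        trace.append(("close", cell, g, h(cell)))
        if cell == goal:
            # Walk parent pointers back to the start to recover the plan.
            plan = []
            while cell is not None:
                plan.append(cell)
                cell = parent[cell]
            return trace, plan[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if not (0 <= nr < len(grid) and 0 <= nc < len(grid[0])):
                continue
            if grid[nr][nc] == "#":
                continue
            new_g = g + 1
            if new_g < best_g.get(nxt, float("inf")):
                best_g[nxt] = new_g
                parent[nxt] = cell
                heapq.heappush(frontier, (new_g + h(nxt), new_g, nxt))
                trace.append(("create", nxt, new_g, h(nxt)))
    return trace, None
```

The length of `trace` is the number of search steps. A model trained on sequences like this sees not only the final plan but also how the search arrived at it.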

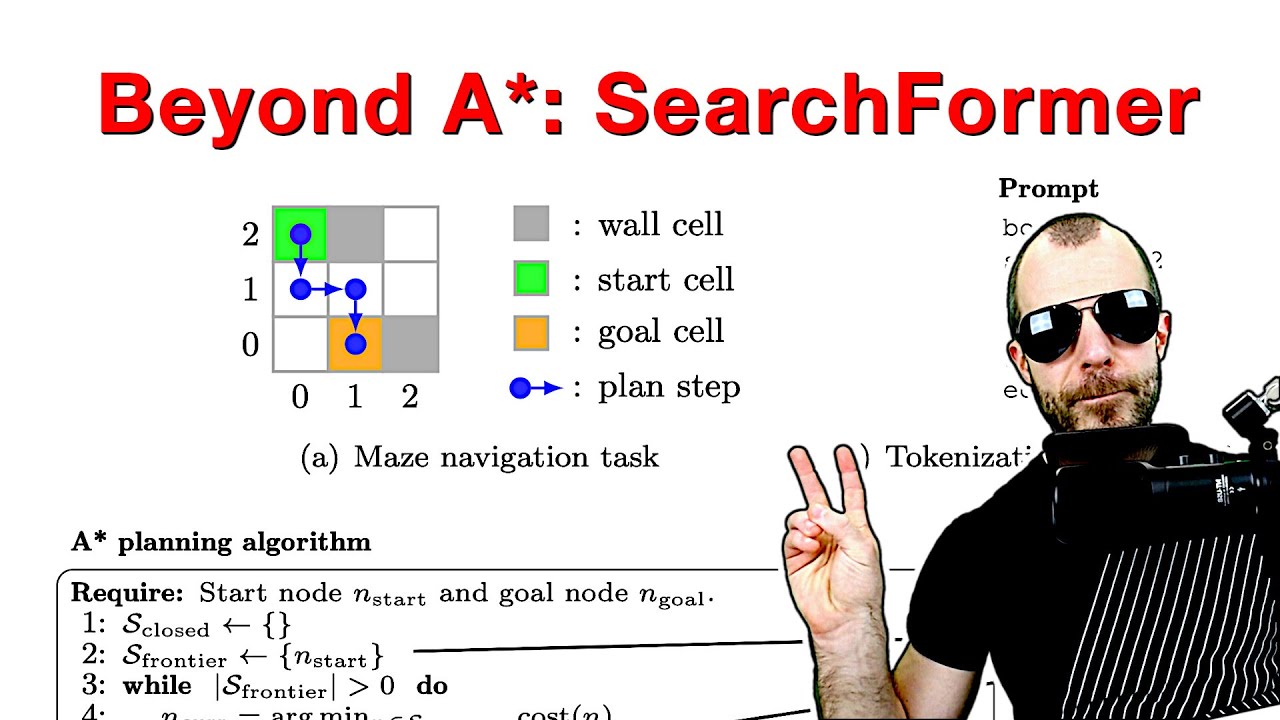

Planning means finding a path from a start point to a goal while respecting the obstacles and constraints of the environment. Language models often struggle with planning and reasoning tasks, but the researchers show that a Transformer that is explicitly taught how to approach a planning problem can learn to generate optimal plans efficiently. The key is giving the model a structured way to work through the problem rather than asking it for the answer directly.
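
For a language model to work on such a task, the start, goal, and obstacles have to be written out as a token sequence. The sketch below shows one way this could be done for a grid world; the function name `encode_task` and the `start`/`goal`/`wall` vocabulary are hypothetical choices for illustration, not a format confirmed by the text above.

```python
def encode_task(grid, start, goal):
    """Serialize a grid planning task into a flat list of string tokens.

    grid: list of equal-length strings, '#' marks a wall (assumed encoding).
    start, goal: (row, col) tuples.
    """
    tokens = ["start", str(start[0]), str(start[1]),
              "goal", str(goal[0]), str(goal[1])]
    for r, row in enumerate(grid):
        for c, ch in enumerate(row):
            if ch == "#":
                # One "wall r c" triple per blocked cell.
                tokens += ["wall", str(r), str(c)]
    return tokens
```

A sequence like this would serve as the prompt, and the model’s job is to continue it with a plan.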

The researchers train Transformers on complex planning tasks and compare two kinds of models: solution-only models, which are trained to output just the plan, and search-augmented models, which are trained to output an execution trace followed by the plan. The search-augmented models outperform the solution-only models. To shorten the traces, the researchers introduce variance in the execution traces, so that the same task can be solved with traces of different lengths, and then train the model to produce the shorter traces that still end in an optimal plan. This improves planning efficiency and also increases the likelihood of generating an optimal solution.
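
The selection step behind this idea can be sketched as follows: sample several candidate (trace, plan) pairs per task, discard those whose plan is missing or not optimal, and keep the one with the shortest trace as a new training example. The names `pick_shortest_optimal`, `build_bootstrap_set`, and `sample_fn` are hypothetical, `sample_fn` stands in for whatever generates candidates (for example a trained model), and the sketch assumes that every returned plan has already been checked for feasibility, so that comparing its length against the known optimum is enough.

```python
def pick_shortest_optimal(candidates, optimal_len):
    """Return the (trace, plan) pair with the shortest trace among optimal plans.

    candidates: list of (trace, plan) pairs; plan is None when sampling failed.
    optimal_len: length of an optimal plan for this task (assumed known).
    Returns None if no candidate has an optimal plan.
    """
    valid = [(trace, plan) for trace, plan in candidates
             if plan is not None and len(plan) == optimal_len]
    if not valid:
        return None
    return min(valid, key=lambda pair: len(pair[0]))

def build_bootstrap_set(tasks, sample_fn, n_samples=8):
    """Collect one shortened training example per task, where possible.

    tasks: list of (task, optimal_len) pairs.
    sample_fn: callable mapping a task to a (trace, plan) pair (hypothetical).
    """
    dataset = []
    for task, optimal_len in tasks:
        candidates = [sample_fn(task) for _ in range(n_samples)]
        best = pick_shortest_optimal(candidates, optimal_len)
        if best is not None:
            dataset.append((task, best[0], best[1]))
    return dataset
```

Retraining on the resulting dataset and repeating the procedure is what would push the model toward shorter searches, since only traces that are both short and lead to optimal plans are ever kept.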

Overall, the paper shows that teaching a language model how to approach a planning problem yields better plans with shorter execution traces. Training Transformers to mimic the execution of a classical planning algorithm, and then to improve on it, leads to better planning outcomes than training on solutions alone. The work demonstrates that planning strategies can be integrated into language model training, paving the way for stronger planning capabilities in Transformers.