Professor Alex Orbia discusses the potential of biologically inspired AI, advocating for a computational framework that mimics the human brain’s energy efficiency and generative capabilities through concepts like embodied cognition and “mortal computation.” He emphasizes the importance of understanding biological systems in order to build AI that learns and adapts as humans do, while also addressing ethical considerations and the limitations of traditional machine learning methods.

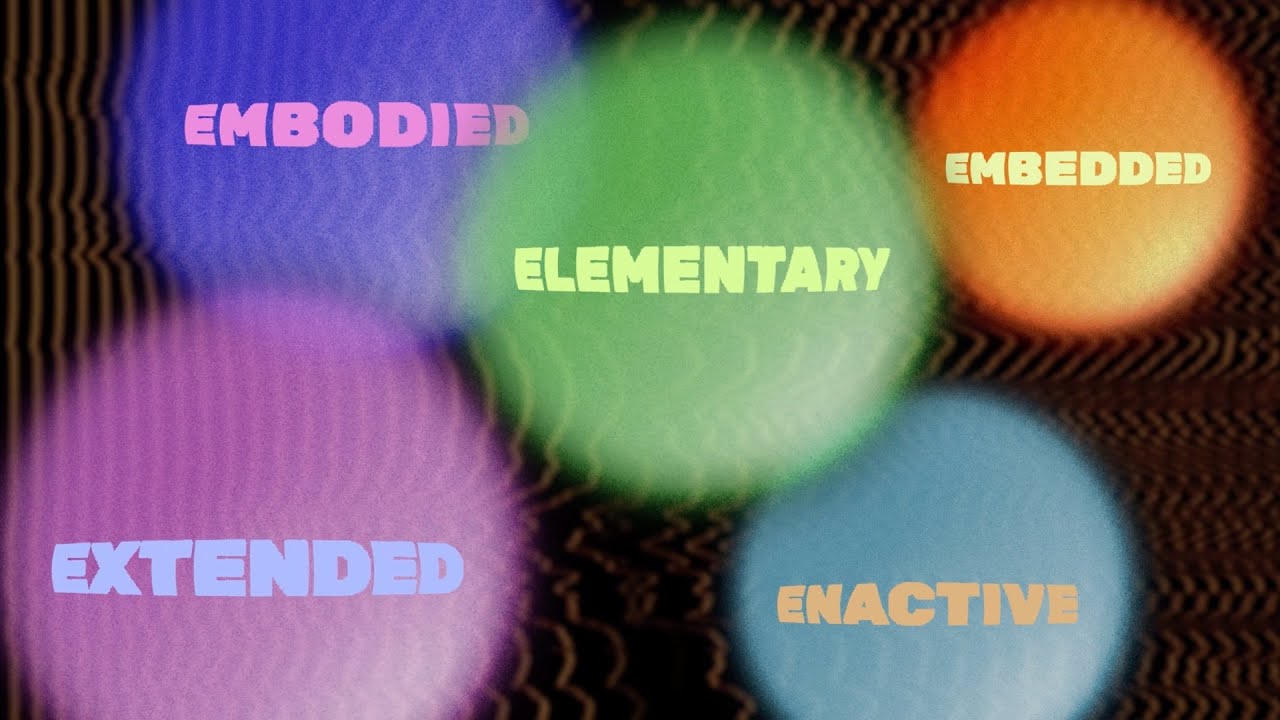

In the quest to develop artificial intelligence (AI), researchers are increasingly looking to biological systems for inspiration, particularly the human brain. Professor Alex Orbia discusses the potential of biologically inspired AI, emphasizing the need for a computational framework that mimics the brain’s energy efficiency and generative capabilities. While traditional AI models, such as generative pre-trained transformers (GPT), consume vast amounts of energy, the human brain operates on roughly 20 watts. Orbia advocates for an embodied cognition approach, in which cognition is also understood as enactive, extended, and embedded, to create AI that thinks more like humans.

Orbia introduces the concept of “mortal computation,” the idea that the software and hardware of an AI system are intertwined and cannot be separated, so that what the system has learned does not outlive the physical substrate it runs on. This perspective challenges the traditional view of computation, in which software can be executed independently of the hardware. Mortal computation emphasizes energy efficiency and the need for AI systems to maintain their identity and organization over time. The discussion also touches on the implications of machines that can think and feel like humans, raising questions about coexistence and ethical considerations.

The conversation delves into the challenges of creating bio-inspired intelligence, particularly the difficulty of working with neuromorphic chips, a technology that is still in its infancy. Orbia highlights the importance of understanding how biological systems operate and how their principles can be translated into computational frameworks. He discusses the role of biomimetic intelligence in building systems that adapt to and learn from their environments, drawing parallels to natural organisms.

Orbia also explores “mortal inference, learning, and selection,” the processes by which such a system optimizes variational free energy. He outlines three primary timescales for this optimization. Inference is the fastest and covers the immediate adjustments the system makes. Learning is the slower process of encoding experiences. Structural selection is the slowest timescale and concerns how the system’s structure itself changes over time. This framework aims to provide a more comprehensive understanding of how AI can evolve and adapt in a manner similar to biological entities.
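
For orientation, variational free energy is conventionally written as below. The notation is an assumption of this sketch and does not come from the discussion: $o$ for observations, $s$ for hidden states, $q(s)$ for the system’s approximate beliefs, $\theta$ for parameters, and $m$ for the model structure.

$$
F(q, \theta, m) \;=\; \mathbb{E}_{q(s)}\!\left[\ln q(s) - \ln p(o, s \mid \theta, m)\right]
\;=\; D_{\mathrm{KL}}\!\left[q(s)\,\|\,p(s \mid o, \theta, m)\right] \;-\; \ln p(o \mid \theta, m)
$$

Read against this expression, the three timescales can be seen as minimizing the same quantity with respect to different arguments: inference adjusts $q$ (fast), learning adjusts $\theta$ (slower), and structural selection adjusts $m$ (slowest). Because the KL term is non-negative, $F$ is an upper bound on the surprise $-\ln p(o \mid \theta, m)$.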

Finally, the discussion touches on the limitations of traditional backpropagation methods in machine learning and the potential of alternative approaches, such as predictive coding and forward-only learning. Orbia emphasizes the need for AI systems to learn in a way that reflects biological processes, including the ability to generate and synthesize data. He advocates for the development of tools and frameworks that allow researchers to explore these concepts further, ultimately aiming to create AI systems that are more aligned with human cognition and capable of operating efficiently in real-world environments.
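
To make the contrast with backpropagation concrete, here is a minimal sketch of a predictive-coding-style learner, assuming a single linear generative layer with Gaussian errors. It is not taken from the discussion: the function names, the toy data, and the column normalization used to keep the weights bounded are all illustrative choices. The point it shows is that the weight update uses only the local prediction error and the local activity, with no error signal propagated backward through a network.

```python
import numpy as np


def make_data(n_samples=200, n_obs=5, n_latent=2, noise=0.05, seed=0):
    """Toy data (assumption): observations mixed linearly from hidden causes."""
    rng = np.random.default_rng(seed)
    mixing = rng.standard_normal((n_obs, n_latent))
    causes = rng.standard_normal((n_samples, n_latent))
    return causes @ mixing.T + noise * rng.standard_normal((n_samples, n_obs))


def init_weights(n_obs, n_latent, seed=1):
    """Random generative weights with unit-norm columns."""
    rng = np.random.default_rng(seed)
    W = rng.standard_normal((n_obs, n_latent))
    return W / np.linalg.norm(W, axis=0, keepdims=True)


def infer(x, W, steps=50, lr=0.1):
    """Fast timescale: relax the latent state z to reduce prediction error."""
    z = np.zeros(W.shape[1])
    for _ in range(steps):
        err = x - W @ z            # prediction error at the observed layer
        z += lr * (W.T @ err - z)  # descend E = 0.5*|err|^2 + 0.5*|z|^2
    return z


def mean_energy(X, W):
    """Average energy over a dataset once inference has settled."""
    total = 0.0
    for x in X:
        z = infer(x, W)
        err = x - W @ z
        total += 0.5 * (err @ err) + 0.5 * (z @ z)
    return total / len(X)


def train(X, W, epochs=10, lr=0.01):
    """Slow timescale: update weights from local error and activity only."""
    W = W.copy()
    for _ in range(epochs):
        for x in X:
            z = infer(x, W)
            err = x - W @ z
            W += lr * np.outer(err, z)                     # Hebbian-like rule
            W /= np.linalg.norm(W, axis=0, keepdims=True)  # keep columns bounded
    return W


if __name__ == "__main__":
    X = make_data()
    W0 = init_weights(X.shape[1], 2)
    W1 = train(X, W0)
    print("energy before:", mean_energy(X, W0))
    print("energy after: ", mean_energy(X, W1))
```

The inner loop in `infer` and the outer loop in `train` run on different timescales: the latent state settles many times for every small change to the weights. Under the Gaussian assumptions made here, the energy being reduced is a simplified stand-in for variational free energy, and a deeper model would repeat the same local error computation at each layer.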