OpenAI has introduced a five-level classification system for measuring progress towards Artificial General Intelligence (AGI). The company also showcased research with its GPT-4 model that demonstrates skills approaching human-like reasoning. Separately, OpenAI's collaboration with Los Alamos National Laboratory aims to evaluate the risks of advancing AI. It reflects a White House executive order that calls for assessing the safety and security of advanced AI and emphasizes trustworthy AI development.

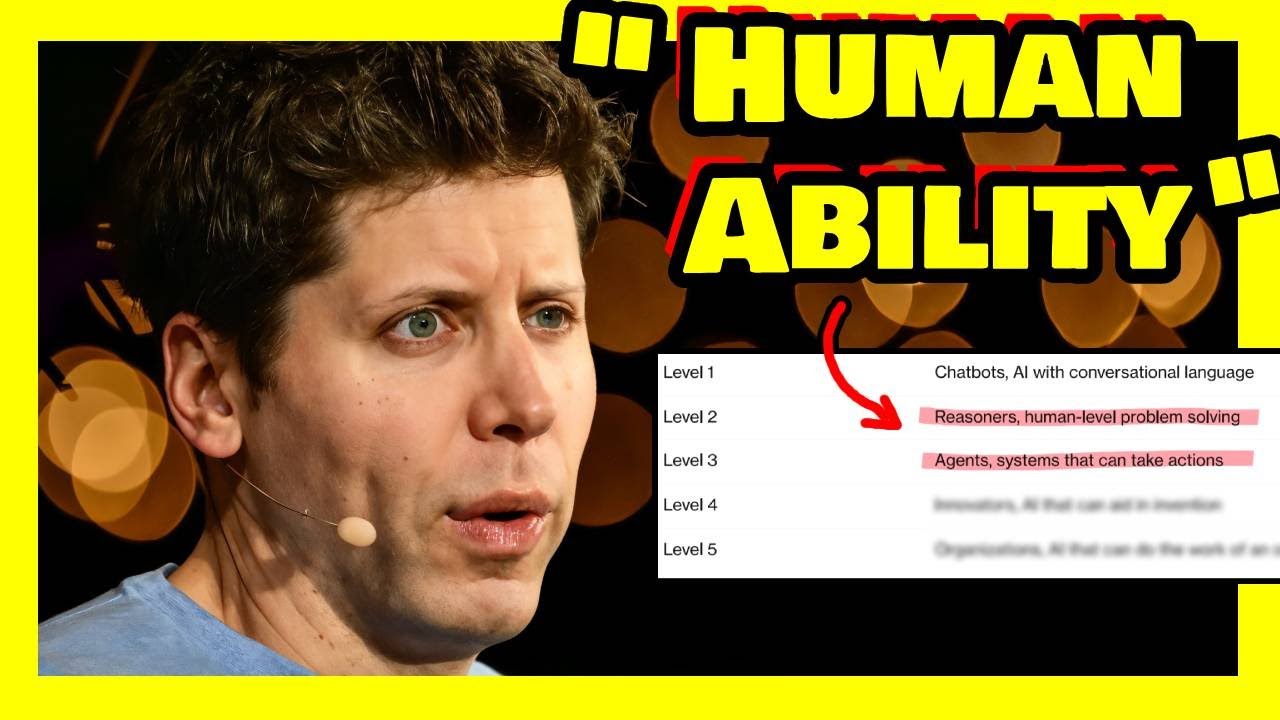

In a recent announcement, OpenAI revealed a new classification system for measuring progress towards Artificial General Intelligence (AGI). The system has five levels. The first is basic chatbot AI, and the second covers systems capable of human-like reasoning and problem-solving. OpenAI believes it is currently at the chatbot level but close to reaching the second level, which it calls “reasoners.” During an all-hands meeting, the company showcased a research project involving its GPT-4 model that demonstrated skills approaching human-like reasoning.

Google’s DeepMind has its own classification system with six levels of AI, ranging from “No AI” to “Superhuman,” and it rates narrow and general AI separately. Narrow AI systems handle specific tasks such as predicting protein structures or playing chess, and they already surpass human capabilities. General AI, which can apply its skills across many different tasks, is still at an early stage. OpenAI’s new tiered system similarly aims to provide a framework for understanding and contextualizing its own AI advancements.

OpenAI’s collaboration with Los Alamos National Laboratory to improve frontier model safety evaluations highlights the importance of assessing the risks that accompany AI advances. The partnership aims to evaluate the capabilities and potential risks of AI systems like GPT-4 in biological research settings. The move responds to a White House executive order emphasizing the safe, secure, and trustworthy development and use of AI.

A former OpenAI employee, Leopold Aschenbrenner, has raised concerns about the possible nationalization of OpenAI and the implications of developing superintelligent AI systems. He argued that superintelligence development is a matter of national security, which raises questions about whether such advanced AI technologies can be controlled and safeguarded. As OpenAI moves towards human-like reasoning and problem-solving, the debate around AI ethics and national security is intensifying.

The evolving landscape of AI development, including partnerships with institutions such as Los Alamos National Laboratory, underscores both the challenges and the opportunities in artificial intelligence. As OpenAI advances towards higher levels of capability, the implications for society, security, and ethics become more pronounced. The intersection of AI technology, national security, and ethics warrants ongoing scrutiny and discussion.