The video explains the “Byte Latent Transformer,” a new architecture that segments text into dynamically sized patches instead of fixed-vocabulary tokens and that shows better scaling properties and performance than traditional tokenized models. It highlights the model’s two-tier system, in which a local encoder groups bytes into patches and a latent transformer processes those patches. This design avoids out-of-vocabulary problems and improves performance on tasks requiring fine-grained character analysis.

The video discusses the paper “Byte Latent Transformer: Patches Scale Better Than Tokens,” which introduces a new architecture called the Byte Latent Transformer. This model moves away from traditional fixed vocabulary tokenization methods and instead utilizes a dynamic approach to segment text into pieces referred to as “patches.” The authors argue that this patch-based method demonstrates superior scaling properties compared to classic tokenized models, particularly when evaluated against models that use byte pair encoding, such as those in the Llama series.

The central claim of the paper is that models operating on patches can achieve better performance for the same number of training FLOPs. The video explains that the architecture consists of a two-tier system: an inner layer that functions like a standard transformer model but operates on patches, and an outer layer that converts bytes into patches and patches back into bytes. Because patches are on average larger than traditional tokens, the inner layer runs fewer times over the same text, which contributes to improved scaling behavior. The authors present data showing that patch-based models outperform their tokenized counterparts in terms of bits per byte, a tokenizer-independent measure analogous to perplexity.
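
As a rough illustration of the bits-per-byte metric, the sketch below converts a summed negative log-likelihood (in nats, as most training frameworks report it) into bits per byte of UTF-8 text. The function name and arguments are illustrative assumptions, not taken from the paper's code.

```python
import math


def bits_per_byte(total_nll_nats: float, text: str) -> float:
    """Convert a summed negative log-likelihood (nats) into bits per byte.

    Dividing by the number of UTF-8 bytes, rather than the number of
    tokens or patches, makes the score comparable across models that
    segment the same text differently.
    """
    num_bytes = len(text.encode("utf-8"))
    if num_bytes == 0:
        raise ValueError("text must contain at least one byte")
    # Divide by ln(2) to convert nats to bits, then normalize by byte count.
    return total_nll_nats / (math.log(2) * num_bytes)
```

Because the denominator is the byte count, a tokenized model and a patch-based model scored on the same text can be compared directly, which per-token perplexity does not allow.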

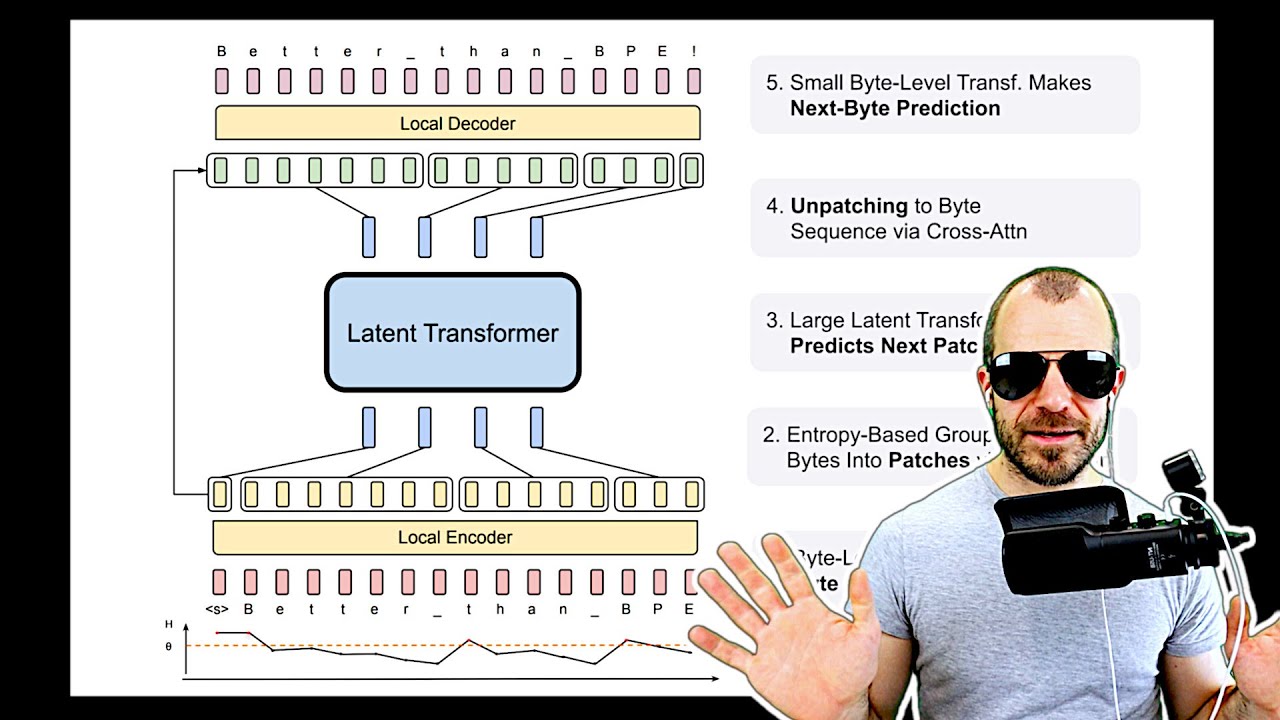

The video delves into the mechanics of how the Byte Latent Transformer operates. It describes a two-stage process in which the first stage is a local encoder that generates patch embeddings from individual bytes. The second stage is the latent transformer, which processes these embeddings. The video highlights the importance of determining patch boundaries, which is achieved through an entropy-based grouping method. This method uses a smaller transformer model to predict the next byte and starts a new patch wherever the entropy of that prediction is high, that is, wherever the next byte is hard to predict.
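
The entropy-based grouping described above can be sketched as follows. This is a minimal illustration under stated assumptions: the paper uses a small byte-level transformer as the predictor, whereas `toy_next_byte_probs` here is a hypothetical hand-written stand-in that is simply uncertain after a space and confident elsewhere. The function names and the threshold value are likewise illustrative.

```python
import math
from typing import Callable, List, Sequence


def shannon_entropy_bits(probs: Sequence[float]) -> float:
    """Shannon entropy, in bits, of a probability distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0.0)


def toy_next_byte_probs(prefix: bytes) -> List[float]:
    """Hypothetical stand-in for the small byte-level model (an assumption).

    Uniform over all 256 byte values at the start of a word (high entropy),
    sharply peaked otherwise (low entropy).
    """
    if not prefix or prefix.endswith(b" "):
        return [1.0 / 256] * 256
    probs = [0.1 / 255] * 256
    probs[ord("e")] = 0.9
    return probs


def entropy_patches(
    data: bytes,
    next_byte_probs: Callable[[bytes], Sequence[float]],
    threshold_bits: float,
) -> List[bytes]:
    """Split data into patches, starting a new patch at every position
    whose next-byte prediction entropy exceeds the threshold."""
    patches: List[bytes] = []
    start = 0
    for i in range(1, len(data)):
        # Entropy of the prediction for byte i, given the bytes before it.
        if shannon_entropy_bits(next_byte_probs(data[:i])) > threshold_bits:
            patches.append(data[start:i])
            start = i
    if data:
        patches.append(data[start:])
    return patches


print(entropy_patches(b"the cat sat", toy_next_byte_probs, threshold_bits=4.0))
# [b'the ', b'cat ', b'sat']
```

With this toy predictor the boundaries fall at word starts, which is where a real next-byte model also tends to be least certain. Raising the threshold yields fewer, longer patches; lowering it yields more, shorter ones.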

The local decoder plays a crucial role in generating output bytes from the latent transformer's patch representations. During generation, the small transformer model is queried for the entropy of the next predicted byte, and the current patch ends once that entropy exceeds a certain threshold. This mechanism allows the model to dynamically adjust the size of the patches based on the complexity of the text being processed: predictable stretches form long patches, while hard-to-predict positions start new ones. The video emphasizes that this approach mitigates the issues associated with fixed tokenization, such as out-of-vocabulary problems and the limitations of a large embedding table.

In conclusion, the video summarizes the experimental results presented in the paper, which indicate that the Byte Latent Transformer can achieve performance competitive with traditional tokenized models while using larger patch sizes. The authors demonstrate that their model excels in tasks requiring fine-grained character analysis and performs better on underrepresented languages. While the architecture shows promise, the video notes that further optimization is needed to match the runtime efficiency of established token-based models. Overall, the paper presents a compelling case for patch-based segmentation as an alternative to tokenization in language modeling.