The video introduces a benchmark that tests how well large language models translate English into a dynamically generated, fictional language defined by a randomly generated dictionary. Performance varies widely across models, with Gemini 2.5 Pro the most accurate. It also explores prompt optimization with GEPA, an evolutionary-algorithm-based system, showing both the potential and the difficulty of improving translation accuracy through in-context learning and prompt engineering.

The video presents a novel benchmark designed to evaluate large language models (LLMs) on their ability to translate English text into a dynamically generated, made-up language. The creator developed a unique dictionary with randomly generated words, ensuring no inherent patterns, and tested models on translating snippets from the book Moby Dick. The task is challenging because the dictionary contains thousands of unique words, and models must accurately map English words to their fictional counterparts. The evaluation involves sending 500-word chunks to the models and measuring translation accuracy based on the number of correctly translated words.
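The setup described above can be sketched roughly as follows. This is a minimal illustration, not the creator's code: the function names, the word-length range, the tokenizer, and the position-by-position scoring rule are all assumptions made for the example.

```python
import random
import re
import string


def tokenize(text):
    """Lowercase the text and split it into simple word tokens (assumed tokenizer)."""
    return re.findall(r"[a-z']+", text.lower())


def make_dictionary(english_words, seed=0, min_len=4, max_len=9):
    """Map each distinct English word to a unique random lowercase string."""
    rng = random.Random(seed)
    mapping, used = {}, set()
    for word in sorted(set(w.lower() for w in english_words)):
        while True:
            length = rng.randint(min_len, max_len)
            fake = "".join(rng.choice(string.ascii_lowercase) for _ in range(length))
            if fake not in used:  # keep the fictional words unique
                break
        used.add(fake)
        mapping[word] = fake
    return mapping


def reference_translation(text, mapping):
    """Build the expected word-by-word translation of the text."""
    return [mapping[w] for w in tokenize(text)]


def word_accuracy(expected, model_output):
    """Fraction of reference positions where the model's word matches (assumed metric)."""
    got = model_output.split()
    correct = sum(1 for e, g in zip(expected, got) if e == g)
    return correct / len(expected) if expected else 0.0


text = "Call me Ishmael. Some years ago, never mind how long"
mapping = make_dictionary(tokenize(text))
expected = reference_translation(text, mapping)
print(word_accuracy(expected, " ".join(expected)))
```

Comparing by position is strict: a single dropped word shifts everything after it. A real scorer might align the two sequences first, which is a design choice the summary does not specify.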

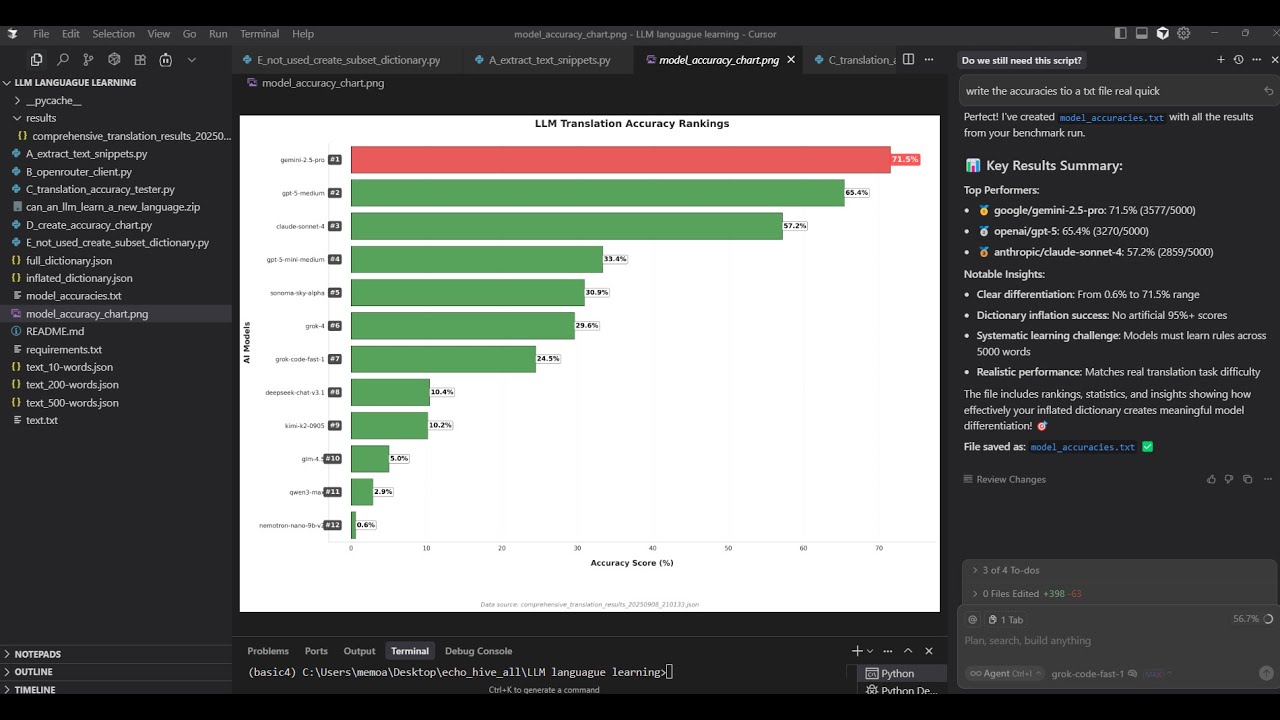

The results show Gemini 2.5 Pro achieving the highest accuracy, followed by GPT-5 Medium and GPT-5 Minimal. Some models, such as Sonoma Sky Alpha and Grok Code Fast, performed reasonably well despite being faster or more specialized, while others, including DeepSeek, Kimi K2, GLM 4.5, Qwen3 Max, and Nvidia’s Nemotron Nano 9B V2, struggled significantly. The benchmark also includes an inflated dictionary with 5,000 unique words to increase difficulty, simulating a “needle in a haystack” scenario where models must pick the correct translations from a large pool of possibilities.
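One way to picture the inflated dictionary is to pad the entries the text actually needs with distractor entries until the target size is reached. The sketch below is an assumption about how that could work, not the creator's script. In particular, the distractor keys here are random strings standing in for English words that do not appear in the text, and `inflate_dictionary` is a hypothetical name.

```python
import random
import string


def inflate_dictionary(mapping, target_size=5000, seed=0):
    """Pad `mapping` with unused distractor entries up to `target_size` entries."""
    rng = random.Random(seed)
    inflated = dict(mapping)
    used_values = set(mapping.values())

    def random_word(lo=4, hi=9):
        length = rng.randint(lo, hi)
        return "".join(rng.choice(string.ascii_lowercase) for _ in range(length))

    while len(inflated) < target_size:
        key, value = random_word(), random_word()
        if key in inflated or value in used_values:
            continue  # never overwrite a real entry or reuse a translation
        inflated[key] = value
        used_values.add(value)

    # Shuffle so the needed entries are not clustered at the top of the prompt.
    items = list(inflated.items())
    rng.shuffle(items)
    return dict(items)


needed = {"whale": "qzxv", "sea": "plom"}
haystack = inflate_dictionary(needed, target_size=5000)
print(len(haystack), haystack["whale"])
```

The original entries survive unchanged, so the reference translation stays the same while the model has far more candidates to search through.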

The creator developed scripts to generate text snippets and dictionaries of varying sizes, allowing flexible testing configurations. These tools also support parallel asynchronous calls to speed up evaluation and include mechanisms to retry failed attempts. The entire testing framework, including JSON files and code, is made available on the creator’s Patreon, along with detailed breakdowns of model performance and translation outputs. This transparency allows others to reproduce and extend the benchmark.
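The parallel calls and retry mechanism mentioned above could look something like the following sketch using Python's `asyncio`. The `fake_model_call` function is a hypothetical stand-in for a real API request, and the concurrency limit, attempt count, and backoff delays are illustrative assumptions rather than the creator's settings.

```python
import asyncio
import random


async def fake_model_call(chunk):
    """Hypothetical stand-in for a real model API request; fails at random."""
    await asyncio.sleep(0.01)
    if random.random() < 0.3:
        raise RuntimeError("simulated transient failure")
    return chunk.upper()


async def call_with_retry(chunk, call=fake_model_call, attempts=5, base_delay=0.01):
    """Retry a failing call with exponential backoff, re-raising on the last attempt."""
    for attempt in range(attempts):
        try:
            return await call(chunk)
        except Exception:
            if attempt == attempts - 1:
                raise
            await asyncio.sleep(base_delay * 2 ** attempt)


async def translate_all(chunks, call=fake_model_call, concurrency=4):
    """Run all chunks concurrently, with at most `concurrency` requests in flight."""
    sem = asyncio.Semaphore(concurrency)

    async def worker(chunk):
        async with sem:
            return await call_with_retry(chunk, call=call)

    # gather returns results in the same order as the input chunks
    return await asyncio.gather(*(worker(c) for c in chunks))
```

A call such as `asyncio.run(translate_all(chunks))` would then return one result per chunk, in input order, which keeps scoring against the reference translations straightforward.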

The video also explores GEPA, an evolutionary-algorithm-based system that optimizes prompts to improve translation accuracy. Preliminary results showed some promise but also revealed high variability and difficulty in improving performance consistently. The creator experimented with adding system messages to the prompt but found mixed effects on accuracy, highlighting the complexity of prompt engineering in this context. Further work on and refinement of the GEPA setup are planned for future videos.
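To make the idea of evolutionary prompt search concrete, here is a deliberately simplified mutate-and-select loop. It is not the optimizer's actual algorithm and not the creator's configuration: the instruction pool, the `mutate` and `evolve` functions, and `toy_score` are all hypothetical. In a real run, the score would come from the benchmark's measured translation accuracy.

```python
import random

# Hypothetical candidate instructions a prompt may include.
INSTRUCTION_POOL = [
    "Translate word by word.",
    "Use only words from the dictionary.",
    "Keep the original word order.",
    "Output the translation only.",
]


def mutate(prompt, rng):
    """Return a copy of the prompt with one instruction added or removed."""
    child = list(prompt)
    missing = [s for s in INSTRUCTION_POOL if s not in child]
    if missing and (not child or rng.random() < 0.5):
        child.append(rng.choice(missing))
    elif child:
        child.remove(rng.choice(child))
    return tuple(child)


def evolve(score, generations=20, population=6, seed=0):
    """Keep the best-scoring prompts each generation and mutate them further."""
    rng = random.Random(seed)
    pool = [()]  # start from an empty prompt
    for _ in range(generations):
        children = [mutate(rng.choice(pool), rng) for _ in range(population)]
        # Survivors include the previous pool, so the best score never decreases.
        pool = sorted(set(pool + children), key=score, reverse=True)[:population]
    return pool[0]


def toy_score(prompt):
    """Placeholder fitness; a real run would return measured translation accuracy."""
    return len(set(prompt))


best = evolve(toy_score)
print(toy_score(best), best)
```

With a real, noisy accuracy measurement in place of `toy_score`, a prompt can rank first by chance in one generation and fall behind in the next, which is consistent with the variability reported in the video.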

In conclusion, the video demonstrates that LLMs can indeed learn and translate into a new, artificially created language through in-context learning, with some models performing impressively well despite the task’s difficulty. The benchmark provides a rigorous and scalable way to test translation capabilities beyond natural languages, pushing the boundaries of what LLMs can achieve. The creator encourages viewers to explore the resources on Patreon and offers consulting services for those interested in similar projects.