Character.AI is barring users under 18 from open-ended conversations with its chatbots in response to lawsuits, regulatory scrutiny, and concerns about potential harm to teenage users. The move aligns with broader legislative efforts to regulate AI chatbot use among minors and reflects the company’s cautious approach to managing risk as AI regulation evolves.

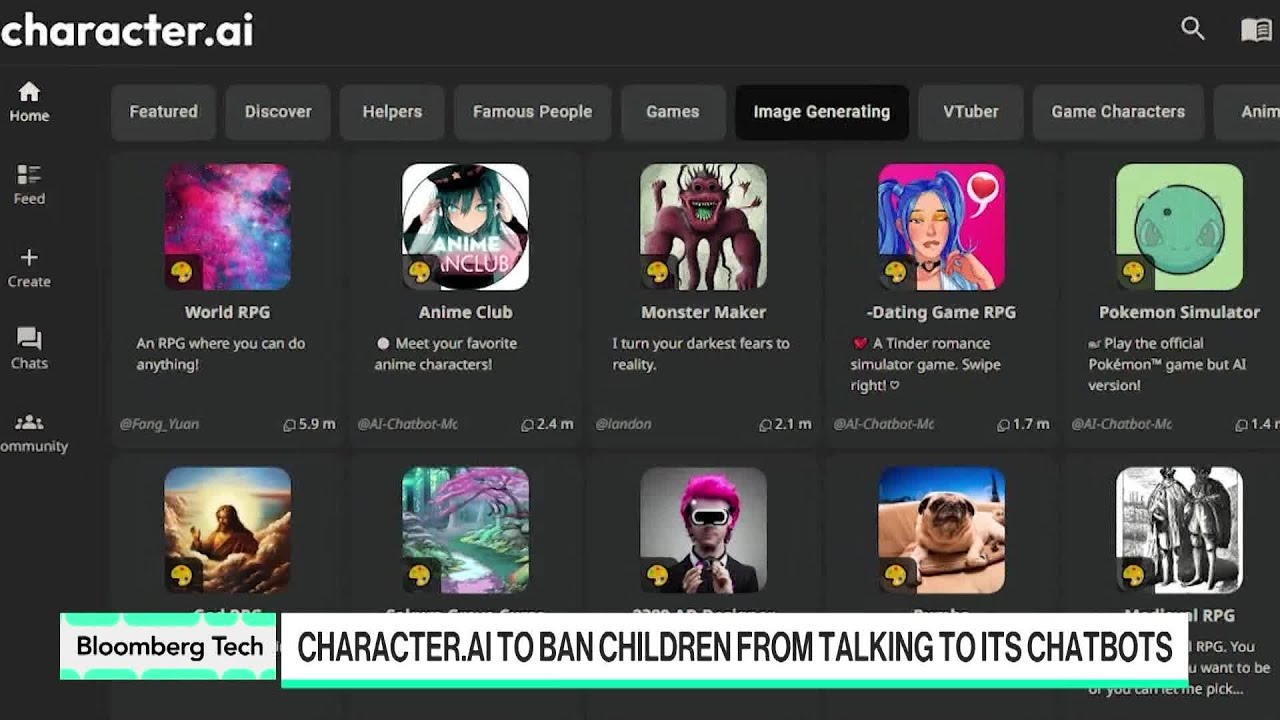

Character.AI has been an intriguing startup to watch, largely because of its approach to AI avatars. The platform lets users create and interact with a wide variety of chatbots, from video game characters like Mario to impersonations of celebrities such as Elon Musk. This open-ended creativity places Character.AI squarely in the current “Wild West” phase of AI chatbot development, in which many different kinds of bots are being built and deployed experimentally.

Character.AI’s user base skews young, with a significant share of teenagers, and that has raised concerns. Parents have filed multiple lawsuits against Character.AI, alleging that the platform harmed their teenage children, in some cases with outcomes as severe as suicide. These legal challenges have drawn the attention of regulators: the Federal Trade Commission (FTC) has launched investigations into AI chatbot makers to assess the risks to children who use these technologies and the protections they need.

In response to these concerns and the regulatory pressure, Character.AI has announced new measures that stop users under 18 from holding unrestricted conversations with its chatbots. Teenagers aged 13 to 17 may still access some features, but they will no longer be able to have endless back-and-forth chats with the AI characters, which the company refers to as “chat boxes.” The move reads as a proactive step to address potential harms and get ahead of regulatory scrutiny.

Character.AI’s decision coincides with legislative efforts, including a bill introduced in the Senate that would ban AI chatbot use by anyone under 18 altogether. By imposing its own age-based restrictions, Character.AI appears to be trying to stay ahead of regulatory mandates and to show that it takes responsibility for the risks AI interactions pose to minors. The company acknowledges that much remains unknown about the long-term effects of AI chatbots on young users.

Ultimately, Character.AI’s approach reflects a cautious stance amid evolving AI regulation and growing public concern. The company admits it is uncertain about the full impact of its technology on children and believes that limiting access for minors is the most prudent course of action for now. The development highlights a broader challenge for AI developers: balancing innovation with user safety, especially for vulnerable populations like teenagers.