In the video, a leading AI researcher explains the limitations of large language models (LLMs) in reasoning and planning, emphasizing that they often rely on memorization and statistical patterns rather than true understanding. He advocates for combining LLMs with external verification systems to enhance their reasoning capabilities and encourages a critical approach to AI research, highlighting the potential for future advancements through hybrid models.

In the video, a leading AI researcher discusses the limitations of large language models (LLMs) in terms of reasoning and planning capabilities. He highlights a common misconception that LLMs can genuinely reason like humans. Using the example of why manhole covers are round, he explains that many responses to reasoning questions may stem from memorization rather than true understanding. The speaker emphasizes that while LLMs can generate impressively coherent text, they often fall short in reasoning tasks, especially when faced with novel situations or questions that require deeper logical connections.

The researcher delves into the mechanics of LLMs, describing them as advanced n-gram models that predict the next word based on vast amounts of training data. He points out that although they produce well-structured, grammatically correct sentences, this fluency does not amount to reasoning ability. Instead, they draw on statistical patterns in their training data, which makes their answers closer to retrieval than to genuine deductive reasoning. The discussion includes various tests demonstrating that LLMs struggle with tasks requiring logical inference or planning, particularly when the problem is framed differently from their training examples.
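To make the n-gram analogy concrete, here is a minimal sketch of a bigram next-word predictor. It is a toy illustration only, not how an LLM is implemented: the corpus, the function names `train_bigram` and `predict_next`, and the choice of a bigram (n = 2) are all assumptions made for this example. It shows the point made above: the model can only return what it has counted, so an unseen context yields nothing.

```python
from collections import Counter, defaultdict

def train_bigram(tokens):
    """Count, for each word, which words follow it in the training text."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if `word` was never seen."""
    followers = counts.get(word)  # .get() avoids creating an empty entry
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Hypothetical toy corpus, chosen only for illustration.
corpus = "manhole covers are round because round covers cannot fall in".split()
model = train_bigram(corpus)

print(predict_next(model, "are"))     # context seen in training
print(predict_next(model, "square"))  # novel context: no statistics to draw on
```

The prediction for a seen word is pure lookup of counted patterns, which is the sense in which the speaker calls the behavior retrieval rather than deduction.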

A significant portion of the conversation focuses on the “LLM-Modulo” framework, an architecture that combines LLMs with external verification systems to enhance their reasoning capabilities. The speaker suggests that by using LLMs for idea generation and pairing them with more traditional systems for verification, it is possible to create a more robust framework for problem-solving. He emphasizes the importance of using external verifiers to check the correctness of generated plans and ideas, noting that self-critique by LLMs often leads to worse outcomes, because the model may flag correct answers as wrong or hallucinate problems that do not exist.
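The generate-and-verify idea described above can be sketched as a simple loop. This is a minimal sketch under stated assumptions, not the framework's actual implementation: the toy planning task (reach a goal number using `double` and `add3`), the `verify` function, and `stub_llm` (a canned stand-in for a real model call) are all hypothetical names invented for illustration. The key property is that acceptance is decided by the external verifier, never by the generator itself.

```python
from typing import Callable, List, Optional, Tuple

Plan = List[str]

def verify(plan: Plan, start: int, goal: int) -> Tuple[bool, str]:
    """External verifier: simulate the plan step by step and report any problem."""
    value = start
    for step in plan:
        if step == "double":
            value *= 2
        elif step == "add3":
            value += 3
        else:
            return False, f"unknown action {step!r}"
    if value != goal:
        return False, f"plan ends at {value}, expected {goal}"
    return True, "ok"

def stub_llm(critiques: List[str]) -> Plan:
    """Hypothetical stand-in for an LLM call: returns canned guesses in order."""
    guesses = [["add3", "add3"], ["double", "jump"], ["add3", "double"]]
    return guesses[min(len(critiques), len(guesses) - 1)]

def llm_modulo(propose: Callable[[List[str]], Plan],
               start: int, goal: int, max_rounds: int = 5) -> Optional[Plan]:
    """Ask the generator for plans until the verifier accepts one or rounds run out."""
    critiques: List[str] = []
    for _ in range(max_rounds):
        plan = propose(critiques)
        ok, message = verify(plan, start, goal)
        if ok:
            return plan            # correctness is certified by the verifier
        critiques.append(message)  # feed the critique back to the generator
    return None                    # no verified plan found within the budget

result = llm_modulo(stub_llm, start=2, goal=10)
print(result)
```

Because only verified plans are returned, any plan the loop outputs is correct with respect to the verifier, regardless of how unreliable the generator is. The cost is that the loop may return nothing when the generator never proposes a valid plan.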

As the discussion progresses, the speaker critiques the growing trend of viewing LLMs as generalist models capable of reasoning in a manner akin to human intelligence. He stresses that while LLMs may excel in generating ideas, they lack the necessary grounding in logic and structured reasoning to handle complex planning tasks effectively. The conversation touches on the significance of maintaining skepticism in AI research, particularly regarding claims about the capabilities of emerging models. The speaker concludes with advice for young researchers, encouraging them to maintain a broad understanding of various AI principles and to approach empirical studies with critical thinking.

Finally, the video highlights the potential for future advancements in AI by integrating LLMs with more structured reasoning frameworks. The researcher expresses optimism about the role of hybrid models that combine neural and symbolic approaches, allowing for better handling of reasoning tasks while still utilizing the creative strengths of LLMs. He encourages the exploration of new architectures that could enhance the capabilities of AI systems and underscores the need for rigorous validation of claims in the field. Overall, the discussion serves as a call for a more nuanced understanding of LLMs and their place within the broader landscape of AI research and application.

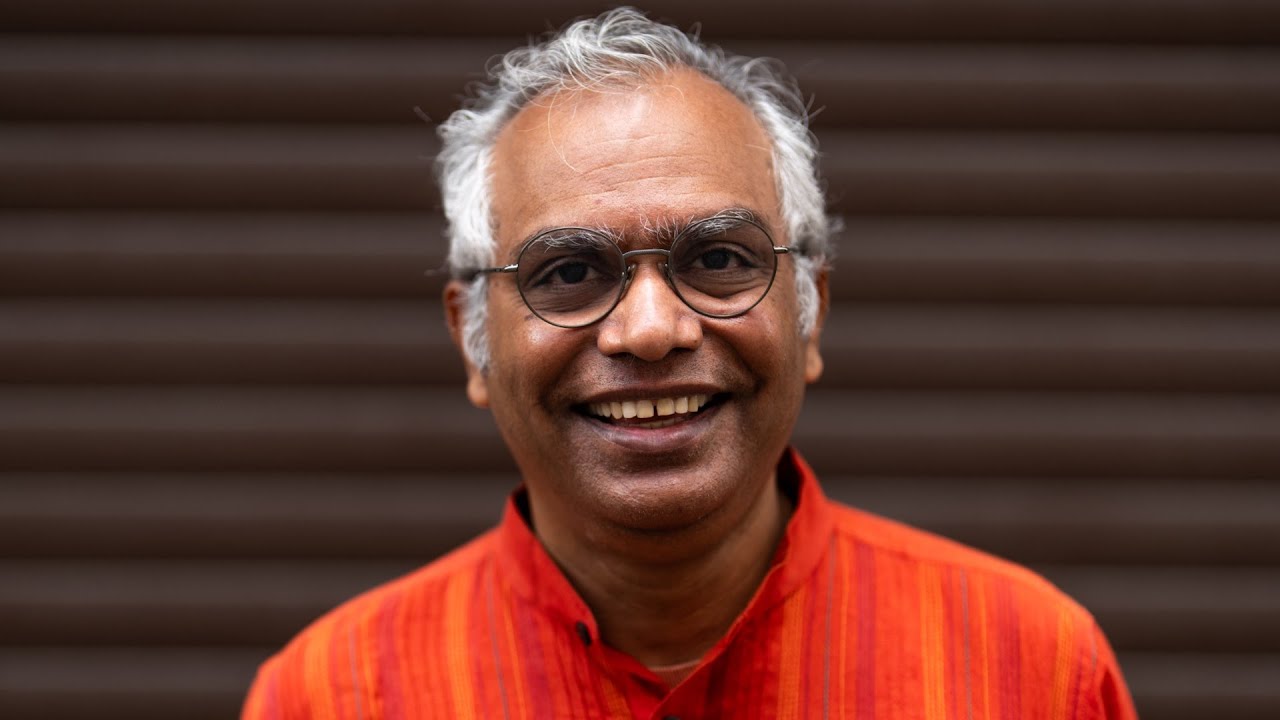

Prof. Subbarao Kambhampati argues that while LLMs are impressive and useful tools, especially for creative tasks, they have fundamental limitations in logical reasoning and cannot provide guarantees about the correctness of their outputs. He advocates for hybrid approaches that combine LLMs with external verification systems.

MLST is sponsored by Brave: The Brave Search API covers over 20 billion webpages, built from scratch without Big Tech biases or the recent extortionate price hikes on search API access. Perfect for AI model training and retrieval-augmented generation. Try it now - get 2,000 free queries monthly at http://brave.com/api.

This is 2/13 of our #ICML2024 series

TOC

[00:00:00] Intro

[00:02:06] Bio

[00:03:02] LLMs are n-gram models on steroids

[00:07:26] Is natural language a formal language?

[00:08:34] Natural language is formal?

[00:11:01] Do LLMs reason?

[00:19:13] Definition of reasoning

[00:31:40] Creativity in reasoning

[00:50:27] Chollet’s ARC challenge

[01:01:31] Can we reason without verification?

[01:10:00] LLMs can’t solve some tasks

[01:19:07] LLM Modulo framework

[01:29:26] Future trends of architecture

[01:34:48] Future research directions

Refs:

Can LLMs Really Reason and Plan? (Communications of the ACM)

On the Planning Abilities of Large Language Models: A Critical Investigation https://arxiv.org/pdf/2305.15771

Chain of Thoughtlessness? An Analysis of CoT in Planning https://arxiv.org/pdf/2405.04776

On the Self-Verification Limitations of Large Language Models on Reasoning and Planning Tasks https://arxiv.org/pdf/2402.08115

LLMs Can’t Plan, But Can Help Planning in LLM-Modulo Frameworks https://arxiv.org/pdf/2402.01817

Embers of Autoregression: Understanding Large Language Models Through the Problem They are Trained to Solve https://arxiv.org/pdf/2309.13638

“Task Success” is not Enough: Investigating the Use of Video-Language Models as Behavior Critics for Catching Undesirable Agent Behaviors https://arxiv.org/pdf/2402.04210

Faith and Fate: Limits of Transformers on Compositionality “finetuning multiplication with four digit numbers” (added after pub) https://arxiv.org/pdf/2305