The video discusses how China’s DeepSeek has developed a new, more efficient AI training method, Manifold-Constrained Hyper-Connections (mHC), that could outperform U.S. approaches reliant on massive hardware investments, and it highlights the innovation driven by China’s resource constraints. The host argues that open, resource-efficient AI development may ultimately surpass the proprietary, hardware-intensive strategies favored by American companies, potentially disrupting the current tech industry landscape.

The video, hosted by Eli the Computer Guy, discusses the ongoing competition between the United States and China in the field of artificial intelligence (AI), focusing on a new training method developed by the Chinese startup DeepSeek. Eli emphasizes that the current AI technology stack is still immature, much like web development was in the early 2000s, and that the industry is still figuring out the most efficient and effective ways to build and scale AI systems. He contrasts mature technology stacks, which are well-understood and standardized, with the current state of AI, where best practices and optimal architectures are still being discovered.

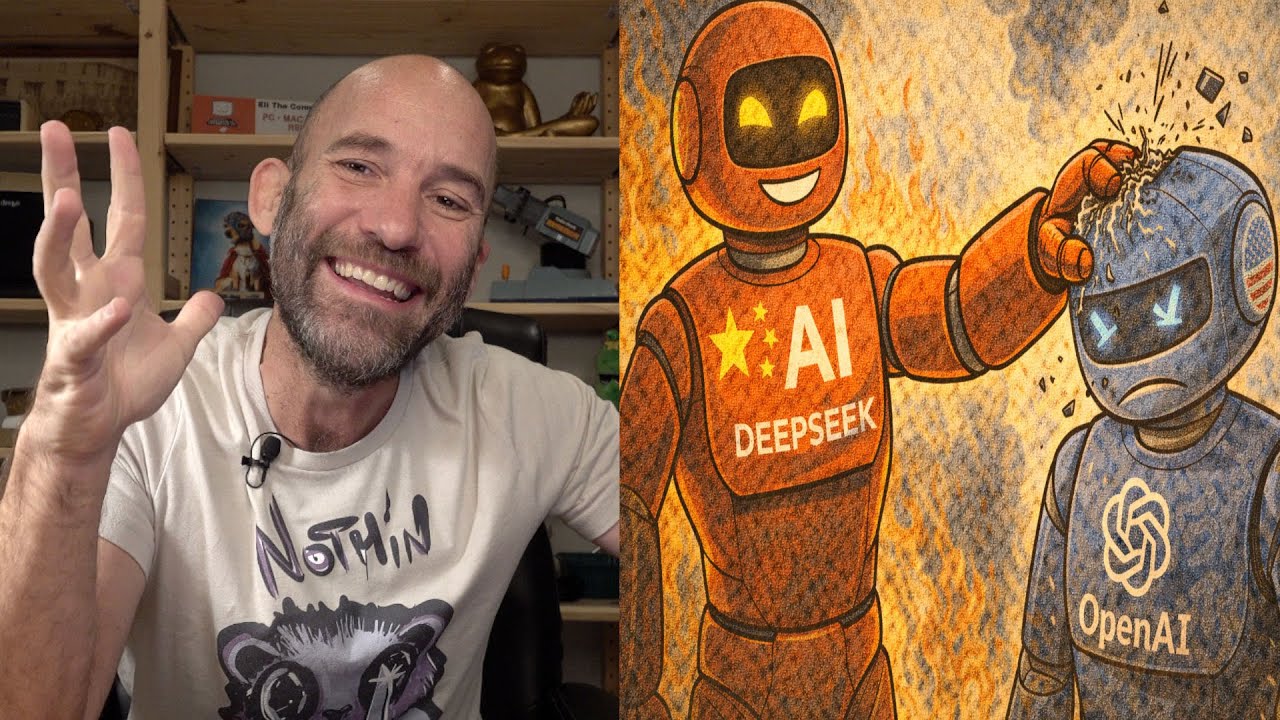

A central theme is the difference in approach between American and Chinese AI development. In the U.S., companies like OpenAI operate under the assumption that better AI requires ever-increasing amounts of data and hardware, leading to massive investments in GPUs, data centers, and infrastructure. Eli points out that Sam Altman, CEO of OpenAI, has reportedly signed contracts for over a trillion dollars in capital expenditures, all based on the belief that scaling hardware is the key to superior AI. Eli likens this approach to building the fastest, most expensive car, even if most people only need a reliable vehicle for everyday tasks.

In contrast, Chinese companies, constrained by limited access to cutting-edge hardware due to export restrictions, are forced to innovate with fewer resources. DeepSeek’s new method, called Manifold-Constrained Hyper-Connections (mHC), aims to train larger AI models more efficiently, using less computational power and memory. This architectural rethink is a response to resource scarcity and could potentially lead to more cost-effective and scalable AI systems. Eli notes that such constraints often drive innovation, as seen historically in other areas of technology.

The video also touches on the philosophical and practical differences between the two countries’ approaches to intellectual property and openness. While American companies are increasingly moving towards proprietary, closed-source models, Chinese firms are publishing more of their research openly. Eli suggests that open, resource-constrained development may ultimately prove more sustainable and widely adopted than the resource-unconstrained, proprietary approach favored in the U.S. He warns that if China succeeds in building competitive AI with far fewer resources, it could undermine the massive investments and valuations currently seen in American AI companies.

Finally, Eli expresses concern about the broader economic implications of the current AI boom in the U.S., which he believes is propping up the economy in an unsustainable way. He draws parallels to previous economic bubbles and warns that a sudden shift, such as China releasing highly efficient, low-cost AI solutions, could trigger a collapse in the value of American tech giants and have widespread financial repercussions. He concludes by asking viewers to consider which system is more likely to succeed in the long run: open and resource-constrained, or proprietary and resource-unconstrained. He also invites them to support his free technology education initiative, Silicon Dojo.