The video demonstrates how to integrate ComfyUI with Blender to create customizable, AI-generated 3D scenes using Nvidia’s RTX GPUs and NIM microservices. Styles and scene variations can be changed simply by editing prompts. It walks viewers through the setup process and the connection between Blender and ComfyUI. It then shows how the workflow supports rapid prototyping and creative experimentation in 3D design.

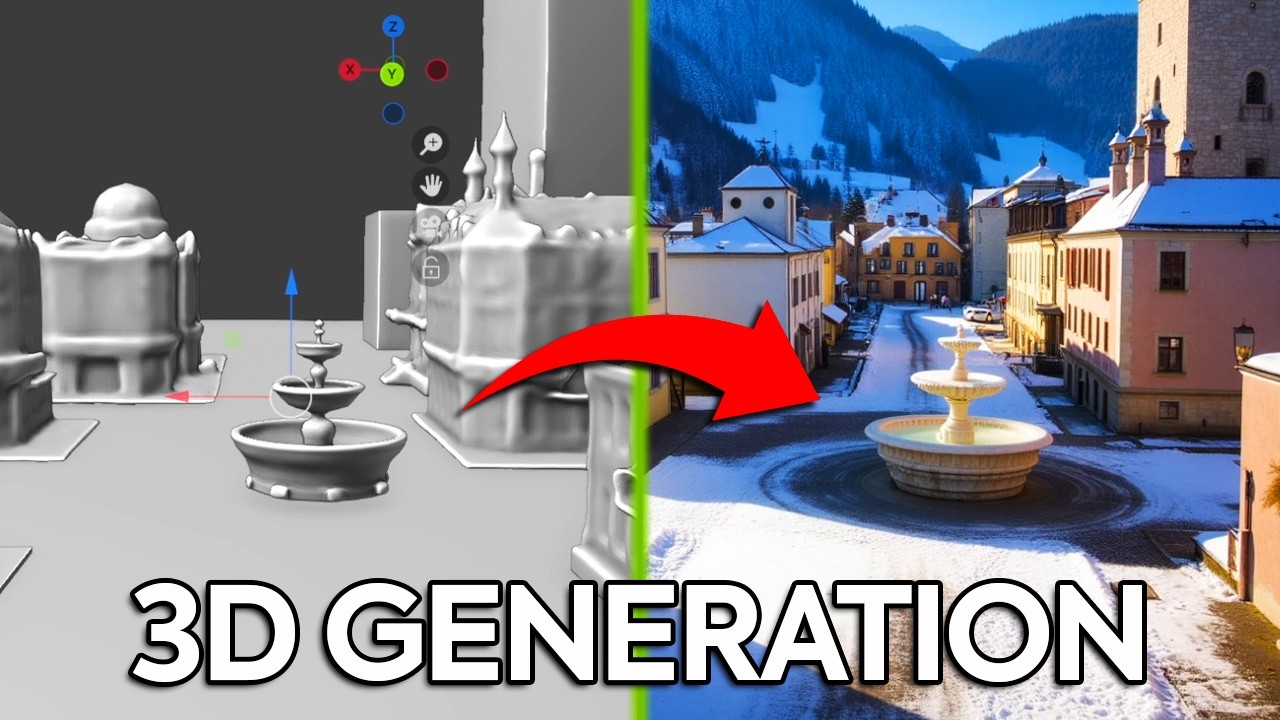

The video introduces ComfyUI integrated within Blender and shows what it can do for building detailed 3D scenes. With ComfyUI, users can apply different visual styles ("skins") to a 3D environment and have empty areas filled in automatically, all controlled through an intuitive interface. The presenter demonstrates this by turning a simple town scene into several styles, such as a hyper-modern town or a cartoon version, just by changing the prompt. The underlying scene remains a full 3D environment in Blender, so it can be viewed from different angles and perspectives. This opens up a wide range of creative options.

The tutorial emphasizes that the system is built around Nvidia’s RTX GPUs and powered by Nvidia NIM microservices, which handle the AI-driven image generation. It is an open-source project that runs locally on the user’s machine, so it requires substantial hardware such as a high-end GPU and ample RAM. Nvidia partnered with the presenter to show how to set up the framework. Viewers are encouraged to experiment, give feedback, and contribute by forking the repository. The goal is to help creators use AI to design and customize 3D scenes more efficiently.

The setup process involves several steps, starting with downloading the Nvidia NIM prerequisite installer and installing the required software: Git, Visual C++, and Blender. Users must also obtain a Hugging Face API token to access the diffusion models used in the AI pipeline. The presenter then walks through three steps:

- cloning the Nvidia AI Blueprint repository;
- running a setup batch file that downloads the models locally;
- configuring Blender with the correct paths so it can connect to ComfyUI.

The process is detailed but straightforward, and the presenter stresses that even beginners can follow it with some patience.
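Before running the setup batch file, it can help to confirm that the prerequisites are actually in place. The script below is a minimal sketch for that purpose. It is a hypothetical helper, not part of the Nvidia blueprint.

It rests on several assumptions:

- The executable names `git` and `blender` are assumptions. Blender is often installed without being added to `PATH`, so a "missing" result may only mean it is not on `PATH`.
- The `HF_TOKEN` environment variable is the one the Hugging Face tooling reads. Whether the blueprint’s own setup reads it, rather than prompting for the token, is also an assumption.
- Visual C++ is not checked, because it is a runtime library rather than a command-line tool.

```python
import os
import shutil

# Hypothetical helper, not part of the Nvidia blueprint.
# Assumption: these are the executable names the setup relies on.
REQUIRED_TOOLS = ("git", "blender")


def check_prerequisites(tools=REQUIRED_TOOLS):
    """Map each tool name to its resolved path on PATH, or None if not found."""
    return {tool: shutil.which(tool) for tool in tools}


def missing_tools(report):
    """Return the names of tools that could not be located."""
    return [name for name, path in report.items() if path is None]


def has_hf_token(env=None):
    """True if a non-empty Hugging Face token is set (assumes HF_TOKEN is used)."""
    env = os.environ if env is None else env
    return bool(env.get("HF_TOKEN", "").strip())


if __name__ == "__main__":
    report = check_prerequisites()
    for name, path in report.items():
        print(f"{name:8} {path if path else 'NOT FOUND on PATH'}")
    missing = missing_tools(report)
    if missing:
        print("Install or add to PATH before setup:", ", ".join(missing))
    if not has_hf_token():
        print("No HF_TOKEN set; you may be prompted for a Hugging Face token.")
```

Because the check relies only on `shutil.which` and environment lookups, it runs without network access. It does not modify anything, so it is safe to run repeatedly.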

Once the environment is prepared, users open Blender and load the provided guided scene file. They then start the connection to ComfyUI from within Blender; the first launch can take a while. Once connected, users can manipulate assets in Blender, adjust prompts, and generate new scene variations through ComfyUI’s interface. The presenter shows how changing the prompt affects the generated objects. He stresses that clear, explicit descriptions are essential for getting the desired result, especially for specific elements such as boats.
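Because the first connection can take some time, a simple way to tell whether ComfyUI is up yet is to poll its local HTTP server. The sketch below assumes ComfyUI listens on its usual default address, `http://127.0.0.1:8188`, and exposes a `/system_stats` endpoint. The blueprint may launch it on a different host or port.

The functions `comfyui_is_reachable` and `wait_for_comfyui` are illustrative names, not part of the blueprint or of Blender’s add-on.

```python
import json
import time
import urllib.error
import urllib.request

# Assumption: ComfyUI's usual default address; adjust if the blueprint differs.
COMFYUI_URL = "http://127.0.0.1:8188"


def comfyui_is_reachable(base_url=COMFYUI_URL, timeout=2.0):
    """Return True if a ComfyUI server answers /system_stats with JSON."""
    try:
        with urllib.request.urlopen(f"{base_url}/system_stats", timeout=timeout) as resp:
            json.load(resp)  # a running server replies with a JSON description
            return resp.status == 200
    except (urllib.error.URLError, OSError, ValueError):
        # Refused connection, timeout, bad URL, or non-JSON reply: not ready.
        return False


def wait_for_comfyui(base_url=COMFYUI_URL, attempts=30, delay=2.0):
    """Poll until ComfyUI responds or the attempts run out."""
    for _ in range(attempts):
        if comfyui_is_reachable(base_url):
            return True
        time.sleep(delay)
    return False


if __name__ == "__main__":
    print("ComfyUI ready" if wait_for_comfyui(attempts=5) else "ComfyUI not reachable yet")
```

Polling with a short timeout keeps each check cheap. The retry loop covers the slow first start without blocking indefinitely.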

Throughout the tutorial, the presenter highlights the flexibility of combining Blender with ComfyUI and Nvidia’s AI models. The system supports rapid prototyping and creative experimentation while being free and running entirely locally. He encourages viewers to explore the open-source project, provide feedback, and build on it. The video closes by stressing the collaborative nature of the project and inviting users to share ideas and improvements. It positions the setup as a useful tool for artists and developers interested in AI-assisted 3D scene creation.