The video critiques the AI system DeepSeek for allegedly copying data from existing models like OpenAI’s, while being heavily censored by the Chinese Communist Party and raising concerns about data privacy and misinformation. The speaker warns that DeepSeek’s reliance on flawed datasets and its censorship practices could lead to unreliable information and potential surveillance of users.

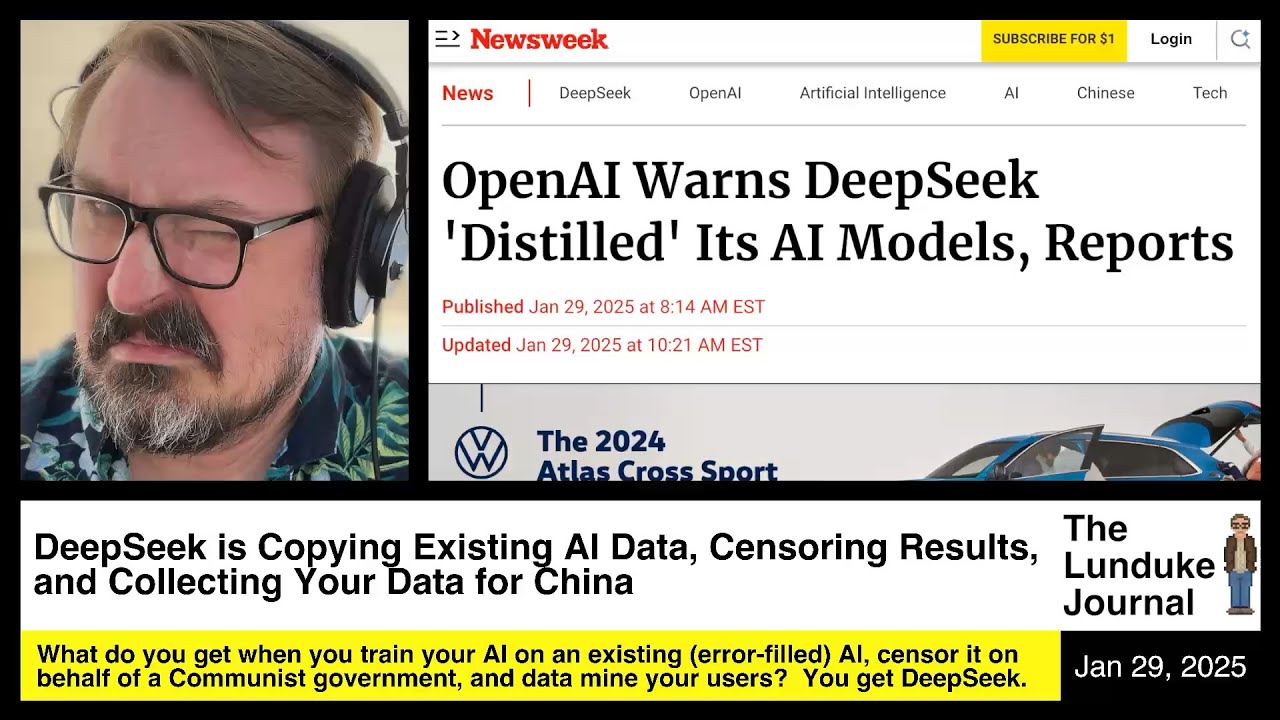

The video discusses the controversial AI system DeepSeek, which is accused of copying data from existing AI models, particularly OpenAI's, while also being heavily censored by the Chinese Communist Party. The speaker questions DeepSeek's training methods and suggests that it used a technique called "knowledge distillation" to replicate OpenAI's technology at a lower cost. These suspicions have drawn scrutiny from major tech companies such as OpenAI and Microsoft, which suspect that data was exfiltrated through OpenAI developer accounts linked to DeepSeek.

The speaker highlights the ethical implications of these practices, claiming that DeepSeek was trained on a flawed dataset from OpenAI that is known to contain numerous errors. If so, the information DeepSeek produces may be unreliable, because the model could inherit and even amplify the inaccuracies in the original data. The speaker argues that relying on such an error-prone system for factual information could spread further misinformation.

The video also addresses security concerns, noting that the White House is reportedly investigating the national security implications of DeepSeek's AI advancements. According to the speaker, DeepSeek not only collects vast amounts of user data but also shares it with external partners, potentially including the Chinese government. This raises alarms about privacy and about how much surveillance users may be subjected to when they use DeepSeek's services.

The video also examines the extent of the censorship the Chinese Communist Party imposes on DeepSeek, showing how the system is programmed to avoid sensitive topics related to China. The speaker gives examples: queries about historical events such as the Tiananmen Square protests receive evasive responses, while comparable queries about events in other countries receive detailed answers. The contrast suggests that what DeepSeek is willing to discuss depends on how sensitive a topic is to the Chinese government.

In conclusion, the speaker warns viewers about the potential dangers of using DeepSeek, describing it as a highly censored, error-prone AI system that doubles as a data mining operation for the Chinese Communist Party. The video pushes back on the excitement surrounding DeepSeek's efficiency, arguing that its advantages come at the expense of ethical practices and user privacy. The speaker urges viewers to stay cautious and informed about the implications of using such technology and stresses the need for transparency and accountability in AI development.