The video showcases the DeepSeek R1 671b model running on a budget $500 PC, highlighting how usable it is with a low-cost processor and ample RAM. The presenter tests several models, notes significant differences in processing speed, and encourages viewers to consider flexible, future-proof systems for AI applications.

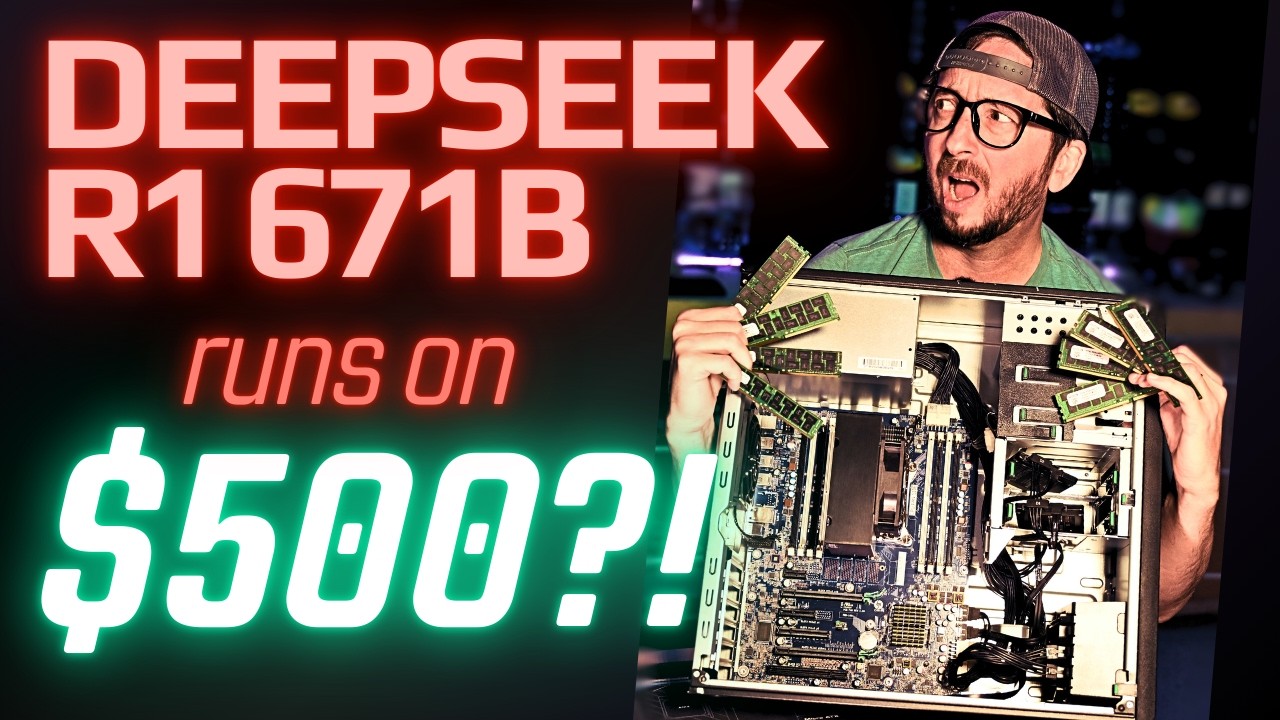

The presenter demonstrates that the DeepSeek R1 671b model can run on a budget-friendly $500 PC built around an HP Z440 workstation, with a $5 processor and 512 GB of RAM. The presenter expresses surprise at the performance achieved, particularly the tokens processed per second. The video is divided into chapters, so viewers can skip to specific sections, including the conclusion for key takeaways.

Assembly involves installing the 64 GB RAM modules and ensuring proper contact with the CPU. The presenter notes that although some warning messages appear during boot-up, the system operates correctly. The DeepSeek model itself is very large and requires significant storage space. Initial performance statistics show around two tokens per second for both prompt and response tokens.
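To give a sense of why a model of this size needs so much memory, here is a minimal back-of-the-envelope sketch. It assumes the weights dominate the footprint and ignores the KV cache and runtime overhead. The summary does not state which quantization was used in the video, so the bit widths below are illustrative assumptions, and `model_size_gb` is a hypothetical helper name.

```python
def model_size_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate weight storage in GB (10^9 bytes).

    Ignores KV cache, activations, and runtime overhead.
    """
    return num_params * bits_per_weight / 8 / 1e9


PARAMS_671B = 671e9   # parameter count implied by the "671b" model name
SYSTEM_RAM_GB = 512   # RAM in the build described above

# Bit widths are assumptions for illustration, not figures from the video.
for bits in (4, 8, 16):
    size = model_size_gb(PARAMS_671B, bits)
    verdict = "fits" if size < SYSTEM_RAM_GB else "does not fit"
    print(f"{bits}-bit weights: ~{size:.0f} GB ({verdict} in {SYSTEM_RAM_GB} GB of RAM)")
```

Under these assumptions, only a heavily quantized copy of the weights would fit in 512 GB, which is consistent with the emphasis on ample RAM.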

As the video progresses, the presenter tests various prompts and models to gauge inference speed, which varies significantly from model to model. For instance, the Gemma 3 model demonstrates impressive speed, generating 15 response tokens per second, while larger models like Kito 14b are slower. The presenter emphasizes the impact of model size and complexity on processing speed.

The video also discusses using different CPUs and configurations to optimize performance. The presenter compares the $5 CPU with a more expensive CPU, noting that while the cheaper chip performs worse, it still provides usable results. The discussion highlights the importance of memory bandwidth and the differing capabilities of CPUs, and suggests that users future-proof their systems for evolving AI demands.
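The role of memory bandwidth can be illustrated with a rough upper-bound estimate: when generating text on a CPU, each new token requires reading the active weights from RAM, so bandwidth divided by bytes read per token caps the token rate. The sketch below is not taken from the video. The 68 GB/s bandwidth figure (roughly quad-channel DDR4-2133 in theory), the 4-bit quantization, and the hypothetical helper `max_tokens_per_second` are all assumptions. The 37 billion active parameters per token is DeepSeek's published figure for this mixture-of-experts model and does not appear in the summary.

```python
def max_tokens_per_second(bandwidth_gb_s: float,
                          active_params: float,
                          bits_per_weight: float) -> float:
    """Bandwidth-bound ceiling on generation speed.

    Assumes every active weight is read from RAM once per token and
    that nothing else (compute, cache misses, NUMA) limits throughput.
    """
    bytes_per_token = active_params * bits_per_weight / 8
    return bandwidth_gb_s * 1e9 / bytes_per_token


# Illustrative assumptions, not measurements from the video.
BANDWIDTH_GB_S = 68    # assumed theoretical quad-channel DDR4-2133
ACTIVE_PARAMS = 37e9   # active parameters per token (mixture-of-experts)
BITS_PER_WEIGHT = 4    # assumed quantization

ceiling = max_tokens_per_second(BANDWIDTH_GB_S, ACTIVE_PARAMS, BITS_PER_WEIGHT)
print(f"Theoretical ceiling: {ceiling:.1f} tokens/s")
```

Real throughput will sit below this ceiling, since sustained bandwidth is lower than the theoretical peak and compute also takes time. The estimate mainly shows why a faster CPU helps less than more memory channels or faster RAM.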

In conclusion, the presenter encourages viewers to think about building flexible systems that can adapt to future advancements in AI technology. They recommend considering mid-range models like Gemma 3 for a balanced experience and stress the importance of having sufficient RAM for optimal performance. The video wraps up with a call for viewer engagement, inviting feedback and suggestions, and emphasizes the growing accessibility of AI systems across various hardware configurations.