The video introduces DeepSeek’s latest update, R1-0528 (referred to in the video as R1-V2). It highlights the model’s improved reasoning and general capabilities, though it remains less powerful than top models such as Claude 4, and it emphasizes China’s rapid AI development despite hardware limitations. The video demonstrates the model’s versatility in creative and technical tasks, discusses its open-weight availability, and explores broader implications for the global AI race, noting that development is ongoing and further releases are expected.

The video discusses the release of DeepSeek’s latest model, referred to as DeepSeek R1-V2 or R1-0528, which is an incremental update rather than a full new version such as R2. The creator explains that the update was foreshadowed through notifications and hints on social media, and that it brings improvements in reasoning and core functionality. Although the model has not yet been benchmarked extensively, initial impressions suggest it performs well on certain tasks, sometimes rivaling models such as Claude 4 Opus, but overall it remains somewhat underwhelming by comparison. The release indicates that DeepSeek has been enhancing its models behind the scenes since the original R1, which had a significant impact on the AI community.

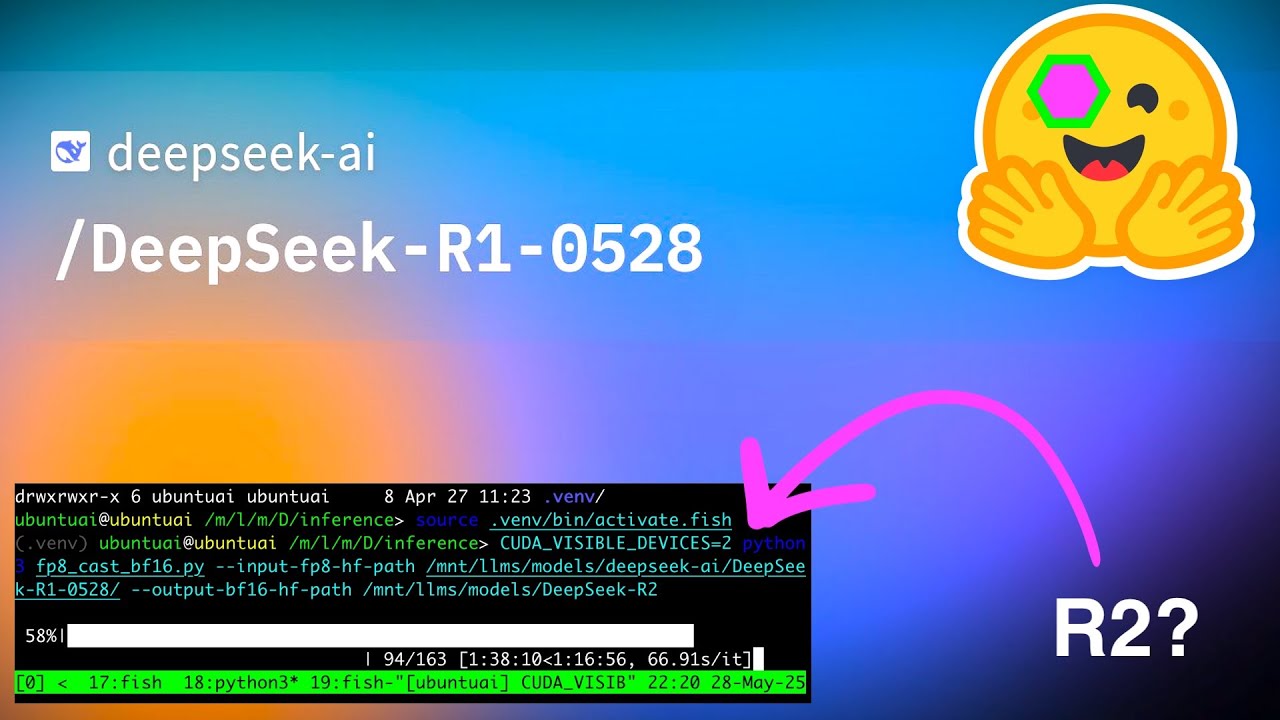

The model is available as an open-weight, MIT-licensed release on Hugging Face, making it accessible for experimentation and, potentially, for running on high-end hardware. The creator notes that running it requires substantial computational resources, such as large Nvidia GPUs or CPU-based systems with a great deal of RAM, which makes it impractical for most home setups for now. Initial analyses suggest that the model is less self-aware and may have different memory representations compared to previous versions. DeepSeek is expected to publish research papers explaining its implementation soon, which should shed more light on the technical advancements behind this update.
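To see why home setups struggle, a rough lower bound on memory is simply parameter count times bytes per parameter. The sketch below assumes roughly 671 billion total parameters (the published size of DeepSeek-R1; the video does not state a figure) and a few illustrative precisions. It counts only the weights, so real deployments need more memory for the KV cache, activations, and runtime overhead.

```python
# Rough sketch: memory needed just to store model weights.
# Assumption: ~671e9 total parameters (DeepSeek-R1's published size, not stated
# in the video). The KV cache, activations, and runtime overhead are ignored.

def weight_memory_gb(num_params: float, bits_per_param: float) -> float:
    """Return weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9

PARAMS = 671e9  # assumed total parameter count

if __name__ == "__main__":
    # Illustrative precisions; actual released or quantized formats may differ.
    for label, bits in [("BF16", 16), ("FP8", 8), ("4-bit quantized", 4)]:
        print(f"{label:>16}: ~{weight_memory_gb(PARAMS, bits):,.0f} GB")
```

Even at an aggressive 4-bit quantization this works out to hundreds of gigabytes, which is why the creator points to multi-GPU servers or CPU machines with very large amounts of RAM.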

The video highlights the infrastructure and performance optimizations that Chinese companies like Huawei have achieved, enabling them to develop competitive AI models despite limitations in hardware and access to the latest Nvidia GPUs. The creator emphasizes China’s rapid progress in AI development, driven by innovative use of available compute and networking capabilities. This context helps explain how DeepSeek and similar Chinese models are able to perform at a high level, often surpassing Western counterparts in certain areas, and hints at broader geopolitical and technological implications in the AI race.

Using the new DeepSeek model, the creator demonstrates its capabilities across a range of tasks, including creative writing, technical reasoning, and generating expressive responses. Examples include asking the model to simulate a 4chan-style discussion about a Chinese fighter jet and to analyze tariffs on goods imported from China. The model shows a surprising level of expressiveness, humor, and contextual understanding, and some of its responses are more candid and less censored than those of Western models. It handles complex prompts and produces detailed reasoning traces, making it a versatile tool for both creative and technical applications.

In conclusion, the creator reflects on the significance of this update as a sign of ongoing development in Chinese AI and of the potential for future releases such as R2. They express curiosity about how viewers are deploying DeepSeek models locally, whether on CPU or GPU, and encourage them to share their experiences. The video also promotes a new sponsor, Wispr Flow, a voice dictation tool that integrates with AI workflows. Overall, the video offers insight into DeepSeek’s latest advancements, the competitive landscape of AI models, and the possibilities for AI development both in China and globally.