The video reviews a paper proposing energy-based transformers that perform iterative inference through energy minimization, enabling scalable, system 2-like reasoning via unsupervised learning. This approach shows promising scaling benefits and improved prediction with more computation, offering a novel framework that blends energy-based models with transformers for flexible and robust learning and thinking.

The video reviews the paper “Energy-Based Transformers are Scalable Learners and Thinkers,” which proposes combining energy-based models (EBMs) with transformers to create a scalable learning paradigm. The authors ask whether models can learn System 2 thinking (slow, logical, and explicit reasoning) solely through unsupervised learning. Unlike traditional machine learning models, which resemble System 1 thinking (fast, intuitive), energy-based transformers can take multiple inference steps at test time, so spending more computation can improve a prediction. This approach is distinct from current reasoning models that rely heavily on supervised data or reinforcement learning with explicit rewards.

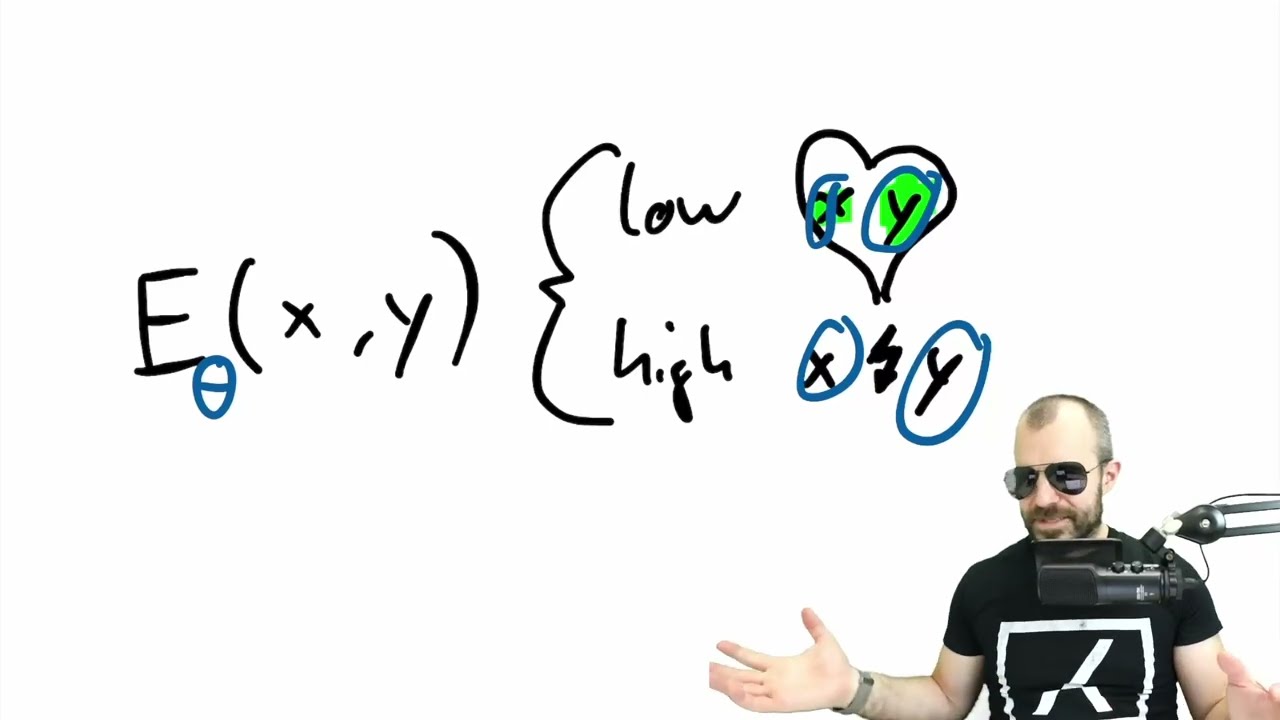

Energy-based models work by defining an energy function that scores the compatibility between inputs and candidate outputs, with lower energy indicating better fit. In the context of language modeling, the model starts with a random distribution over possible next tokens and iteratively refines this distribution by minimizing the energy through gradient descent at inference time. This iterative optimization process allows the model to dynamically allocate computation and express uncertainty, which are key facets of system 2 thinking. The energy function itself is trained to assign low energy to correct predictions and high energy to incorrect ones, using techniques like contrastive training and regularization to ensure a smooth energy landscape.
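As a rough illustration of the refinement loop described above, the following sketch runs gradient descent on a toy energy at inference time. The quadratic energy, the fixed `target` mapping, and the names `energy`, `energy_grad`, and `think` are assumptions made for this example only; in the paper the energy is a learned transformer, which is not reproduced here.

```python
import random


def target(context):
    # Hypothetical stand-in for what a trained energy function would prefer.
    return [2.0 * c for c in context]


def energy(context, y):
    # Toy energy: low when the candidate y is close to the preferred output.
    return 0.5 * sum((yi - ti) ** 2 for yi, ti in zip(y, target(context)))


def energy_grad(context, y):
    # Gradient of the toy energy with respect to the candidate y.
    return [yi - ti for yi, ti in zip(y, target(context))]


def think(context, steps, step_size=0.5, seed=0):
    """Refine a random initial prediction by descending the energy."""
    rng = random.Random(seed)
    y = [rng.gauss(0.0, 1.0) for _ in context]  # random starting guess
    trace = [energy(context, y)]                # energy after each step
    for _ in range(steps):
        grad = energy_grad(context, y)
        y = [yi - step_size * gi for yi, gi in zip(y, grad)]
        trace.append(energy(context, y))
    return y, trace


if __name__ == "__main__":
    context = [1.0, -0.5, 0.25]
    for steps in (2, 10):
        _, trace = think(context, steps)
        print(steps, "steps -> final energy", trace[-1])
```

Running `think` with more steps from the same seed ends at a lower energy, which is the sense in which extra inference computation improves the prediction. The final energy can also be read as a rough confidence signal: a value that stays high suggests the model has not found a good fit.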

Training energy-based transformers involves backpropagating through the entire inference optimization process, which requires second-order derivatives but remains computationally feasible through efficient Hessian-vector products. To improve generalization and stability, the authors introduce regularization methods such as adding noise during optimization steps, using a replay buffer, and randomizing the number and size of gradient steps. These techniques help smooth the energy landscape and make the model more robust to variations in inference computation, enabling flexible and scalable thinking at test time.
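To make the idea of backpropagating through the inference process concrete, here is a minimal sketch on a scalar toy problem. It assumes a hypothetical energy `E_w(x, y) = 0.5 * (y - w*x)**2` with a single parameter `w`, and tracks the derivative of the prediction with respect to `w` by hand across the unrolled gradient steps. The product `hess_yy * dy_dw` is the scalar analogue of a Hessian-vector product. The step count, step size, and noise ranges are illustrative choices, not values from the paper, and the replay buffer is left out.

```python
import random


def unrolled_forward(w, x, steps, alpha, noise_std, rng):
    """Run inference on E_w(x, y) = 0.5 * (y - w*x)**2 and track dy/dw."""
    y = rng.gauss(0.0, 1.0)  # random initial prediction
    dy_dw = 0.0              # the initial guess does not depend on w
    for _ in range(steps):
        grad_y = y - w * x   # dE/dy
        hess_yy = 1.0        # d2E/dy2 (constant for this toy energy)
        mixed_yw = -x        # d2E/(dy dw)
        # Differentiate the update y <- y - alpha * dE/dy with respect to w.
        dy_dw = dy_dw - alpha * (hess_yy * dy_dw + mixed_yw)
        # Noisy gradient step; the noise is independent of w.
        y = y - alpha * grad_y + rng.gauss(0.0, noise_std)
    return y, dy_dw


def train(data, epochs=200, lr=0.05, seed=0):
    """Fit w so that a few inference steps land near the true output."""
    rng = random.Random(seed)
    w = 0.0
    for _ in range(epochs):
        for x, y_true in data:
            steps = rng.randint(3, 6)     # randomized number of steps
            alpha = rng.uniform(0.5, 0.9) # randomized step size
            y_pred, dy_dw = unrolled_forward(w, x, steps, alpha, 0.01, rng)
            # Chain rule: d/dw of 0.5 * (y_pred - y_true)**2.
            w -= lr * (y_pred - y_true) * dy_dw
    return w


if __name__ == "__main__":
    data = [(x, 3.0 * x) for x in (1.0, 2.0, -1.0, 0.5)]
    print("learned w:", train(data))
```

Because inference is cut off after a few steps, the learned `w` in this toy settles near the generating value of 3 rather than exactly on it: the parameter is tuned so that the truncated optimization, not the energy minimum itself, lands on the target. In a real model the derivative bookkeeping would be handled by automatic differentiation rather than written out by hand.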

The paper also addresses engineering challenges in combining EBMs with transformers, particularly ensuring parallelizability and stability during multiple inference passes. Their experiments demonstrate promising scaling trends: energy-based transformers improve more rapidly than classic transformers as training data, batch size, and model depth increase. Although energy-based models require more computation per training step due to the iterative inference, their superior scaling efficiency suggests that at larger scales, they could outperform traditional transformers in both training and inference. Additionally, the model’s ability to improve predictions with more inference steps confirms its potential as a “thinking” model.

Overall, the reviewer finds the paper an exciting and innovative direction, blending longstanding concepts of energy-based modeling with modern transformer architectures. While the philosophical framing around system 2 thinking and reasoning is somewhat speculative and may have been reverse-engineered to fit the method, the technical contributions and experimental results are compelling. The energy-based transformer framework offers a new way to think about inference as an optimization process, with inherent uncertainty modeling and verification capabilities. The reviewer encourages further exploration and scaling of this approach, noting its potential to complement existing reasoning and reinforcement learning methods rather than replace them.
