Eli the Computer Guy explains that AI systems, particularly large language models, are complex pattern-recognition tools without true intelligence or intent, and he warns against the exaggerated fears promoted by figures like Eric Schmidt. He argues that the real risks come from human misuse and societal impacts, and he urges education and responsible development rather than sensationalism.

In this video, Eli the Computer Guy discusses the hype and misconceptions surrounding artificial intelligence (AI), particularly large language models (LLMs). He emphasizes that while LLMs and related technologies such as vector databases and AI frameworks are real and useful, the term “AI” itself is overhyped and often used as a marketing buzzword to attract massive investment. Eli explains that these systems are fundamentally neural networks: complex mathematical models inspired by the human brain, but not truly intelligent. He highlights how confused many people are about AI, especially about memory, which LLMs do not inherently possess unless developers explicitly build it in.

Eli shares his experience teaching AI concepts to students, noting that they often assume LLMs have built-in memory or intelligence, when in reality these models only process the input that fits within a limited context window. He stresses the importance of understanding the technology behind AI rather than relying on preconceived notions shaped by popular culture and media portrayals of AI as sentient or autonomous beings. He contrasts the term “AI” with more specific terms such as “LLM” to clarify what the technology actually does: pattern recognition and token correlation rather than true understanding.
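The memory point is easier to see in code. The sketch below does not reflect how any particular LLM product is built; it assumes a hypothetical `stateless_model` function standing in for a real model, counts whitespace-separated words as "tokens" instead of using a real tokenizer, and wraps everything in a made-up `ChatSession` class. The model only ever sees the list of messages passed to it. Any apparent "memory" comes from the wrapper resending earlier messages, and whatever no longer fits in the window is simply dropped.

```python
from dataclasses import dataclass, field


def count_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer (assumption): one token per word.
    return len(text.split())


def stateless_model(context: list[str]) -> str:
    # Hypothetical toy "model": it keeps nothing between calls and can only
    # use the messages it receives in this one call.
    latest = context[-1].lower() if context else ""
    if "what is my name" not in latest:
        return "OK."
    for message in context:
        words = message.lower().split()
        # Look for the pattern "my name is <name>" anywhere in the context.
        for i in range(len(words) - 3):
            if words[i:i + 3] == ["my", "name", "is"]:
                return f"Your name is {words[i + 3]}."
    return "I don't know your name."


@dataclass
class ChatSession:
    # Hypothetical wrapper: the "memory" lives here, not in the model.
    window: int = 12  # context window size in toy tokens (assumed value)
    history: list[str] = field(default_factory=list)

    def build_context(self) -> list[str]:
        # Walk backwards from the newest message, keeping what fits.
        kept: list[str] = []
        used = 0
        for message in reversed(self.history):
            cost = count_tokens(message)
            if used + cost > self.window:
                break  # older messages fall outside the window
            kept.append(message)
            used += cost
        return list(reversed(kept))

    def send(self, message: str) -> str:
        self.history.append(message)
        reply = stateless_model(self.build_context())
        self.history.append(reply)
        return reply


if __name__ == "__main__":
    roomy = ChatSession(window=100)
    roomy.send("my name is eli")
    print(roomy.send("what is my name"))

    cramped = ChatSession(window=6)
    cramped.send("my name is eli")
    print(cramped.send("what is my name"))
```

With a generous window the earlier introduction is resent, so the model can answer. With a six-token window the introduction falls out of the context, and the same model has no way to recover it, which is the gap between what students expect and what the model actually receives.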

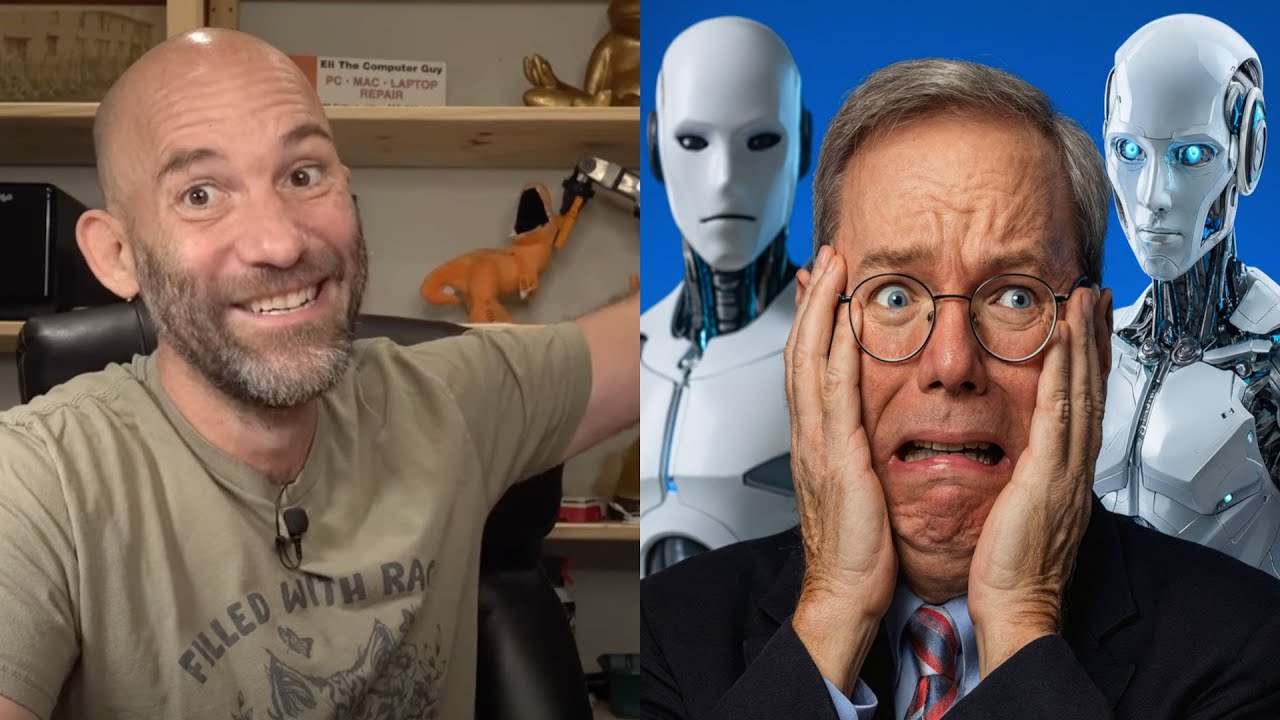

The video also critiques former Google CEO Eric Schmidt’s warnings about AI’s dangers, particularly his claim that AI can learn how to kill people. Eli argues that such statements are exaggerated and misleading, and he compares AI to a word processor or web browser: tools that can be used for good or bad purposes depending on the user. He points out that AI models have no agency or intent of their own; they simply generate outputs based on their training data. The real danger lies in how humans use or misuse the technology, not in the technology itself. Eli also discusses the importance of guardrails in AI systems to prevent harmful outputs, but he rejects the idea that AI is inherently dangerous in the way nuclear weapons are.

Eli further explores the broader societal implications of technology, including AI, social media, and pornography, suggesting that some of the most significant dangers come from human behavior and cultural shifts rather than the technology alone. He raises concerns about younger generations forming relationships with AI entities and the potential impact on human intimacy and social dynamics. He also touches on the automation of dangerous systems, such as autonomous weapons, which combine AI with physical hardware, creating real risks that are separate from the AI models themselves.

In conclusion, Eli urges viewers to approach AI with a clear understanding of what it is and isn’t, and warns against sensationalism and fear-mongering by tech elites like Eric Schmidt. He encourages education and hands-on learning, promoting his Silicon Dojo initiative as a way to give people practical knowledge about technology. Eli’s overall message is that AI is a tool, powerful but not magical, and that the real challenges lie in how society chooses to develop, regulate, and use it responsibly.