The video explains the concept of flow matching for generative modeling, contrasting it with traditional diffusion models by focusing on learning to morph distributions directly. Flow matching involves defining probability density paths and time-dependent vector fields to guide the movement of points from a source distribution to a target distribution, with the paper exploring how to construct these paths and fields through conditional flows and neural network training.

The video discusses flow matching for generative modeling and how it differs from traditional diffusion models. In image generation, diffusion models have been the standard approach: they generate an image over many steps by iteratively removing noise from an initial noise sample until a clean image emerges, reversing a fixed noising process that is defined ahead of time. Flow matching takes a more general view. Instead of fixing a noising process, the goal is to learn directly how to morph the source distribution into the target distribution.

The video explains that the key idea in flow matching is to learn the flow from one distribution to another by aggregating information from individual samples. This requires two objects: a probability density path, which describes how the distribution changes over time, and a time-dependent vector field, which tells each point where to move so that the source distribution is carried onto the target distribution. The video highlights conditional flows: learning how to morph one sample into another is tractable, and these per-sample flows together characterize the entire flow between the two distributions.
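To make the role of the vector field concrete, here is a minimal sketch of how points are moved along a flow: each point follows the ODE dx/dt = v(t, x) from t = 0 to t = 1, approximated here with forward Euler steps. The function names, the one-dimensional setting, and the particular field `example_field` (which carries a standard normal to a normal with mean 3 and standard deviation 0.5) are illustrative assumptions, not something taken from the video or the paper.

```python
import random

def integrate_flow(vector_field, points, n_steps=100):
    """Move each point from t=0 to t=1 along dx/dt = vector_field(t, x) using forward Euler."""
    dt = 1.0 / n_steps
    xs = list(points)
    for k in range(n_steps):
        t = k * dt
        xs = [x + dt * vector_field(t, x) for x in xs]
    return xs

# Illustrative target (assumption): normal with mean 3 and standard deviation 0.5.
TARGET_MEAN, TARGET_STD = 3.0, 0.5

def example_field(t, x):
    """Time-dependent field whose flow maps x0 to TARGET_STD * x0 + TARGET_MEAN at t=1."""
    sigma_t = 1.0 - (1.0 - TARGET_STD) * t   # standard deviation shrinks linearly in t
    mu_t = TARGET_MEAN * t                   # mean moves linearly in t
    return -(1.0 - TARGET_STD) / sigma_t * (x - mu_t) + TARGET_MEAN

rng = random.Random(0)
source_samples = [rng.gauss(0.0, 1.0) for _ in range(5000)]   # source: standard normal
moved_samples = integrate_flow(example_field, source_samples)  # approximately the target
```

In flow matching the field is not written by hand as it is here; a neural network is trained to play the role of `vector_field`, and sampling amounts to running an integrator like this one on noise.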

The paper shows how to construct probability density paths and vector fields conditioned on individual samples, and then how to aggregate them to define the overall flow. Marginalizing over the conditional probability paths and vector fields yields a total probability path and a total vector field that move the entire source distribution to the target distribution. This motivates conditional flow matching, in which a neural network is trained to regress the conditional vector fields of individual data points rather than the intractable total vector field.
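The marginalization described above can be written out as follows. The notation is an assumption that follows the convention of the Flow Matching paper rather than anything stated in this summary: $q$ is the data distribution, $x_1$ a data sample, $p_t(x \mid x_1)$ and $u_t(x \mid x_1)$ the conditional probability path and vector field, and $v_t(x; \theta)$ the neural network.

$$
p_t(x) = \int p_t(x \mid x_1)\, q(x_1)\, dx_1,
\qquad
u_t(x) = \int u_t(x \mid x_1)\, \frac{p_t(x \mid x_1)\, q(x_1)}{p_t(x)}\, dx_1
$$

$$
\mathcal{L}_{\mathrm{CFM}}(\theta) = \mathbb{E}_{t,\; q(x_1),\; p_t(x \mid x_1)} \left\| v_t(x; \theta) - u_t(x \mid x_1) \right\|^2
$$

The conditional objective only needs samples from $p_t(x \mid x_1)$ and the closed-form $u_t(x \mid x_1)$, and the paper shows that it has the same gradients with respect to $\theta$ as regressing onto the total vector field $u_t(x)$.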

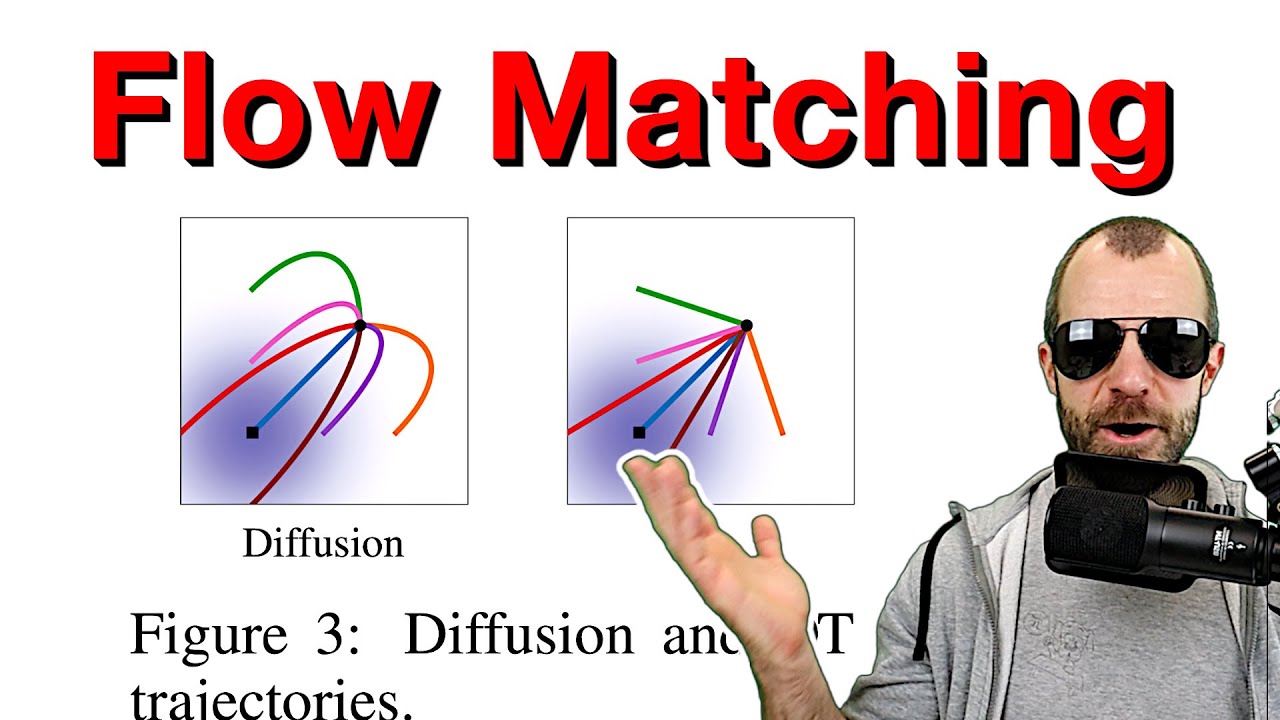

The paper then studies Gaussian conditional probability paths and shows how different choices of the mean and standard deviation functions produce different flows. Some choices recover the paths used by diffusion models. Choosing a mean and standard deviation that change linearly in time instead gives a straight-line path from each source sample to its target sample, which corresponds to the optimal transport displacement between the two Gaussians. The video contrasts the two kinds of trajectory: diffusion trajectories curve, whereas optimal transport trajectories are straight, which is the basis for the claim that the optimal transport choice gives more efficient and robust sampling.
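The straight-line choice can be sketched in a few lines. The code below assumes the optimal transport parameterization from the Flow Matching paper, with mean $t\,x_1$ and standard deviation $1 - (1 - \sigma_{\min})\,t$; the function names, the value of `SIGMA_MIN`, the one-dimensional setting, and the `model` callable are hypothetical and only serve the illustration.

```python
import random

SIGMA_MIN = 1e-3  # hypothetical small width of the path around each data point at t=1

def ot_conditional_sample(x0, x1, t, sigma_min=SIGMA_MIN):
    """Return (x_t, target) for a noise sample x0 and a data sample x1 at time t."""
    sigma_t = 1.0 - (1.0 - sigma_min) * t              # standard deviation, linear in t
    x_t = sigma_t * x0 + t * x1                        # point on the straight-line path
    target = (x1 - (1.0 - sigma_min) * x_t) / sigma_t  # conditional vector field at x_t
    return x_t, target

def cfm_loss(model, data_samples, rng):
    """Monte Carlo estimate of the conditional flow matching loss for model(t, x)."""
    total = 0.0
    for x1 in data_samples:
        t = rng.random()            # t drawn uniformly from [0, 1)
        x0 = rng.gauss(0.0, 1.0)    # noise sample from the standard normal source
        x_t, target = ot_conditional_sample(x0, x1, t)
        total += (model(t, x_t) - target) ** 2
    return total / len(data_samples)
```

Along this path the regression target simplifies to `x1 - (1 - sigma_min) * x0`, which does not depend on `t`: each point moves in a fixed direction at constant speed, which is what makes the trajectories straight.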