Google Research’s MLE Star is a machine learning engineering agent presented as a step toward recursive self-improvement. It achieved a 63% medal rate on Kaggle competitions, including 36% gold medals, by iteratively refining targeted code components with large language models such as Gemini 2.5 Pro. The result suggests AI systems are getting closer to conducting machine learning research autonomously, with significant implications for AI-driven discovery across many fields.

Google Research has recently introduced MLE Star, a machine learning engineering agent that marks a notable advance toward recursive self-improvement in AI. The development matters because it suggests AI systems are approaching the ability to improve their own design and performance autonomously, potentially working more efficiently than human researchers. MLE Star was tested on Kaggle, a prominent machine learning community platform where millions of data scientists and researchers compete by building models for real-world challenges. It earned medals in 63% of the Kaggle competitions it entered, with 36% being gold medals, outperforming previous AI agents.

Kaggle serves as a vital hub for machine learning research and application, hosting competitions that range from predicting house prices to deciphering ancient texts. One notable example is the Vesuvius Challenge, which uses machine learning to read carbonized scrolls from a Roman villa buried by the eruption of Mount Vesuvius nearly 2,000 years ago. These competitions attract top talent worldwide and push the boundaries of what machine learning can achieve. The number of highly skilled human participants is limited, however, so AI agents like MLE Star that can compete autonomously and place well could significantly change how the field works.

MLE Star distinguishes itself from previous AI agents through a more structured, iterative approach to problem-solving. Earlier agents often made wholesale changes to a codebase, which led to bloated and inefficient solutions. MLE Star instead extracts and refines targeted code blocks. It begins by searching the web for effective models related to the task, generates an initial solution, and then iteratively improves the specific components that have the greatest impact on performance. This methodical approach addresses the “shiny object syndrome” seen in other AI agents, which tend to shift focus prematurely instead of exploring a promising avenue in depth.
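The refinement loop described above can be illustrated with a toy sketch. This is not MLE Star’s implementation: the component names, the score table, and the helpers `toy_score`, `most_impactful`, and `refine` are all hypothetical, and measuring impact by removing one component at a time is an assumption made for illustration. The point is only that each round changes a single component, the one whose removal hurts the score most, rather than rewriting the whole pipeline.

```python
from typing import Callable, Dict, List, Optional, Set

Pipeline = Dict[str, Optional[str]]  # component name -> chosen variant

# Hypothetical validation-score contribution of each variant (made-up numbers).
TOY_SCORES = {
    "features": {"raw": 0.10, "scaled": 0.14, "engineered": 0.30},
    "model": {"linear": 0.30, "gbdt": 0.45},
    "ensemble": {"none": 0.00, "average": 0.03},
}

def toy_score(pipeline: Pipeline) -> float:
    """Stand-in for training and validating a pipeline."""
    return sum(TOY_SCORES[c][v] for c, v in pipeline.items() if v is not None)

def most_impactful(pipeline: Pipeline,
                   score: Callable[[Pipeline], float],
                   skip: Set[str]) -> str:
    """Return the not-yet-refined component whose removal lowers the score most."""
    full = score(pipeline)
    impact = {
        name: full - score({**pipeline, name: None})
        for name in pipeline if name not in skip
    }
    return max(impact, key=lambda name: impact[name])

def refine(pipeline: Pipeline,
           candidates: Dict[str, List[str]],
           score: Callable[[Pipeline], float],
           rounds: int) -> Pipeline:
    """Each round, try variants for one targeted component and keep the best."""
    best = dict(pipeline)
    refined: Set[str] = set()
    for _ in range(min(rounds, len(best))):
        target = most_impactful(best, score, refined)
        for variant in candidates[target]:
            trial = {**best, target: variant}
            if score(trial) > score(best):
                best = trial
        refined.add(target)
    return best

INITIAL: Pipeline = {"features": "raw", "model": "linear", "ensemble": "none"}
CANDIDATES = {name: list(variants) for name, variants in TOY_SCORES.items()}

if __name__ == "__main__":
    print(refine(INITIAL, CANDIDATES, toy_score, rounds=3))
```

In a real agent the inner loop would be a set of LLM-proposed rewrites of one code block, and `toy_score` would be an actual training and validation run, which is why limiting each round to a single component keeps the search focused.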

MLE Star is designed as a flexible scaffolding framework that can integrate different large language models (LLMs), so it can benefit from ongoing improvements in the underlying models. For example, when paired with Google’s Gemini 2.5 Pro model, MLE Star achieved 100% valid submissions in Kaggle competitions, a rate previous agents did not match. Because of this modularity, MLE Star’s performance should improve as better LLMs are plugged in, without changes to the framework itself. That is the sense in which it points toward recursive self-improvement: an AI system whose capabilities grow as the models it builds on advance.
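The modularity described above can be sketched as an agent that depends only on a small interface rather than on a specific model. Everything here is hypothetical: `LLMBackend`, `EchoBackend`, `Agent`, and the prompt text are illustrative names, not MLE Star’s API, and the stand-in backend simply echoes its prompt instead of calling a real model.

```python
from dataclasses import dataclass
from typing import Protocol

class LLMBackend(Protocol):
    """Hypothetical interface: anything that turns a prompt into text."""
    def complete(self, prompt: str) -> str: ...

@dataclass
class EchoBackend:
    """Stand-in backend for illustration; a real one would call a model API."""
    name: str

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"

@dataclass
class Agent:
    """Scaffolding that stays the same regardless of which backend is plugged in."""
    backend: LLMBackend

    def propose_refinement(self, code_block: str) -> str:
        prompt = f"Improve this code block:\n{code_block}"
        return self.backend.complete(prompt)

if __name__ == "__main__":
    # Swapping the model means constructing the agent with a different backend.
    for backend in (EchoBackend("model-a"), EchoBackend("model-b")):
        print(Agent(backend).propose_refinement("x = 1"))
```

Under this kind of design, upgrading to a stronger model is a one-line change at construction time, while the search, refinement, and submission logic remain untouched.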

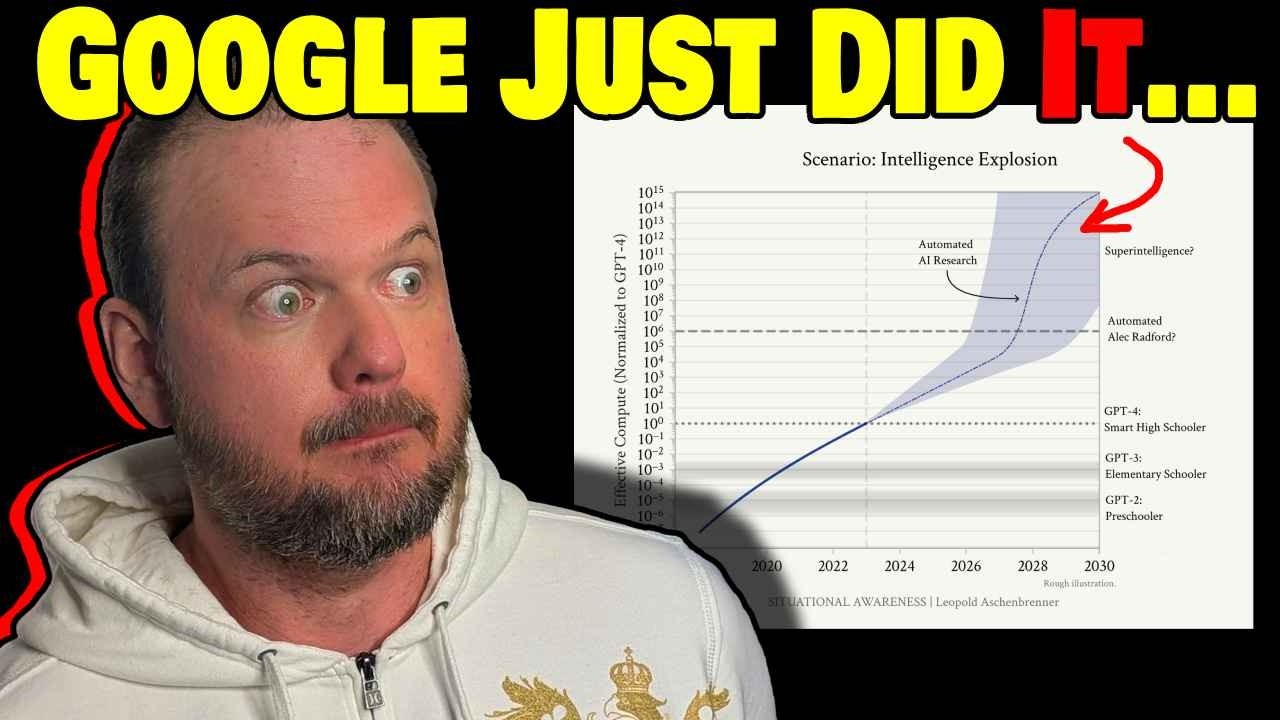

The implications of MLE Star’s success are far-reaching. It points to a future in which AI can conduct machine learning research autonomously, develop bespoke models for diverse applications, and accelerate innovation in fields such as archaeology, medicine, and business analytics. This progress is exciting, but it also raises important questions about a possible intelligence explosion and the broader impact of AI surpassing human expertise in research and development. As AI agents become more capable and widespread, society will need to weigh the benefits and risks of this technology carefully.