The video explains how neural networks learn through gradient descent, where weights and biases are adjusted to minimize a cost function and improve accuracy on a training dataset. It highlights the importance of the cost function in guiding learning, the challenge of hidden layers not learning expected features, and ongoing research into optimizing network architectures for better performance.

The video explained the structure of a neural network, focusing on the classic example of handwritten digit recognition. The network consists of layers of neurons: activations in the input layer are set by pixel values, and activations in each subsequent layer are computed from weighted sums of the previous layer’s activations. The goal is for the network to learn from a training dataset of handwritten digit images and improve its performance on it. Gradient descent was introduced as the underlying mechanism for how neural networks learn: the weights and biases are adjusted to minimize a cost function that measures the network’s performance on the training data.
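As a rough illustration of the layered structure described above, here is a minimal sketch of a forward pass in plain Python. The layer sizes (784, 16, 16, 10), the sigmoid activation, and the random initialization are assumptions made for this example; the summary itself does not specify them.

```python
import math
import random

def sigmoid(z):
    """Squash a weighted sum into (0, 1); written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def layer_forward(weights, biases, activations):
    """Each neuron's activation: sigmoid(weighted sum of previous activations + bias)."""
    return [
        sigmoid(sum(w * a for w, a in zip(row, activations)) + b)
        for row, b in zip(weights, biases)
    ]

def forward(network, pixels):
    """Feed the pixel values through every layer in turn."""
    activations = pixels
    for weights, biases in network:
        activations = layer_forward(weights, biases, activations)
    return activations

def random_network(sizes, seed=0):
    """Build untrained (random) weights and biases for the given layer sizes."""
    rng = random.Random(seed)
    network = []
    for n_in, n_out in zip(sizes, sizes[1:]):
        weights = [[rng.gauss(0, 1) for _ in range(n_in)] for _ in range(n_out)]
        biases = [rng.gauss(0, 1) for _ in range(n_out)]
        network.append((weights, biases))
    return network

# Assumed sizes: 784 input pixels (28x28), two hidden layers of 16, 10 digit outputs.
network = random_network([784, 16, 16, 10])
pixels = [0.0] * 784  # an all-black image as a stand-in input
output = forward(network, pixels)
```

Because the weights here are random, `output` is meaningless as a classification; learning consists of adjusting the weights and biases so that the output matches the correct digit.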

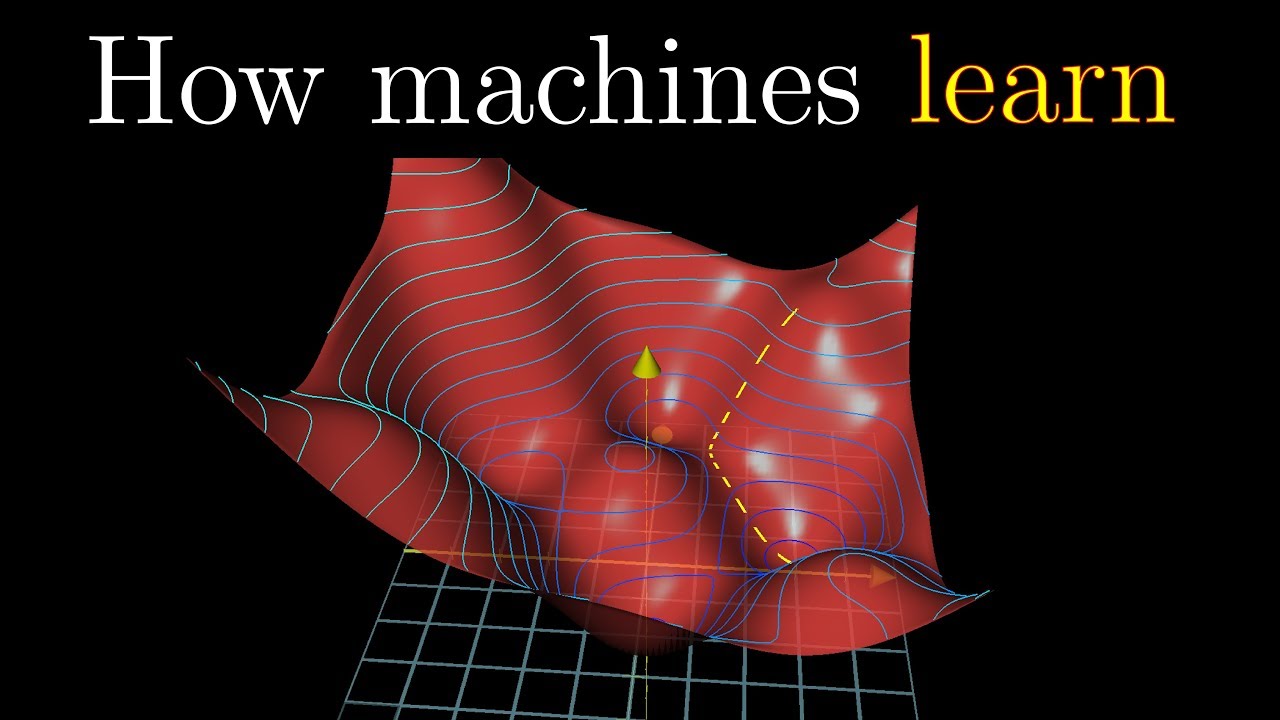

The video delved into the process of gradient descent, which involves computing the gradient of the cost function with respect to the weights and biases of the network. The gradient points in the direction of steepest increase, so each step moves the parameters in the opposite direction, the one that decreases the cost most rapidly. Repeating these small adjustments moves the network towards a local minimum of the cost function, which tends to improve its classification accuracy.
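The update rule described above can be sketched on a toy problem. The two-parameter cost function, the learning rate, and the step count below are hypothetical choices for illustration; a real network's cost depends on thousands of weights and biases, and its gradient is computed differently.

```python
def cost(params):
    """Toy cost with a single minimum at (3, -1)."""
    x, y = params
    return (x - 3.0) ** 2 + (y + 1.0) ** 2

def gradient(params):
    """Analytic gradient of the toy cost: the direction of steepest increase."""
    x, y = params
    return [2.0 * (x - 3.0), 2.0 * (y + 1.0)]

def gradient_descent(params, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient to reduce the cost."""
    for _ in range(steps):
        grad = gradient(params)
        params = [p - learning_rate * g for p, g in zip(params, grad)]
    return params

start = [0.0, 0.0]
minimum = gradient_descent(start)
```

After the loop, `minimum` lies very close to (3, -1). This toy cost has only one minimum; a network's cost function generally has many local minima, and which one gradient descent reaches depends on the starting point.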

The video emphasized the importance of the cost function in guiding the network’s learning process: training aims to minimize the average cost over the training dataset. The hope is that lowering this cost through gradient descent also improves the network’s ability to correctly classify new, unseen images. The video highlighted that the cost function needs to vary smoothly with the weights and biases so that small gradient descent steps can find a local minimum, which is why artificial neurons have continuously ranging activations rather than binary ones.
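To make the idea of an average cost concrete, here is a small sketch. It assumes a squared-error cost against a one-hot target (1.0 for the correct digit, 0.0 elsewhere); the summary does not state the exact form of the cost, so treat that choice and the helper names as illustrative.

```python
def example_cost(output, label, num_classes=10):
    """Sum of squared differences between the output and a one-hot target."""
    target = [1.0 if i == label else 0.0 for i in range(num_classes)]
    return sum((o - t) ** 2 for o, t in zip(output, target))

def average_cost(outputs, labels):
    """Mean of the per-example costs over a whole dataset."""
    costs = [example_cost(o, l) for o, l in zip(outputs, labels)]
    return sum(costs) / len(costs)

# Two made-up network outputs for images whose true label is 3.
confident_right = [0.0] * 10
confident_right[3] = 1.0   # all activation on the correct digit
undecided = [0.1] * 10     # activation spread evenly over all digits

good = example_cost(confident_right, 3)
bad = example_cost(undecided, 3)
mean = average_cost([confident_right, undecided], [3, 3])
```

The confident, correct output has a cost of zero, while the undecided one is penalized. Because the cost changes gradually as the activations change, small parameter adjustments produce small, measurable changes in cost, which is what gradient descent relies on.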

Although the network achieved high classification accuracy on the test dataset, the video revealed that its hidden layers did not necessarily learn the expected features, such as edges and patterns. The network could recognize digits successfully, yet it showed no understanding of how to draw them and did not detect specific features. This discrepancy between expected and actual learning outcomes emphasized the complexity of neural network training and the need for further exploration into optimizing network architectures for better performance.

The video concluded by discussing recent research on modern image recognition networks, which highlighted challenges in understanding whether networks are truly learning meaningful structures from data or simply memorizing patterns. By exploring the optimization landscape and local minima that networks tend to learn, researchers aim to uncover insights into the learning processes of deep neural networks. The video also expressed gratitude to supporters on Patreon and Amplify Partners for their contributions to the video series.