The video discusses a research paper titled “Hallucination-Free? Assessing the Reliability of Leading AI Legal Research Tools,” which evaluates the reliability of AI-powered legal research tools in preventing inaccuracies or hallucinations. The paper reveals that while these tools claim to be “hallucination-free,” they still exhibit errors, highlighting the importance of users verifying information and understanding the limitations of AI tools in legal research.

The presenter, who has experience in the legal tech industry, walks through the paper and offers insights on the challenges of applying AI in the legal field. The paper examines AI-powered legal research tools and evaluates their reliability, particularly how well they avoid hallucinations, the tendency of AI models to generate incorrect or misleading information.

The paper focuses on legal research tools that use AI, specifically generative AI like GPT models, to assist in answering legal questions based on publicly available data. These tools aim to provide users with accurate and relevant information by leveraging AI capabilities. However, the paper reveals that even though these tools claim to be “hallucination-free,” they still make significant errors, which can be problematic in the legal field where accuracy is crucial.

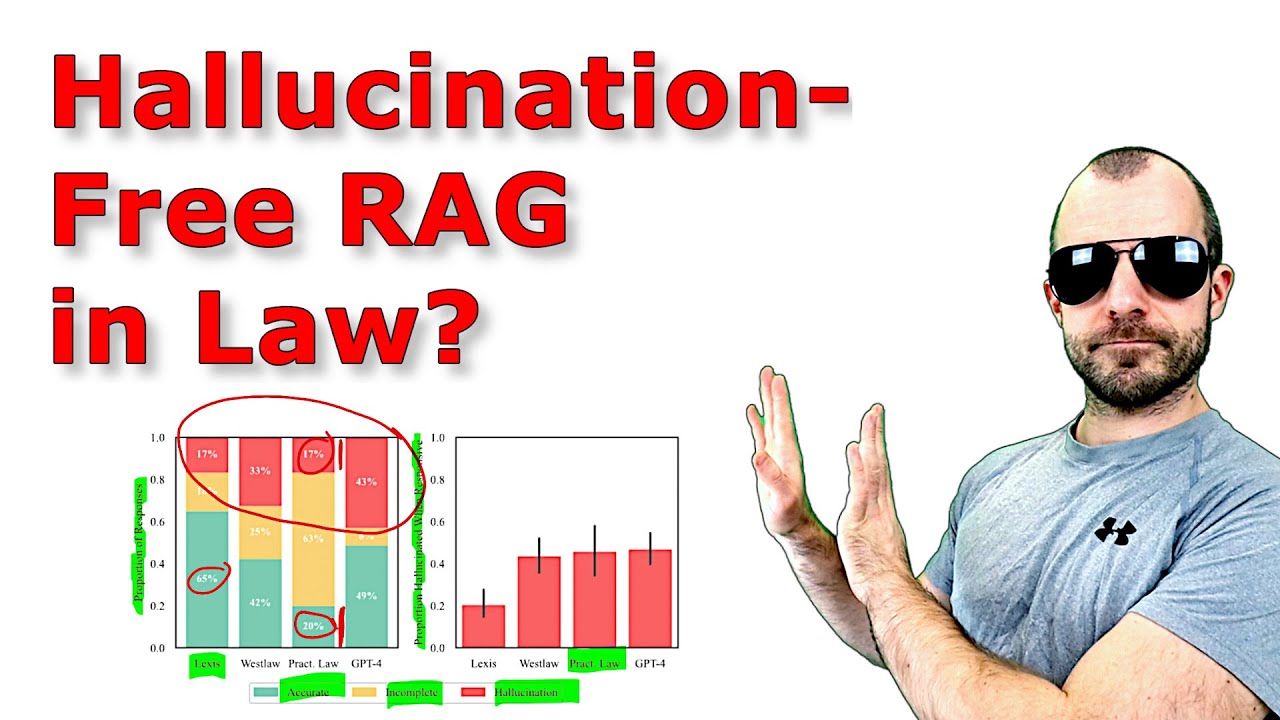

The researchers assess the accuracy of these AI systems, particularly their rate of hallucinations, by testing them on a dataset of complex legal questions. They describe retrieval-augmented generation (RAG), a technique that supplies an AI model with relevant reference documents at query time so that its answer can draw on them. RAG aims to reduce hallucinations by giving the model access to accurate and reliable information while it generates a response.
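To make the RAG idea concrete, here is a minimal sketch of the retrieve-then-prompt flow. Everything in it is an assumption for illustration: the three-sentence corpus, the word-overlap scoring, and the names `DOCUMENTS`, `retrieve`, and `build_prompt` are hypothetical and do not come from the paper or from any of the tools it evaluates. A production system would search a large legal database and send the resulting prompt to a language model.

```python
import re
from collections import Counter

# Hypothetical mini-corpus; a real tool would search a legal database.
DOCUMENTS = {
    "doc-a": "A contract requires offer, acceptance, and consideration.",
    "doc-b": "The statute of limitations sets a deadline for filing a claim.",
    "doc-c": "Negligence requires duty, breach, causation, and damages.",
}


def tokenize(text):
    """Lowercase the text and split it into alphabetic words."""
    return re.findall(r"[a-z]+", text.lower())


def retrieve(question, documents, k=1):
    """Return up to k document ids ranked by shared words (toy retriever)."""
    question_words = Counter(tokenize(question))
    scored = []
    for doc_id, text in documents.items():
        # Multiset intersection counts the words both texts have in common.
        overlap = sum((question_words & Counter(tokenize(text))).values())
        scored.append((overlap, doc_id))
    # Highest overlap first; ties broken by id for a stable order.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for overlap, doc_id in scored[:k] if overlap > 0]


def build_prompt(question, documents, k=1):
    """Assemble the prompt a model would receive: sources first, then the question."""
    ids = retrieve(question, documents, k)
    context = "\n".join(f"[{doc_id}] {documents[doc_id]}" for doc_id in ids)
    return (
        "Answer using only the sources below and cite them by id.\n\n"
        f"Sources:\n{context}\n\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    print(build_prompt("What does negligence require?", DOCUMENTS))
```

The sketch also hints at why RAG does not guarantee correct answers: if the retriever surfaces the wrong source, or the model misreads the right one, the response can still be wrong while appearing well cited.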

The paper assesses each response along two dimensions, correctness and groundedness: correctness refers to the factual accuracy of the response, and groundedness to whether the references it cites are valid and actually support it. The results of the evaluation show that while some AI legal research tools perform well in terms of accuracy and completeness, they still exhibit a significant rate of hallucinations. The paper highlights the importance of users verifying key propositions and citations to ensure the reliability of AI-generated responses in legal research.
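The two dimensions can be combined into a single hallucination rate. The sketch below is an illustration only: it assumes a response is flagged as a hallucination when it fails either check, and it reduces each dimension to a yes/no label, which may be coarser than the paper's actual rubric. The `GradedResponse` type, both function names, and the sample labels are hypothetical and are not results from the paper.

```python
from dataclasses import dataclass


@dataclass
class GradedResponse:
    """One human-graded answer (hypothetical structure)."""
    correct: bool   # is the answer factually accurate?
    grounded: bool  # do the cited sources actually support it?


def is_hallucination(response):
    """Assumption: failing either check counts as a hallucination."""
    return (not response.correct) or (not response.grounded)


def hallucination_rate(responses):
    """Fraction of graded responses flagged as hallucinations."""
    if not responses:
        return 0.0
    flagged = sum(is_hallucination(r) for r in responses)
    return flagged / len(responses)


if __name__ == "__main__":
    # Made-up labels for illustration, not data from the paper.
    graded = [
        GradedResponse(correct=True, grounded=True),
        GradedResponse(correct=True, grounded=False),
        GradedResponse(correct=False, grounded=True),
        GradedResponse(correct=True, grounded=True),
    ]
    print(hallucination_rate(graded))
```

Note that the second sample response is factually right but cites a source that does not support it. Under the assumption above it still counts as a hallucination, which is why checking citations matters as much as checking the answer itself.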

Overall, the video discusses the challenges and limitations of AI-powered legal research tools, emphasizing that users need to critically evaluate the outputs and verify the information provided. The presenter points out that combining human expertise with AI technology is crucial for handling the complexities of legal research. Understanding what these tools can and cannot do helps legal professionals make informed decisions and produce more reliable research.