The video discusses the potential plateau in AI development, highlighting that while advancements like ChatGPT and Claude have been significant, the rate of improvement is slowing due to limitations in hardware performance and the diminishing returns of traditional large language models (LLMs). The speaker suggests that the future of AI may rely on exploring alternative architectures and hybrid models, urging the industry to recalibrate expectations and focus on innovative approaches to problem-solving.

In the video, the speaker discusses the idea that artificial intelligence (AI) may be approaching a plateau in its development, despite the rapid advancements seen in recent models like ChatGPT and Claude. They draw parallels to Moore’s Law, which historically predicted that the number of transistors on a microchip would double approximately every two years, leading to significant performance improvements. However, the speaker notes that this trend has slowed due to physical limitations in chip manufacturing, with companies like Intel and AMD struggling to achieve the same level of performance gains as in the past. This stagnation in hardware performance raises concerns about the future of AI development.
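To make the doubling claim concrete, here is a minimal sketch of the idealized Moore's Law curve, assuming a fixed two-year doubling period. The function name `projected_transistors` and the starting count of one billion transistors are hypothetical, chosen only for illustration and not taken from the video.

```python
def projected_transistors(initial_count: float, years: float,
                          doubling_period_years: float = 2.0) -> float:
    """Project a transistor count under idealized Moore's Law doubling.

    Assumes the count doubles once every `doubling_period_years`,
    which is the historical rule of thumb, not a physical guarantee.
    """
    return initial_count * 2 ** (years / doubling_period_years)


if __name__ == "__main__":
    # Hypothetical starting point, used only to show the shape of the curve.
    start = 1e9
    for years in (2, 4, 10, 20):
        count = projected_transistors(start, years)
        print(f"after {years:>2} years: {count:.3g} transistors")
```

Under this idealized rule, ten years means five doublings, or a 32-fold increase. The slowdown the speaker describes amounts to real chips falling behind a curve like this one.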

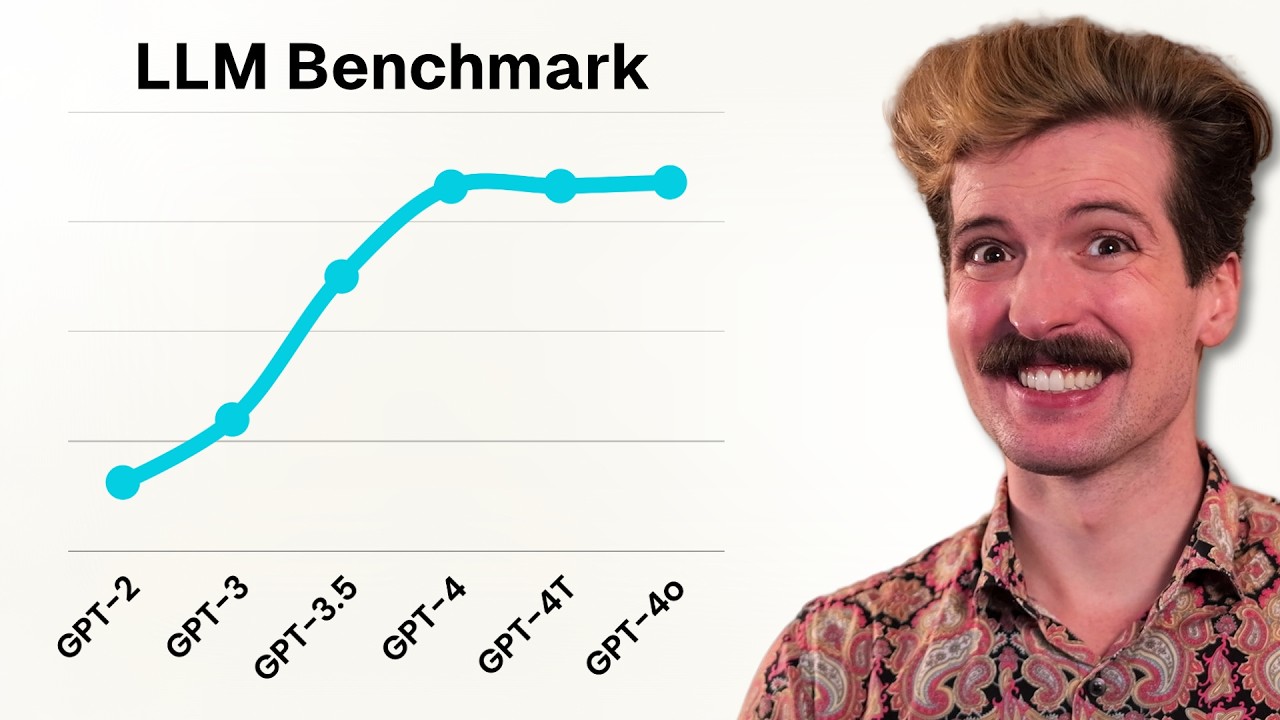

The speaker highlights that while AI models have improved, the rate of these improvements is diminishing. They reference a tweet from Yann LeCun, a prominent figure in AI research, suggesting that students should focus on new architectures rather than traditional large language models (LLMs), indicating a shift in the field. The speaker emphasizes that the advancements in AI models are becoming less significant, with newer iterations requiring more resources and effort for smaller gains. This trend suggests that the AI community may be reaching a theoretical ceiling in model performance.

The discussion also touches on the importance of exploring alternative architectures for AI, such as analog AI chips, which could potentially offer better performance than traditional GPUs. The speaker argues that the future of AI may lie in hybrid models that combine handcrafted code with AI-generated solutions, similar to how modern processors utilize different cores for specific tasks. This approach could lead to more efficient and effective AI systems, as opposed to relying solely on LLMs, which are hitting their limitations.

Additionally, the speaker critiques the current hype surrounding AI, noting that while the technology is gaining popularity, the actual performance improvements are not keeping pace with expectations. They reference Gartner’s hype cycle for AI, which illustrates the cycle of excitement, disillusionment, and eventual realistic expectations. The speaker warns against assuming that AI models will continue to improve exponentially, suggesting that the industry may need to recalibrate its expectations and focus on practical applications of AI technology.

In conclusion, the speaker posits that the AI field is at a crossroads, where continued progress may require a departure from traditional LLMs and a shift towards innovative architectures and methodologies. They argue that the future of AI will depend on finding new ways to leverage existing technologies and developing novel approaches to problem-solving. The video ends with an invitation for viewers to share their thoughts on the topic, emphasizing the importance of dialogue in navigating the evolving landscape of AI.

We’ve seen insane advancements from OpenAI, ChatGPT, Anthropic, and basically every other LLM provider. But it seems like those advancements have slowed. Is this the “Moore’s Law” of LLMs breaking down? Can we blame NVIDIA? Let’s talk about it.
