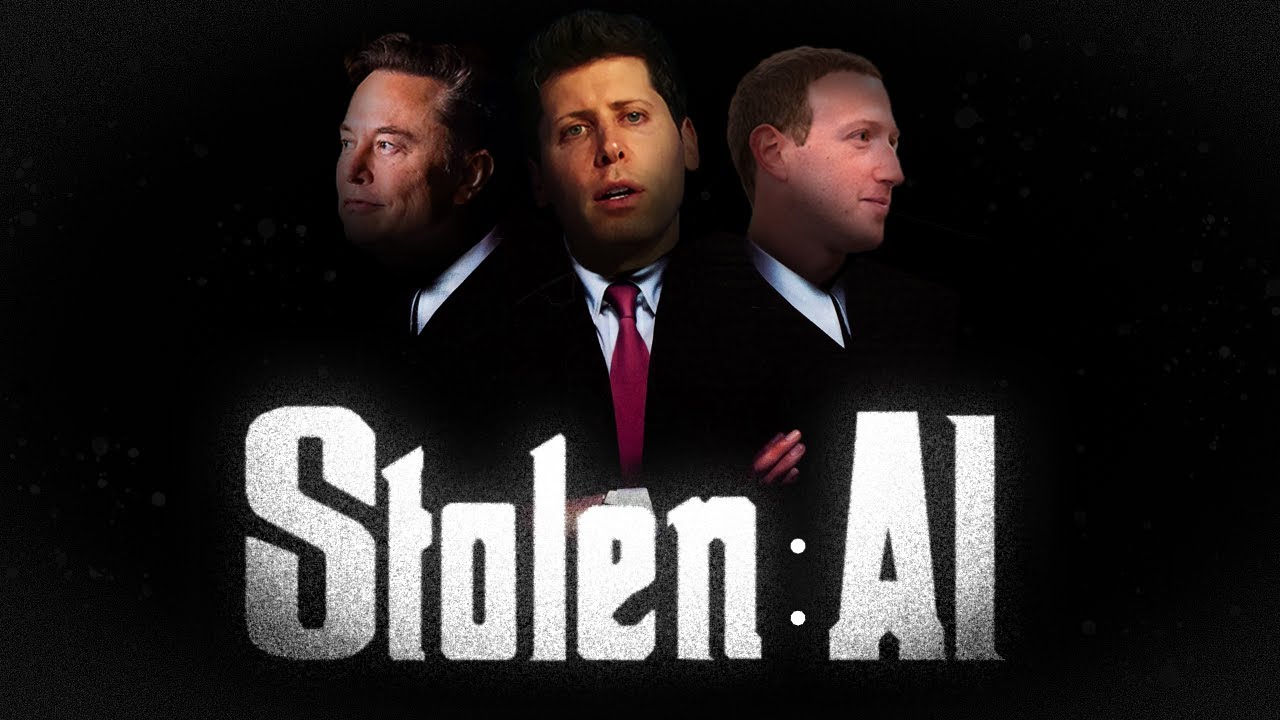

The video “How AI was Stolen” examines the ethical implications of AI development, focusing on the unauthorized use of copyrighted materials for training AI models and the exploitative labor practices involved in curating data. It calls for greater transparency, accountability, and fair compensation for creators, urging society to reflect on the impact of AI on human identity and creativity.

The video “How AI was Stolen” delves into the history of artificial intelligence (AI) and the ethical implications of its development, focusing on how training data has been sourced and used. It begins with the longstanding debate over what constitutes intelligence, drawing on the Turing Test and the ideas of early AI pioneers such as Alan Turing and John McCarthy. Symbolic approaches to AI struggled with the complexity of real-world knowledge, leading to “AI winters” in which progress stalled. Newer methods, such as machine learning and neural networks, then emerged, allowing AI to learn from vast datasets rather than relying solely on predefined rules.

As the narrative progresses, the video highlights the shift towards a data-driven AI landscape, particularly with the advent of large-scale data collection through the internet and social media. It discusses how companies like Google and OpenAI have gathered massive datasets, including copyrighted materials, and trained AI models on them, often without proper consent from the original creators. This raises ethical questions about ownership, copyright infringement, and the exploitation of artists and writers whose works are used to train AI systems. The video presents various lawsuits filed by authors and creators against AI companies for unauthorized use of their content, emphasizing the growing concern over intellectual property rights.

The video also addresses the labor dynamics behind AI development, revealing that many of the tasks involved in curating and labeling data for AI models are outsourced to low-wage workers, often in developing countries. This “ghost work” consists of repetitive, underpaid tasks that are crucial for AI training but frequently overlooked. The video highlights the exploitative nature of this labor, in which workers face precarious conditions and limited compensation, and raises ethical concerns about the human cost of AI advancements. It contrasts these conditions with the immense wealth and benefits reaped by tech companies and their executives.

Furthermore, the video discusses the potential consequences of AI surpassing human capabilities, exploring the philosophical implications of intelligence and creativity in an age dominated by machines. It raises questions about the future of work, the potential for mass unemployment, and the role of humans in a world where machines may outperform them in nearly every task. The video suggests that although AI can generate content and perform tasks quickly, it lacks a nuanced understanding of human emotions and experiences, even as it stokes fears of obsolescence and redundancy in many professions.

In conclusion, the video calls for a reevaluation of the relationship between AI, labor, and ethics, arguing for greater transparency, accountability, and fair compensation for creators whose works contribute to AI training. It posits that as AI continues to evolve, society must address the implications of its capabilities on human identity, creativity, and the fundamental nature of knowledge. The video emphasizes the need for collective societal reflection on how AI can be harnessed for the benefit of all, rather than allowing it to perpetuate existing inequalities and exploitative practices.