In the video, Jan and Daniel discuss how they won the ARC Prize with large language models (LLMs). Their approach combined a two-step training process with depth-first search (DFS) to generate candidate solutions efficiently. They also highlight the role of augmentations in training and evaluation, which strengthened the model's ability to reflect on and score its own candidates, and they present the result as evidence of what LLMs can do on complex reasoning tasks.

Jan and Daniel walk through their winning solution for the ARC (Abstraction and Reasoning Corpus) Prize, which uses large language models (LLMs) to solve its tasks. They started with plain LLM fine-tuning, which gave decent results, and later added computation outside the LLM that substantially improved performance. Their initial model, a 12-billion-parameter LLM, was trained on tasks represented as grids of colored pixels: each color was mapped to a token, and the grids were fed directly into the LLM. This straightforward approach worked better than expected, which led them to explore further improvements such as test-time fine-tuning.
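As a minimal sketch of the grid-as-text idea, the Python below writes each cell's color (0 to 9) as one digit character and ends every row with a newline, so a tokenizer that maps each digit to its own token sees one token per pixel. The `I`/`O` markers, the function names, and the one-token-per-digit assumption are illustrative choices, not the authors' exact format.

```python
Grid = list[list[int]]


def grid_to_text(grid: Grid) -> str:
    """One digit character per cell (colors 0-9), one line per row."""
    return "\n".join("".join(str(color) for color in row) for row in grid)


def text_to_grid(text: str) -> Grid:
    """Inverse of grid_to_text: parse digit lines back into a grid."""
    return [[int(ch) for ch in line] for line in text.strip().split("\n")]


def format_task(train_pairs: list[tuple[Grid, Grid]], test_input: Grid) -> str:
    """Build a prompt from demonstration pairs; the model continues after the final 'O'.

    The 'I' (input) and 'O' (output) markers are hypothetical delimiters.
    """
    parts = [f"I\n{grid_to_text(i)}\nO\n{grid_to_text(o)}" for i, o in train_pairs]
    parts.append(f"I\n{grid_to_text(test_input)}\nO\n")
    return "\n".join(parts)


prompt = format_task([([[0, 1], [1, 0]], [[1, 0], [0, 1]])], [[2, 0], [0, 2]])
print(prompt)
```

Keeping the encoding this literal means the model's output can be parsed back into a grid with `text_to_grid` and checked cell by cell.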

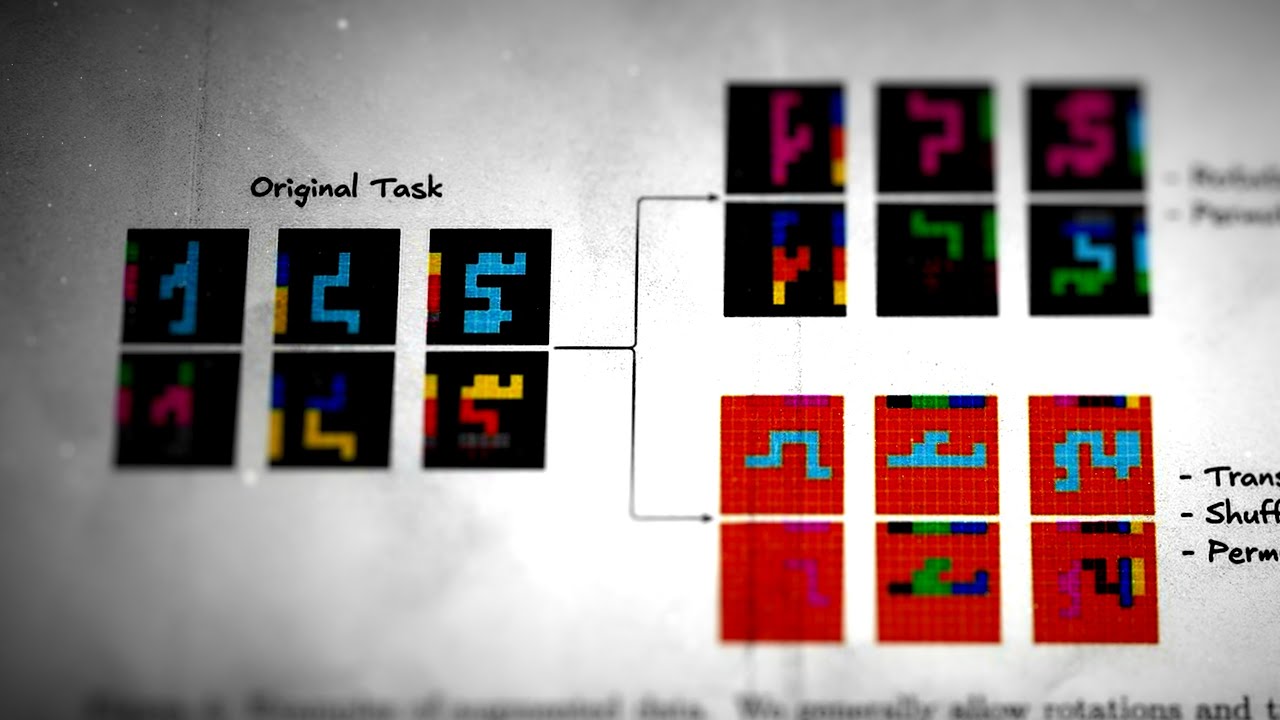

The duo described a two-step training process: first, a long training phase on the official ARC training set, followed by test-time training on validation examples. They found that this additional training improved the LLM's reasoning on ARC by adapting the model to the specific challenges each task presents. They also tried various other improvements during the competition; some worked and others did not.

A key part of their approach was using depth-first search (DFS) to generate multiple candidate solutions efficiently. Compared with conventional sampling, which in their experience often produced weaker candidates, their DFS explored the solution space more systematically by following only high-probability paths. Because DFS keeps only the current path in memory, it was also memory-efficient, and it still produced a variety of candidates, raising the chance that the correct answer was among them. They stressed that this algorithm was central to their strategy because it worked hand in hand with their scoring process.
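The DFS idea can be sketched as follows, assuming a candidate is kept only if its cumulative probability stays above a fixed threshold (`min_prob`, a hypothetical parameter). Because every next-token probability is at most 1, a prefix's probability can only shrink as it grows, so any branch that drops below the threshold can be pruned safely. `next_probs` is a hypothetical stand-in for the LLM's next-token distribution, and the toy table exists only for illustration; a real implementation would also reuse cached model states between sibling branches.

```python
import math
from typing import Callable

Seq = tuple[str, ...]
# Hypothetical stand-in for the LLM: maps a prefix to its next-token distribution.
NextProbs = Callable[[Seq], dict[str, float]]


def dfs_candidates(next_probs: NextProbs, min_prob: float,
                   eos: str = "<eos>", max_len: int = 32) -> list[tuple[Seq, float]]:
    """Return every completed sequence whose total probability is >= min_prob."""
    log_min = math.log(min_prob)
    results: list[tuple[Seq, float]] = []

    def visit(prefix: Seq, logp: float) -> None:
        if len(prefix) >= max_len:
            return  # stop expanding prefixes that reach the length limit
        for tok, p in next_probs(prefix).items():
            if p <= 0.0:
                continue
            new_logp = logp + math.log(p)
            if new_logp < log_min:
                continue  # prune: extending this prefix can only lower its probability
            if tok == eos:
                results.append((prefix, math.exp(new_logp)))
            else:
                visit(prefix + (tok,), new_logp)

    visit((), 0.0)
    results.sort(key=lambda r: r[1], reverse=True)  # most probable first
    return results


# Toy "model" with a fixed next-token table, for illustration only.
TOY_TABLE: dict[Seq, dict[str, float]] = {
    (): {"A": 0.6, "B": 0.4},
    ("A",): {"<eos>": 0.9, "C": 0.1},
    ("B",): {"<eos>": 0.5, "C": 0.5},
    ("A", "C"): {"<eos>": 1.0},
    ("B", "C"): {"<eos>": 1.0},
}


def toy_next_probs(prefix: Seq) -> dict[str, float]:
    return TOY_TABLE.get(prefix, {})


for seq, prob in dfs_candidates(toy_next_probs, min_prob=0.15):
    print(seq, round(prob, 3))
```

Unlike repeated sampling, this returns each qualifying sequence exactly once, and apart from the result list its memory use is bounded by the current path (recursion depth of at most `max_len`).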

Jan and Daniel also discussed the role of augmentations in training and evaluation. By applying symmetry transformations (rotations and reflections) and other augmentations to the input data, they improved the model's ability to evaluate its own outputs. This self-reflection mechanism let the LLM score its candidate solutions more accurately, which led to better selection of the final answers. They noted that incorporating augmentations into the scoring process improved performance significantly and was crucial to achieving a high score in the competition.
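One way to picture augmentation-based scoring: evaluate each candidate under all eight rotations and reflections of the task, transforming the demonstration pairs, the test input, and the candidate consistently, and keep the candidate with the highest total. `log_prob` is a hypothetical stand-in for the log-probability the LLM assigns to a candidate answer, and summing log-probabilities across views (i.e. multiplying probabilities) is an assumed aggregation rule, not necessarily the authors' exact one. Color permutations or other augmentations could be added to the list of transforms in the same way.

```python
from typing import Callable

Grid = list[list[int]]
Pair = tuple[Grid, Grid]
# Hypothetical stand-in for the LLM's log-probability of `candidate`
# as the answer to a (possibly augmented) task.
LogProbFn = Callable[[list[Pair], Grid, Grid], float]


def rot90(g: Grid) -> Grid:
    """Rotate a grid 90 degrees clockwise."""
    return [list(row) for row in zip(*g[::-1])]


def flip(g: Grid) -> Grid:
    """Mirror a grid left to right."""
    return [row[::-1] for row in g]


def dihedral_transforms() -> list[Callable[[Grid], Grid]]:
    """The 8 symmetries of a square: 4 rotations, each optionally mirrored."""
    transforms = []
    for k in range(4):
        for mirror in (False, True):
            def t(g: Grid, k: int = k, mirror: bool = mirror) -> Grid:
                for _ in range(k):
                    g = rot90(g)
                return flip(g) if mirror else g
            transforms.append(t)
    return transforms


def augmented_score(train: list[Pair], test_in: Grid, cand: Grid,
                    log_prob: LogProbFn) -> float:
    """Sum of log-probabilities of `cand` over all 8 symmetric views of the task."""
    total = 0.0
    for t in dihedral_transforms():
        aug_train = [(t(i), t(o)) for i, o in train]
        total += log_prob(aug_train, t(test_in), t(cand))
    return total


def pick_best(train: list[Pair], test_in: Grid, candidates: list[Grid],
              log_prob: LogProbFn) -> Grid:
    return max(candidates, key=lambda c: augmented_score(train, test_in, c, log_prob))


def toy_log_prob(train: list[Pair], test_in: Grid, cand: Grid) -> float:
    # Toy placeholder for an LLM: penalizes each cell that differs from the test input.
    diffs = sum(a != b for r1, r2 in zip(test_in, cand) for a, b in zip(r1, r2))
    return -float(diffs)


test_in = [[1, 2], [3, 4]]
candidates = [[[0, 0], [0, 0]], [[1, 2], [3, 4]]]
print(pick_best([], test_in, candidates, toy_log_prob))
```

Transforming every grid with the same function keeps the task's underlying rule intact, so a candidate that is genuinely correct should score well in every view, while a lucky guess that only looks plausible in one orientation is penalized.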

Finally, they reflected on what their work implies for LLMs on complex reasoning tasks. While LLMs have shown impressive capabilities, challenges remain, in particular keeping models from suffering catastrophic forgetting during continual learning. Their ARC experience shows how much can be gained by combining LLMs with well-designed search and evaluation techniques, which points toward further progress in AI reasoning.