The video explores how the size of large language models (LLMs) affects what they can achieve with test-time computation, particularly in the context of the ARC Prize. Its main point is that under a fixed compute budget, a smaller model can outperform a larger one because it can generate, evaluate, and filter many more candidate outputs. It also stresses that the test-time strategy matters alongside model size: with an efficient evaluation process, smaller models can reach competitive results.

The video discusses the relationship between the size of large language models (LLMs) and their performance under test-time computation, particularly in the context of the ARC (Abstraction and Reasoning Corpus) Prize. Larger models are generally more capable, but each output they produce costs significantly more compute. The speaker suggests that under a fixed compute budget, smaller models can often outperform larger ones because they can generate, evaluate, and filter more outputs for the same cost.

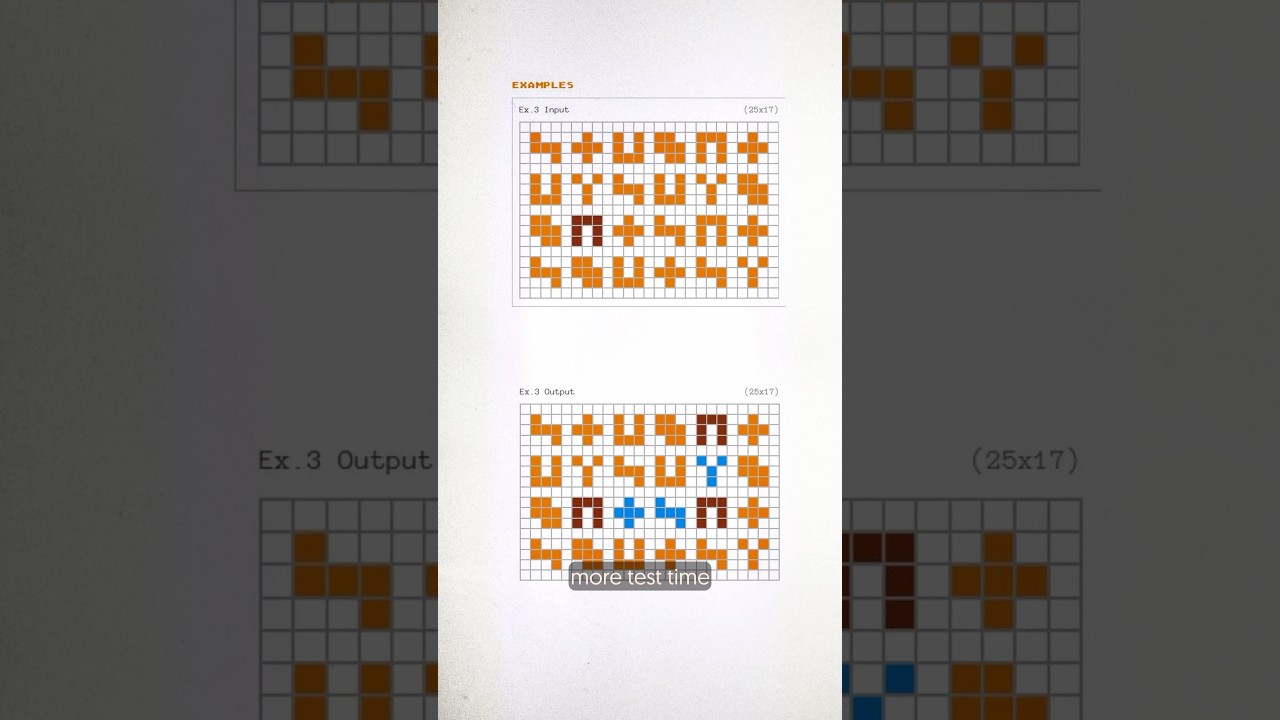

The speaker emphasizes the importance of test-time computation: because a smaller model is cheaper per sample, the same budget buys more sampling and more evaluation of candidates. Drawing and filtering more candidates raises the chance that a good solution is both generated and selected. The trade-off between model size and compute cost is a central theme, since a larger model's gains in quality are not always proportional to the extra resources it consumes.
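The budget argument can be made concrete with a small calculation. The sketch below is illustrative only: the costs and per-sample success rates are made-up numbers, not figures from the video, and it assumes samples are independent and that a filter can reliably recognize a correct candidate once it has been generated. The function names are hypothetical.

```python
def samples_within_budget(budget: float, cost_per_sample: float) -> int:
    """Number of whole samples a fixed compute budget can pay for."""
    return int(budget // cost_per_sample)


def chance_of_any_success(p_single: float, n_samples: int) -> float:
    """Probability that at least one of n independent samples is correct.

    Assumes a perfect filter: a correct sample, once drawn, is always selected.
    """
    return 1.0 - (1.0 - p_single) ** n_samples


# Hypothetical numbers, chosen only to illustrate the trade-off.
BUDGET = 100.0
small = {"cost": 1.0, "p": 0.03}   # cheap, rarely right on a single try
large = {"cost": 20.0, "p": 0.20}  # 20x the cost, much better per try

for name, model in (("small", small), ("large", large)):
    n = samples_within_budget(BUDGET, model["cost"])
    print(name, n, round(chance_of_any_success(model["p"], n), 3))
```

With these assumed numbers the small model gets 100 tries and the large model gets 5, so the small model comes out ahead despite its much lower per-sample accuracy. The conclusion flips if the per-sample gap is wide enough or if the filter is unreliable, which is why the comparison has to be made per budget and per task.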

For depth-first search (DFS) strategies, the video explains that the balance can shift toward larger models despite their higher cost per step. Larger models tend to have a better grasp of the problem space, so they can decide earlier and more reliably which paths to prune. Total computation may still increase, but the search visits fewer branches, which offsets part of the added per-step cost.
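The pruning effect can be sketched with a toy search. This is not the method shown in the video: it assumes a two-token vocabulary, a fixed target sequence, and a hypothetical scorer that assigns probability `p_correct` to the right next token. A branch is pruned when its cumulative probability drops below a threshold, and a "confident" scorer stands in for the larger model.

```python
from typing import Callable, Dict, List, Tuple

Path = Tuple[str, ...]
Scorer = Callable[[Path], Dict[str, float]]

TARGET: Path = ("a", "b", "a")  # toy "correct" sequence (assumption)


def make_scorer(p_correct: float) -> Scorer:
    """Hypothetical model: gives p_correct to the right next token."""
    def score_children(path: Path) -> Dict[str, float]:
        right = TARGET[len(path)]
        return {tok: (p_correct if tok == right else 1.0 - p_correct)
                for tok in ("a", "b")}
    return score_children


def dfs_with_pruning(score_children: Scorer, depth: int,
                     threshold: float) -> Tuple[List[Path], int]:
    """Depth-first search that skips branches below a probability threshold.

    Returns the completed paths and the number of nodes visited.
    """
    completed: List[Path] = []
    visited = 0

    def visit(path: Path, prob: float) -> None:
        nonlocal visited
        visited += 1
        if len(path) == depth:
            completed.append(path)
            return
        for token, p in score_children(path).items():
            child_prob = prob * p
            if child_prob >= threshold:  # prune unlikely branches early
                visit(path + (token,), child_prob)

    visit((), 1.0)
    return completed, visited


confident_paths, confident_nodes = dfs_with_pruning(make_scorer(0.9), 3, 0.2)
uncertain_paths, uncertain_nodes = dfs_with_pruning(make_scorer(0.6), 3, 0.2)
print(confident_nodes, uncertain_nodes)
```

In this toy setup both scorers find the target, but the confident one visits 4 nodes and the uncertain one visits 7, out of 15 in the unpruned tree. If each node costs the confident model several times more, it can still be the more expensive option overall, but by a smaller factor than its per-node cost alone would suggest.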

The speaker also touches on the implications of these findings for future research and model development. Researchers should consider not only the size of the model but also the computational strategy used at test time. With a well-optimized evaluation process, even smaller models can achieve competitive results, which could influence how models are designed and deployed.

Overall, the video presents a nuanced view of the trade-offs involved in using LLMs for complex tasks. It encourages a closer look at how model size and test-time strategy interact, and it advocates choosing the combination that makes the best use of a given compute budget in AI challenges like the ARC Prize.