The video discusses how large language models (LLMs) might store factual knowledge, focusing on the multi-layer perceptrons (MLPs) inside their transformer architecture. It explains how an MLP transforms high-dimensional vectors through matrix multiplications and a non-linear function, so that facts can be encoded in the MLP's learned weights. It also shows why knowledge representation is more complex in practice because of a phenomenon called superposition.

In the video, the speaker explores how LLMs store factual knowledge, using the example of a model predicting that “Michael Jordan plays the sport of basketball.” Making this prediction suggests that the model has internalized specific facts about individuals. Researchers from Google DeepMind have investigated this phenomenon and concluded that such facts are likely stored within the model's MLPs. A complete understanding of how facts are stored remains elusive, but the video aims to explain how MLPs work and how they might encode specific knowledge.

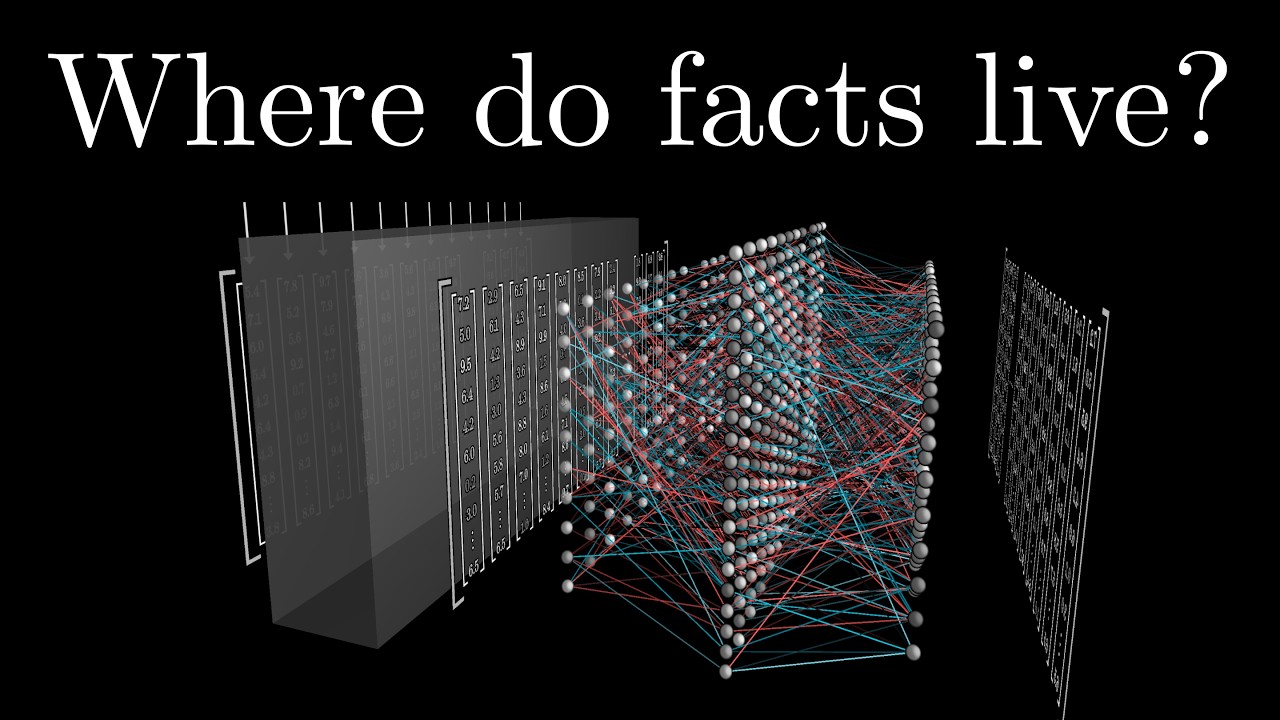

The speaker provides a brief overview of the transformer architecture that underpins LLMs. The input text is split into tokens, and each token is mapped to a high-dimensional vector. These vectors then pass through alternating attention blocks and MLP blocks. The goal is for the vectors to absorb contextual information and general knowledge so that the model can accurately predict the next token. The speaker emphasizes that these vectors live in a high-dimensional space where different directions can represent different meanings, such as gender.
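To make the idea of meaningful directions concrete, here is a toy sketch in Python. Everything in it is an assumption for illustration: the whitespace tokenizer, the four-word vocabulary, the hand-written 3-dimensional embedding table (real models use thousands of dimensions and learned values), and the "gender" direction taken as the difference between the `man` and `woman` embeddings. The dot product with that direction measures how far a vector points along it.

```python
# Toy sketch, not a real tokenizer or model: hypothetical vocabulary and
# hand-written 3-dimensional embeddings chosen only for illustration.
VOCAB = {"king": 0, "queen": 1, "man": 2, "woman": 3}

# Row i is the embedding vector for token id i.
EMBEDDINGS = [
    [0.9, 0.8, 0.1],   # king
    [0.9, -0.8, 0.1],  # queen
    [0.1, 0.7, 0.0],   # man
    [0.1, -0.7, 0.0],  # woman
]

def tokenize(text):
    """Split on whitespace and map each word to its token id."""
    return [VOCAB[word] for word in text.lower().split()]

def embed(token_ids):
    """Look up one vector per token id."""
    return [EMBEDDINGS[i] for i in token_ids]

def dot(u, v):
    """Dot product: how far u points along v."""
    return sum(a * b for a, b in zip(u, v))

# Hypothetical "gender" direction: difference between two embeddings.
gender_direction = [m - w for m, w in zip(EMBEDDINGS[VOCAB["man"]], EMBEDDINGS[VOCAB["woman"]])]

for word, vector in zip(["king", "queen"], embed(tokenize("king queen"))):
    print(word, round(dot(vector, gender_direction), 2))
# king 1.12
# queen -1.12
```

The sign of the dot product tells which side of the direction a vector falls on; in a trained model such directions are learned, not hand-picked.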

To illustrate how an MLP could store a specific fact, the speaker presents a simplified example involving the first name “Michael,” the last name “Jordan,” and the sport “basketball.” The video assumes that certain directions in the high-dimensional space correspond to these concepts and, for simplicity, that these directions are perpendicular to one another. A vector that encodes both the first name Michael and the last name Jordan flows through the MLP and undergoes a series of operations, including matrix multiplications and a non-linear transformation. The MLP's output, added back to that vector, then encodes the fact that Michael Jordan plays basketball.
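The starting point of this example can be sketched with three perpendicular unit vectors. This is a minimal sketch under assumed coordinates (3 dimensions, one axis per concept); it shows that a "Michael Jordan" vector can be built as the sum of the two name directions and that, before the MLP runs, it has no component along "basketball."

```python
# Toy sketch under the video's simplifying assumption: each concept is a
# direction, and the directions are perpendicular unit vectors
# (3 dimensions here; real embeddings have thousands).
MICHAEL = [1.0, 0.0, 0.0]     # "first name Michael"
JORDAN = [0.0, 1.0, 0.0]      # "last name Jordan"
BASKETBALL = [0.0, 0.0, 1.0]  # "basketball"

def add(*vectors):
    """Component-wise sum of equal-length vectors."""
    return [sum(parts) for parts in zip(*vectors)]

def dot(u, v):
    """Dot product: how far u points along the direction v."""
    return sum(a * b for a, b in zip(u, v))

michael_jordan = add(MICHAEL, JORDAN)  # encodes both names at once
just_michael = MICHAEL                 # first name only

print(dot(michael_jordan, MICHAEL), dot(michael_jordan, JORDAN))  # 1.0 1.0
print(dot(just_michael, MICHAEL), dot(just_michael, JORDAN))      # 1.0 0.0
print(dot(michael_jordan, BASKETBALL))                            # 0.0
```

The two name dot products sum to 2 only when both names are present, which is the kind of signal the MLP's first step can threshold.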

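The MLP operations consist of two main steps, an up-projection and a down-projection, each involving a matrix multiplication and a bias addition, with a non-linear function (ReLU in the video's explanation) applied between them. In the up-projection, each row of the matrix effectively asks a question about the input vector, such as whether it encodes both “first name Michael” and “last name Jordan”; the bias and the non-linearity let a neuron activate only when the answer is yes. In the down-projection, each active neuron contributes its corresponding column of the matrix, such as a “basketball” direction, scaled by how strongly the neuron fired, and the result is added to the original vector. The speaker notes that the MLP processes every vector in the sequence independently and in parallel; unlike in attention, the vectors do not exchange information here.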
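The two steps can be sketched end to end with hand-set weights. This is a minimal sketch, not the video's exact numbers or a real model: the 5-dimensional space, the perpendicular directions, the hypothetical second fact (“Michael Phelps” and “swimming,” included only for contrast), the two-neuron hidden layer, and the bias of -1 are all assumptions. Real MLP blocks use learned weights and a hidden layer several times wider than the embedding, and often use GELU rather than ReLU.

```python
def unit(index, dim=5):
    """Unit vector along one coordinate axis (assumed perpendicular concepts)."""
    return [1.0 if i == index else 0.0 for i in range(dim)]

# Hypothetical concept directions; PHELPS and SWIMMING are only for contrast.
MICHAEL, JORDAN, PHELPS, BASKETBALL, SWIMMING = (unit(i) for i in range(5))

def add(*vectors):
    """Component-wise sum of equal-length vectors."""
    return [sum(parts) for parts in zip(*vectors)]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [dot(row, vector) for row in matrix]

def relu(values):
    return [max(0.0, v) for v in values]

# Up-projection: each row "asks a question" of the input vector.
W_up = [add(MICHAEL, JORDAN),   # neuron 0: Michael AND Jordan?
        add(MICHAEL, PHELPS)]   # neuron 1: Michael AND Phelps? (hypothetical)
b_up = [-1.0, -1.0]             # dot product 2 survives ReLU as 1; 1 is clipped to 0

# Down-projection: column j is the vector neuron j writes to the output.
columns = [BASKETBALL, SWIMMING]
W_down = [[col[d] for col in columns] for d in range(5)]  # 5 x 2 (rows = output dims)
b_down = [0.0] * 5

def mlp_block(x):
    hidden = relu(add(matvec(W_up, x), b_up))     # up-projection + bias, then ReLU
    update = add(matvec(W_down, hidden), b_down)  # down-projection + bias
    return add(x, update)                         # output is added back to the input

sequence = [add(MICHAEL, JORDAN), add(MICHAEL, PHELPS), JORDAN]
outputs = [mlp_block(x) for x in sequence]  # each vector handled independently
for out in outputs:
    print(dot(out, BASKETBALL), dot(out, SWIMMING))
# 1.0 0.0
# 0.0 1.0
# 0.0 0.0
```

The bias of -1 makes each neuron behave like an AND gate: only a vector carrying both names pushes the pre-activation above zero. Because `mlp_block` is applied to each vector separately with the same weights, the whole sequence can be processed in parallel.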

Finally, the speaker reflects on the implications for how facts are stored in LLMs, noting that individual neurons probably do not represent single, clean features because of a phenomenon called superposition. In a high-dimensional space, the number of directions that are nearly (rather than exactly) perpendicular to one another can far exceed the number of dimensions. An LLM can therefore represent more independent ideas than its embedding space has dimensions, with each idea spread across a combination of neurons, which makes the stored knowledge much harder to interpret. The video concludes by pointing to future discussions of how LLMs are trained, including backpropagation and reinforcement learning from human feedback.
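Superposition rests on a geometric fact: a space with N dimensions holds at most N exactly perpendicular directions, but it can hold many more directions that are only nearly perpendicular, and this gap widens rapidly as N grows. The following Python sketch illustrates the idea with random directions. The choice of 50 dimensions, 200 directions, random sampling, and the 0.3 threshold for "nearly perpendicular" are illustrative assumptions, not a description of how a model actually arranges its features.

```python
import math
import random

def random_unit_vector(dim, rng):
    """Sample a direction uniformly on the unit sphere (normalized Gaussian)."""
    v = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def cosine(u, v):
    """Cosine similarity; for unit vectors this is just the dot product."""
    return sum(a * b for a, b in zip(u, v))

rng = random.Random(0)   # fixed seed for reproducibility
DIM, COUNT = 50, 200     # four times more directions than dimensions
directions = [random_unit_vector(DIM, rng) for _ in range(COUNT)]

# Absolute cosine for every distinct pair: 0 = perpendicular, 1 = parallel.
cosines = [abs(cosine(directions[i], directions[j]))
           for i in range(COUNT) for j in range(i + 1, COUNT)]
mean_cos = sum(cosines) / len(cosines)
nearly_perpendicular = sum(c < 0.3 for c in cosines) / len(cosines)

print(f"{COUNT} directions in {DIM} dimensions")
print(f"mean |cos| = {mean_cos:.3f}, "
      f"fraction of pairs with |cos| < 0.3 = {nearly_perpendicular:.3f}")
```

Even with four times more directions than dimensions, typical pairs are far from parallel, and the average |cos| between random directions shrinks roughly like 1/sqrt(N) as the dimension grows. The cost is a small amount of interference between directions, which is why a feature cannot be read cleanly off a single neuron.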