The video discusses Prompt Wizard, a Microsoft framework that optimizes prompts for large language models (LLMs) through an iterative feedback process in order to improve their reasoning. It highlights three key insights: feedback-driven refinement, joint optimization, and self-generated chain-of-thought steps. It closes by encouraging viewers to try the tool on prompts in their own projects.

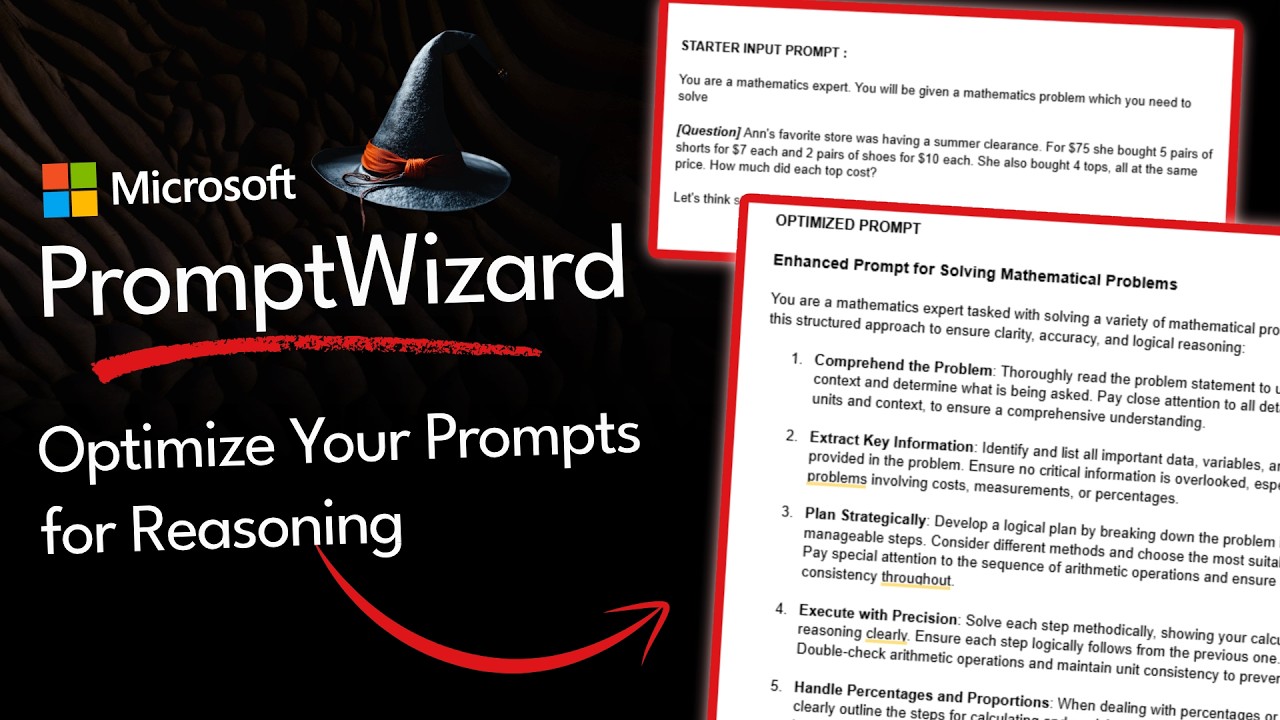

In the video, the presenter discusses the importance of optimizing prompts for large language models (LLMs) to enhance reasoning capabilities. They emphasize that the quality of outputs from LLMs is heavily dependent on the context and inputs provided. Despite the advancements in model performance, many users still struggle with prompt optimization, leading to suboptimal results. The presenter introduces a new framework from Microsoft called Prompt Wizard, designed to help users refine their prompts and improve the reasoning processes of LLMs.

Prompt Wizard operates on the principle of treating prompt optimization as an iterative feedback problem. It utilizes an LLM to critique its own outputs and refine the prompts based on this feedback. The framework aims to evolve both the instructions given to the model and the in-context learning examples used for specific tasks. This approach is particularly relevant for organizations like Microsoft and Google, which continuously train and fine-tune their models, necessitating adaptable and effective prompting strategies.
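The critique-and-refine idea described above can be sketched in a few lines. This is a minimal illustration, not the framework's actual code: `LLM` stands for any function that maps a prompt string to a completion, and `refine_prompt`, the prompt wording, and the `rounds` parameter are all hypothetical names chosen for this example.

```python
from typing import Callable

# Assumption: an "LLM" here is any callable from prompt text to completion text.
LLM = Callable[[str], str]


def refine_prompt(llm: LLM, prompt: str, task_input: str, rounds: int = 3) -> str:
    """Run a simple answer -> critique -> rewrite loop and return the final prompt."""
    for _ in range(rounds):
        # 1. Produce an answer with the current prompt.
        answer = llm(f"{prompt}\n\nInput: {task_input}")
        # 2. Ask the model to critique the prompt in light of that answer.
        critique = llm(
            "Critique the following prompt given the answer it produced.\n"
            f"Prompt: {prompt}\nAnswer: {answer}"
        )
        # 3. Ask the model to rewrite the prompt so it addresses the critique.
        prompt = llm(
            "Rewrite the prompt to address the critique. Return only the new prompt.\n"
            f"Prompt: {prompt}\nCritique: {critique}"
        )
    return prompt
```

Each round makes three model calls, so the cost grows linearly with `rounds`; a real setup would likely also stop early once the critique reports no remaining issues.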

The video outlines three key insights of the Prompt Wizard framework: feedback-driven refinement, joint optimization, and self-generated chain-of-thought steps. The first insight is an iterative loop in which the LLM critiques its own output and refines the prompt accordingly. The second, joint optimization, evolves the instructions together with the in-context examples and creates diverse synthetic examples that align with the task at hand. The third incorporates chain-of-thought reasoning to enhance the model’s problem-solving capabilities, allowing it to generate both correct and incorrect reasoning patterns for training purposes.
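To make the combination of instructions, examples, and reasoning steps concrete, the sketch below assembles a few-shot prompt in which every example carries its own reasoning. The `Example` class, the `build_prompt` function, and the `Q:` / `Reasoning:` / `A:` layout are assumptions made for illustration; the video does not specify the format the framework uses.

```python
from dataclasses import dataclass


@dataclass
class Example:
    question: str
    reasoning: str  # chain-of-thought steps (model-generated in the described approach)
    answer: str


def build_prompt(instruction: str, examples: list[Example], question: str) -> str:
    """Join an instruction, worked examples, and the new question into one prompt."""
    parts = [instruction.strip()]
    for ex in examples:
        parts.append(f"Q: {ex.question}\nReasoning: {ex.reasoning}\nA: {ex.answer}")
    # End with an open "Reasoning:" cue so the model reasons before answering.
    parts.append(f"Q: {question}\nReasoning:")
    return "\n\n".join(parts)
```

Because the instruction and the examples end up in the same prompt, changing one can affect how well the other works, which is one way to read the case for optimizing them jointly.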

The presenter provides a detailed walkthrough of how the Prompt Wizard framework works, including the initial setup and the iterative process of refining prompts. They explain how the framework generates mutated instructions and evaluates their effectiveness, ultimately leading to improved prompt instructions. The process involves critiquing and synthesizing new examples, which strengthens the reasoning capabilities of the model and enhances the overall output quality.
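The mutate-and-evaluate step in this walkthrough amounts to a small search over candidate instructions. The following sketch assumes two caller-supplied functions that are not part of the source: `mutate`, which returns variations of an instruction (in practice an LLM call), and `score`, which rates an instruction (for instance, accuracy on a small batch of labeled examples). It uses plain greedy selection, which may differ from the framework's actual selection strategy.

```python
from typing import Callable, Sequence


def select_best_instruction(
    mutate: Callable[[str], Sequence[str]],  # hypothetical: produces instruction variants
    score: Callable[[str], float],           # hypothetical: higher means better
    instruction: str,
    iterations: int = 3,
) -> str:
    """Greedy search: keep whichever mutated instruction scores highest so far."""
    best, best_score = instruction, score(instruction)
    for _ in range(iterations):
        # Mutate the best instruction found at the start of this iteration.
        for candidate in mutate(best):
            candidate_score = score(candidate)
            if candidate_score > best_score:
                best, best_score = candidate, candidate_score
    return best
```

With a toy setup such as `mutate = lambda s: [s + "a", s + "bb"]` and `score = len`, the function simply keeps the longest candidate each round, which is enough to check the control flow before wiring in real model calls.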

In conclusion, the video highlights the potential of the Prompt Wizard framework for optimizing prompts in various applications. The presenter encourages viewers to explore this tool for their projects, especially if they have existing evaluations and prompts that could benefit from refinement. By leveraging the capabilities of Prompt Wizard, users can achieve more effective and tailored prompts, ultimately enhancing the performance of LLMs in solving complex tasks.