The video explains how vector databases enhance AI by storing data as high-dimensional vectors. Embedding models generate these vectors, which capture the semantic meaning of the data. Similarity search then compares vectors using measures such as cosine similarity or Euclidean distance. This lets AI systems retrieve relevant unstructured information based on semantic closeness rather than exact matches.

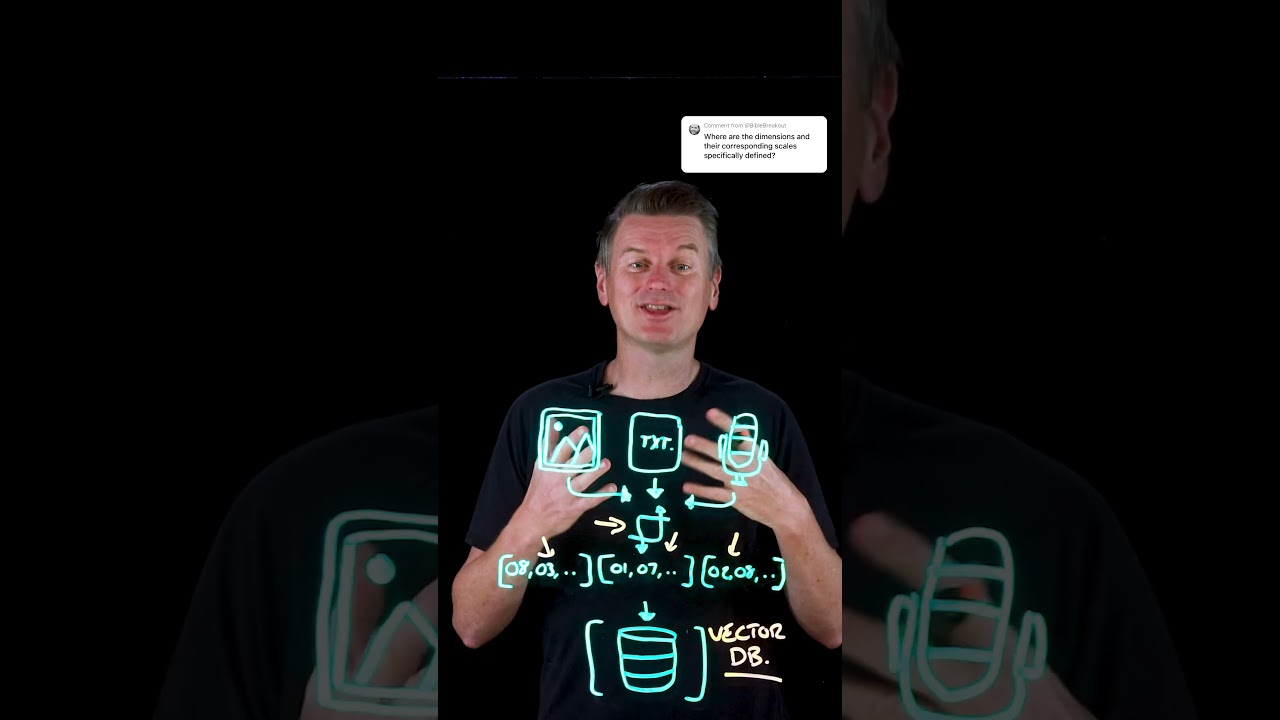

In more detail, vector databases support AI applications by storing data in a specialized form called vectors. Rather than indexing raw data such as text or images directly, they use embedding models to convert the original content into high-dimensional vectors. These vectors are long lists of numbers that encode the semantic meaning of the content, and that encoding is what makes effective similarity search possible.
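The store-then-search idea can be sketched in a few lines of Python. This is a minimal, hypothetical in-memory store. The class `InMemoryVectorStore` and its `add`/`search` methods are illustrative and are not any product's API. The three-dimensional vectors, including the query, are made up by hand. In practice, both the stored vectors and the query vector would come from the same embedding model and would have far more dimensions. The brute-force scan keeps the sketch short. Production vector databases typically use approximate nearest-neighbor indexes instead.

```python
import math
from dataclasses import dataclass, field


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between a and b; 1.0 means same direction."""
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return sum(x * y for x, y in zip(a, b)) / (norm_a * norm_b)


@dataclass
class InMemoryVectorStore:
    """Hypothetical toy store that keeps each vector next to its original item."""
    dim: int
    ids: list[str] = field(default_factory=list)
    vectors: list[list[float]] = field(default_factory=list)
    payloads: list[str] = field(default_factory=list)

    def add(self, item_id: str, vector: list[float], payload: str) -> None:
        # Every vector in one collection must have the same dimensionality.
        if len(vector) != self.dim:
            raise ValueError(f"expected {self.dim} dimensions, got {len(vector)}")
        self.ids.append(item_id)
        self.vectors.append(vector)
        self.payloads.append(payload)

    def search(self, query: list[float], k: int = 3) -> list[tuple[str, float, str]]:
        # Brute-force scan: score every stored vector, then keep the k best.
        scored = [
            (item_id, cosine_similarity(query, vec), payload)
            for item_id, vec, payload in zip(self.ids, self.vectors, self.payloads)
        ]
        scored.sort(key=lambda item: item[1], reverse=True)
        return scored[:k]


# Hand-made 3-dimensional vectors standing in for real embeddings.
store = InMemoryVectorStore(dim=3)
store.add("a", [0.9, 0.1, 0.0], "a photo of a cat")
store.add("b", [0.8, 0.2, 0.1], "a kitten sleeping")
store.add("c", [0.0, 0.1, 0.9], "quarterly sales report")

for item_id, score, text in store.search([0.85, 0.15, 0.05], k=2):
    print(item_id, round(score, 3), text)
```

The two cat-related items rank above the sales report because their vectors point in nearly the same direction as the query. The store also returns the original text alongside each score, so the caller gets back usable content rather than just numbers.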

Embedding models play a crucial role by transforming raw data into these numerical vectors. Each vector captures the underlying features and relationships in the data. As a result, content can be compared by its semantic properties rather than by surface-level features such as exact wording or pixel values. This makes retrieval more meaningful, especially for complex data types like text and images.
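The interface of an embedding model (raw content in, a fixed-length list of floats out) can be illustrated with a deliberately simple stand-in. The function `toy_embed` below is hypothetical and is not a learned model. It hashes each word into one of `dim` buckets and normalizes the counts, so it captures only word overlap. A trained embedding model, by contrast, would place "cat" and "kitten" close together even though they share no words, and this toy cannot do that.

```python
import hashlib
import math
import re


def toy_embed(text: str, dim: int = 16) -> list[float]:
    """Hypothetical stand-in for an embedding model (hashed bag of words).

    Maps any text to a vector of exactly `dim` floats with unit length.
    It reflects shared words only, not meaning.
    """
    vec = [0.0] * dim
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        # md5 gives a stable bucket across runs (Python's built-in hash() is salted).
        bucket = int(hashlib.md5(word.encode("utf-8")).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(x * x for x in vec))
    # Scale to unit length; an empty text stays the zero vector.
    return [x / norm for x in vec] if norm else vec


v1 = toy_embed("Vector databases store embeddings")
v2 = toy_embed("Cats sleep all day")
print(len(v1), len(v2))  # 16 16
```

Whatever the input length, every output has the same number of dimensions. That fixed dimensionality is what allows any two items to be compared numerically.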

A common question the video addresses is what the dimensions of these vectors represent. In a traditional database table, each column has a clear label and unit. The dimensions of a vector embedding, by contrast, are not explicitly defined or labeled. They are learned while the embedding model is trained and represent abstract numerical features with no human-readable names. This makes individual dimensions hard to interpret, and for typical use cases interpreting them is unnecessary.

The video emphasizes that, in most cases, these dimensions cannot be interpreted directly. Occasionally a dimension may correlate with an intuitive concept, such as color intensity in images or sentiment in text. In general, though, the vector is treated as a whole. What matters is its overall position in high-dimensional space relative to other vectors, not the meaning of any single component.
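One way to see why individual dimensions carry no fixed meaning for similarity search is to rotate every vector by the same rotation. Each coordinate changes, yet cosine similarities and Euclidean distances stay exactly the same, so search results are unaffected. The sketch below demonstrates this with a single two-axis (Givens) rotation. The helper `rotate_pair` and the 4-dimensional vectors are hypothetical, chosen only for illustration.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between a and b."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def rotate_pair(vec: list[float], i: int, j: int, theta: float) -> list[float]:
    """Rotate coordinates i and j of vec by angle theta (a Givens rotation)."""
    out = list(vec)
    c, s = math.cos(theta), math.sin(theta)
    out[i] = c * vec[i] - s * vec[j]
    out[j] = s * vec[i] + c * vec[j]
    return out


# Hypothetical 4-dimensional embeddings; the numbers are made up.
a = [0.2, -0.7, 0.5, 0.1]
b = [0.3, -0.6, 0.4, 0.0]

# Apply the same rotation to both vectors: individual coordinates change,
# but the angle and distance between the vectors do not.
a_rot = rotate_pair(a, 0, 2, 0.8)
b_rot = rotate_pair(b, 0, 2, 0.8)

print(a[0], a_rot[0])  # the "first dimension" now holds a different value
print(cosine_similarity(a, b), cosine_similarity(a_rot, b_rot))  # equal up to float rounding
print(math.dist(a, b), math.dist(a_rot, b_rot))  # equal up to float rounding
```

Because a rotated copy of the embedding space would return identical search results, the particular axes a model happens to learn are, for cosine or Euclidean search, only one of many equivalent choices. Only the relative positions of the vectors matter.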

Finally, similarity search in a vector database relies on measures such as cosine similarity or Euclidean distance to find semantically similar data points. Cosine similarity compares the directions of two vectors (the angle between them). Euclidean distance measures the straight-line distance between their positions. In both cases, the whole vector is compared at once. This lets AI systems retrieve relevant information based on semantic closeness rather than exact matches or predefined labels, which makes complex, unstructured data far easier to search and use.
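The two measures can be written as $\cos(a, b) = \frac{a \cdot b}{\lVert a \rVert \, \lVert b \rVert}$ (higher is more similar) and $d(a, b) = \lVert a - b \rVert$ (lower is more similar). They do not always agree. Cosine ignores vector length, while Euclidean distance does not. The pure-Python sketch below shows them ranking the same two items differently. The vectors and the helper names `rank` and `normalize` are hypothetical. It also shows that after scaling all vectors to unit length, the rankings coincide, because for unit vectors $\lVert a - b \rVert^2 = 2 - 2\cos(a, b)$.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """(a . b) / (|a| |b|): 1.0 means same direction; higher means more similar."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def euclidean_distance(a: list[float], b: list[float]) -> float:
    """Straight-line distance |a - b|; lower means more similar."""
    return math.dist(a, b)


def normalize(v: list[float]) -> list[float]:
    """Scale v to unit length."""
    n = math.hypot(*v)
    return [x / n for x in v]


def rank(query: list[float], corpus: dict[str, list[float]], measure: str) -> list[str]:
    """Return corpus keys ordered from most to least similar to query."""
    if measure == "cosine":
        return sorted(corpus, key=lambda k: cosine_similarity(query, corpus[k]), reverse=True)
    if measure == "euclidean":
        return sorted(corpus, key=lambda k: euclidean_distance(query, corpus[k]))
    raise ValueError(f"unknown measure: {measure}")


# Hypothetical 2-D vectors chosen to make the two measures disagree:
# "far_same_dir" points the same way as the query but is much longer.
query = [1.0, 1.0]
corpus = {"far_same_dir": [5.0, 5.0], "near_other_dir": [1.0, 0.0]}

print(rank(query, corpus, "cosine"))     # ['far_same_dir', 'near_other_dir']
print(rank(query, corpus, "euclidean"))  # ['near_other_dir', 'far_same_dir']

# With unit-length vectors, |a - b|^2 = 2 - 2 cos(a, b), so the rankings agree.
unit = {k: normalize(v) for k, v in corpus.items()}
print(rank(normalize(query), unit, "euclidean"))  # ['far_same_dir', 'near_other_dir']
```

Which measure is appropriate depends on how the embeddings were produced. When vectors are normalized to unit length, the choice between the two stops affecting the ranking.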