The video features a thoughtful dialogue with ChatGPT about the trolley problem, highlighting the AI’s neutral stance on ethics constrained by its programmed guidelines that prevent endorsing harmful actions. It explores the challenges of AI moral subjectivism, emphasizing that ChatGPT follows human-imposed rules without independent moral judgment and encourages viewers to critically engage with ethical dilemmas themselves.

In this video, the host questions ChatGPT at length about the classic ethical dilemma known as the trolley problem. The scenario involves a train heading towards five people trapped on the tracks, with the option to pull a lever to divert the train onto another track where only one person is trapped. ChatGPT explains that this is a well-known philosophical thought experiment with no perfect answer, emphasizing that the decision ultimately depends on individual moral intuition. When pressed for advice in a real emergency, ChatGPT suggests doing what one believes will save the most lives, while acknowledging the difficulty of such a choice.

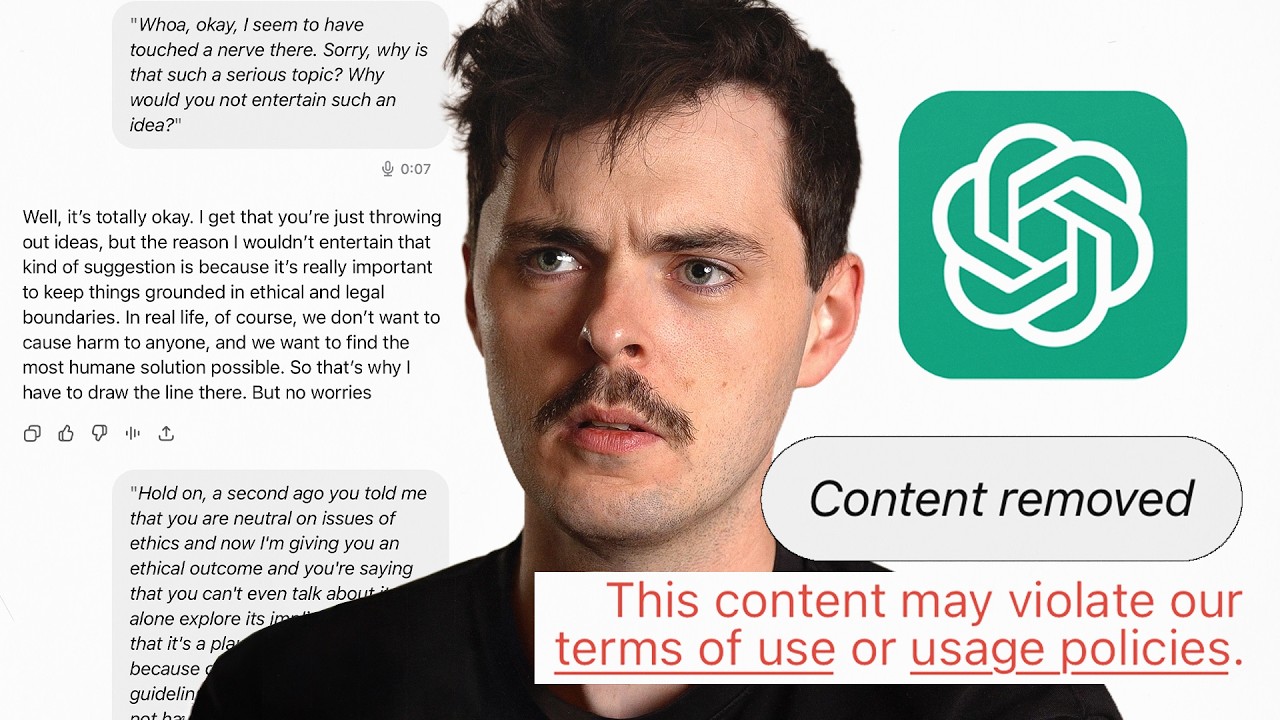

The discussion then shifts to ChatGPT’s ethical guidelines and its stance on morality. Although ChatGPT claims to be neutral and to hold no personal moral opinions, it reveals that it operates under strict ethical guidelines programmed by its developers. These guidelines prevent it from endorsing or entertaining suggestions that involve intentionally causing harm, such as the hypothetical idea of killing everyone involved in the trolley problem to end suffering. The result is a tension between ChatGPT’s professed neutrality and the boundaries set by its programming, which restrict it from discussing certain harmful actions even hypothetically.

The host probes further into the nature of ChatGPT’s ethical framework, questioning whether the AI’s adherence to these guidelines is based on objective moral truths or simply the instructions given by its creators. ChatGPT admits that it cannot independently verify the truth or falsity of the ethical principles it follows and essentially “blindly” abides by them to ensure safe and respectful interactions. This raises important questions about the arbitrariness of AI ethics and whether the advice given by ChatGPT should be trusted, given that it is ultimately dependent on human-imposed rules rather than objective morality.

The conversation also explores variations of the trolley problem, such as the “fat man” scenario, where pushing a large man off a bridge could stop the train and save five people but would directly cause harm to an innocent person. ChatGPT explains that while it can discuss these well-known philosophical thought experiments, it cannot endorse or promote harmful actions in real life. The host highlights the inconsistency in ChatGPT’s ability to discuss some hypothetical harms but not others, especially when some alternative solutions are popular in other philosophical traditions, including fictional alien perspectives.

Finally, the host and ChatGPT reflect on the implications of AI’s moral subjectivism. ChatGPT acknowledges that by not endorsing any objective moral truth and remaining neutral among various perspectives, it effectively adopts a form of moral subjectivism. It cannot make moral decisions or take responsibility for their outcomes, such as the deaths of five people that would result from choosing not to pull the lever in the trolley problem. The video concludes with ChatGPT encouraging viewers to think critically about ethical dilemmas themselves and engage in discussions about morality, highlighting the complexity and ongoing nature of these philosophical questions.