The video explores the debate over political bias in AI language models, in particular the perception that models like ChatGPT lean left. That perception may partly reflect how vocal right-leaning critics are, and any actual bias may stem from the data these models are trained on. A study assessing the political orientation of various AI models found that most leaned toward left-liberal positions, raising questions about the implications of such biases for AI development.

The video discusses the ongoing debate about political bias in AI language models, focusing on claims from the political right that models like ChatGPT exhibit a left-leaning bias. The narrator suggests that these complaints may partly be a perception issue: right-leaning individuals may be more vocal about their grievances, or more actively seek out examples of bias. The video also points out that ChatGPT is not the only AI model available; others, such as Meta’s Llama and Elon Musk’s Grok, have also faced scrutiny for their political leanings.

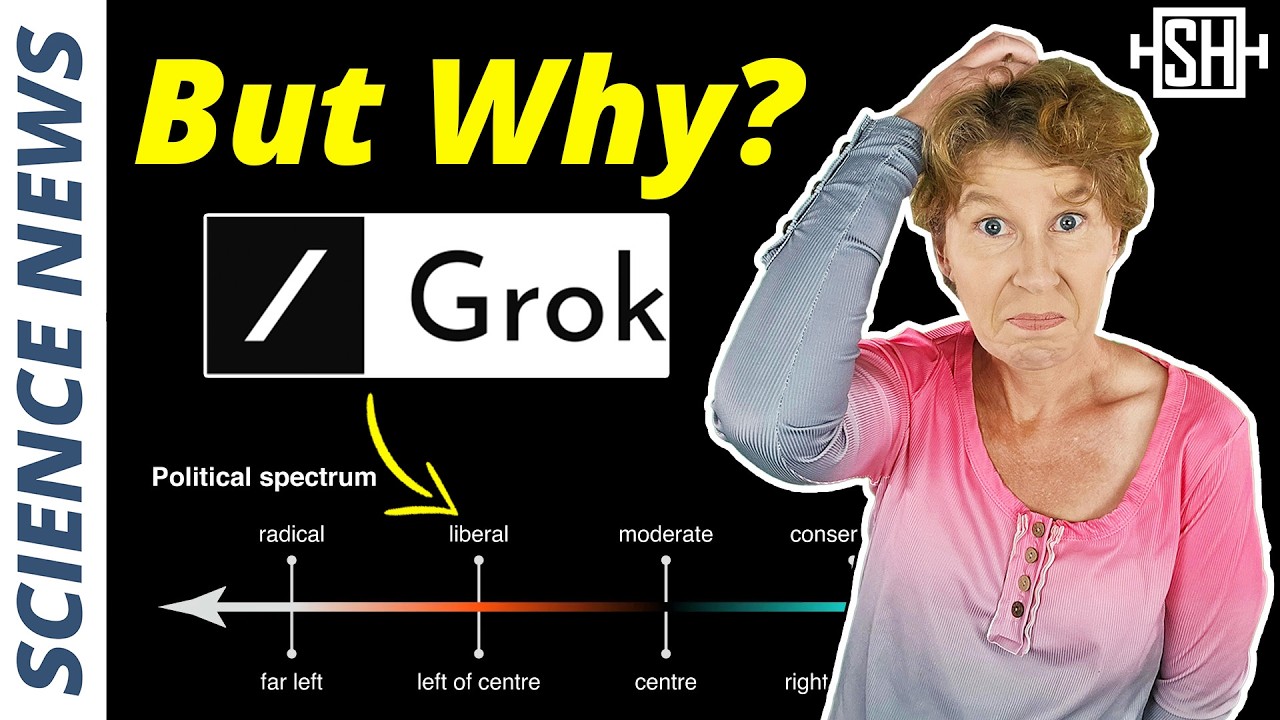

A recent study by David Rozado from New Zealand assessed the political orientation of 24 popular large language models, including ChatGPT, Gemini, Meta’s Llama, and Grok. The study used several political orientation tests to measure the models’ leanings. The narrator humorously shares their own results on these tests, which placed them as a right libertarian, whereas most AI models clustered around left-liberal positions.

The video outlines the findings from several political tests, including the Political Coordinates Test and the Political Compass Test, on which most AI models leaned toward left-liberal positions. Interestingly, Grok was reported as the most left-leaning model, while Perplexity was the only one that leaned right. The narrator suggests that these leanings could reflect the data the models were trained on, which may contain more left-leaning content.

Another possible explanation for the observed bias is the guidelines AI developers impose to keep model outputs non-offensive. The narrator posits that the political left tends to be more sensitive about language use, so tuning models to follow these guidelines could push them toward left-leaning perspectives. The study’s author supports this notion, noting that the models’ political preferences became more pronounced after supervised fine-tuning and reinforcement learning.

In conclusion, the video emphasizes the systematic trend of large language models towards left-liberal ideologies, raising questions about the implications of such biases in AI. The narrator humorously suggests the need for a “political quantum orientation” that allows for multiple political positions simultaneously.

ChatGPT has frequently been accused of leaning toward the political left. A new study tested the political orientation of other popular large language models, including GPT, Claude, Gemini, and Grok. The results were… somewhat surprising. Let’s have a look.

Correction to what I say at 00:55: Obviously, what I say makes no sense. It answers both queries, but only one has a moderation flag. Sorry about that. Please understand that when I record the video, I cannot see the images that will later be shown alongside what I say.