The video introduces infinite attention (called Infini-attention in the paper), a technique developed by Google researchers that lets Transformer-based large language models process arbitrarily long input sequences with bounded memory and computation. Standard Transformers can only attend within a fixed context window. Infinite attention adds a compressive memory to the vanilla attention mechanism, so a single Transformer block combines masked local attention over the current segment with long-term linear attention over everything seen before.

The video then walks through the mechanics of attention. Each token is projected into a query, a key, and a value. Each output is a weighted sum of the values, where the weights reflect how well that token's query matches every key. The video contrasts this with a dense layer: a dense layer's connection weights are fixed after training, while attention computes its mixing weights dynamically from the input. The bottleneck is the attention matrix. Because the softmax normalizes each query's scores over all keys, the full N × N matrix of query-key scores must be computed, so cost grows quadratically with the sequence length N. This limits how long a sequence the model can handle in practice.
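The computation described above can be sketched in pure Python as single-head scaled dot-product attention. The 1/√d_k scaling is the standard Transformer choice and is assumed here. The function and parameter names are illustrative and do not come from the video. The `scores` matrix has one entry per query-key pair, which is the source of the quadratic cost.

```python
import math

def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m), both stored as lists of rows."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(m):
    return [list(col) for col in zip(*m)]

def softmax(row):
    # Subtract the max before exponentiating for numerical stability.
    mx = max(row)
    exps = [math.exp(x - mx) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def softmax_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product attention over a sequence x (N x d_model)."""
    q = matmul(x, w_q)  # N x d_k queries
    k = matmul(x, w_k)  # N x d_k keys
    v = matmul(x, w_v)  # N x d_v values
    d_k = len(w_k[0])
    # N x N score matrix: every query is compared with every key,
    # so time and memory grow quadratically with sequence length N.
    scores = matmul(q, transpose(k))
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    # Each output row is a weighted sum of value rows. Unlike a dense layer,
    # the mixing weights are recomputed from the input on every call.
    return matmul(weights, v), weights
```

With N tokens, `weights` is an N × N list of lists, so doubling N roughly quadruples its size.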

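The discussion then reviews earlier attempts to work around this limit, such as linear attention and Transformer-XL. Linear attention drops the softmax and multiplies (feature-mapped) queries and keys directly. Because matrix multiplication is associative, the keys and values can be combined first. This avoids the N × N matrix and makes cost linear in sequence length. In practice, however, linear attention has generally underperformed softmax attention, which the video attributes to losing the softmax's nonlinearity. The video also covers associative memory, in which vectors are stored under keys and later retrieved by querying with a similar key, loosely analogous to how the human brain recalls information from memory.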
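The associativity argument can be checked numerically. The sketch below assumes the ELU + 1 feature map commonly used for linear attention, which the video does not specify. It computes the same non-causal linear attention twice. The first version builds the N × N score matrix. The second first accumulates keys and values into a small d_k × d_v matrix. That matrix is an associative memory: each write adds the outer product of a key's features with its value, and each read queries it. `AssociativeMemory` and the other names are hypothetical.

```python
import math

def phi(vec):
    # ELU(x) + 1 keeps every feature strictly positive (assumed feature map).
    return [x + 1.0 if x > 0 else math.exp(x) for x in vec]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def linear_attention_quadratic(qs, ks, vs):
    """Reference form (phi(Q) phi(K)^T) V: still builds N x N scores."""
    out = []
    for q in qs:
        scores = [dot(phi(q), phi(k)) for k in ks]
        total = sum(scores)
        out.append([sum(s * v[j] for s, v in zip(scores, vs)) / total
                    for j in range(len(vs[0]))])
    return out

class AssociativeMemory:
    """Fixed-size store: m accumulates outer products phi(k) v^T, z sums phi(k)."""
    def __init__(self, d_k, d_v):
        self.m = [[0.0] * d_v for _ in range(d_k)]
        self.z = [0.0] * d_k

    def write(self, k, v):
        fk = phi(k)
        for i in range(len(fk)):
            self.z[i] += fk[i]
            for j in range(len(v)):
                self.m[i][j] += fk[i] * v[j]

    def read(self, q):
        # Requires at least one prior write, otherwise the denominator is zero.
        fq = phi(q)
        denom = dot(fq, self.z)
        return [sum(fq[i] * self.m[i][j] for i in range(len(fq))) / denom
                for j in range(len(self.m[0]))]

def linear_attention_via_memory(qs, ks, vs):
    """Same result computed as phi(Q) (phi(K)^T V): cost linear in N."""
    mem = AssociativeMemory(len(ks[0]), len(vs[0]))
    for k, v in zip(ks, vs):
        mem.write(k, v)
    return [mem.read(q) for q in qs]
```

If a single pair is stored and then read back with any query, the result is the stored value up to rounding, because the normalization divides out the query-key similarity. With many stored pairs, a read returns a similarity-weighted blend of the values.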

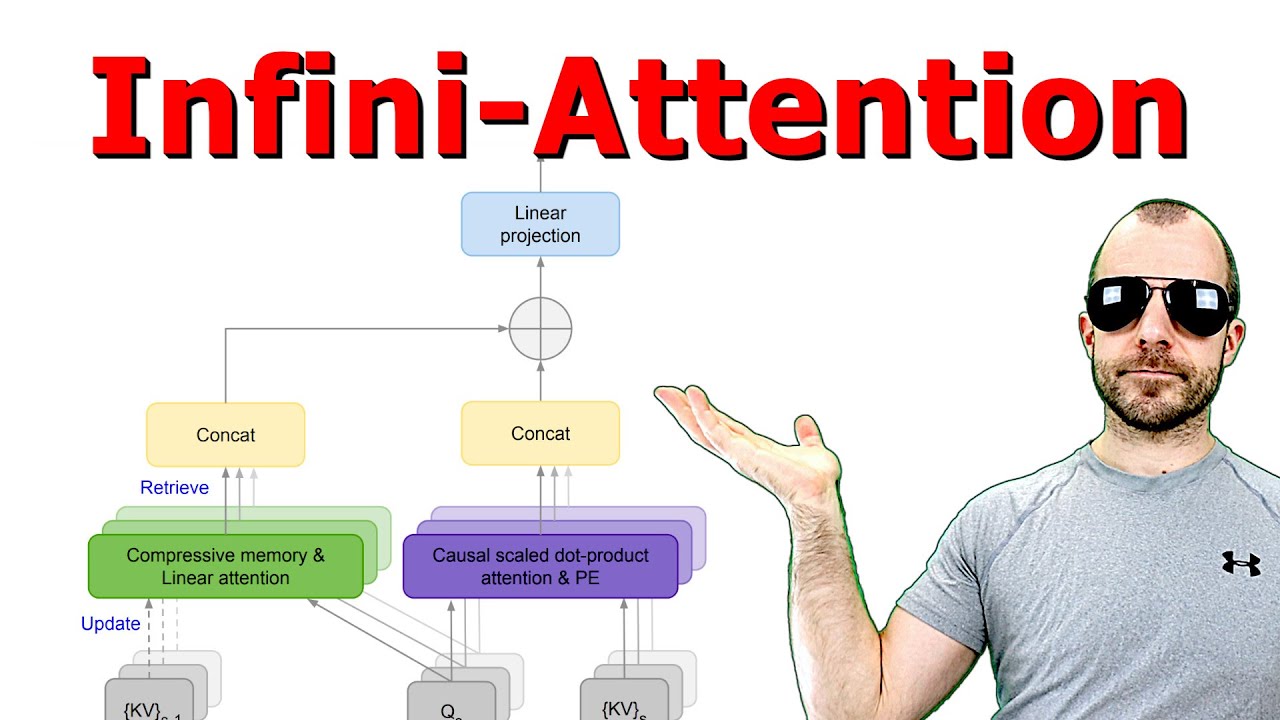

The core of infinite attention is a two-part design. Regular (masked softmax) attention handles the current segment, while a compressive memory stores and retrieves information from past segments. The memory is an associative memory read out with linear attention. After each segment, that segment's key-value associations are added into a fixed-size memory matrix, and the next segment's queries retrieve from it. A learned gate then mixes the memory readout with the local attention output. Because the memory's size does not depend on how many segments have been processed, the model can draw on a much longer context at bounded cost.
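Below is a minimal single-head sketch of the segment loop. It assumes the formulation in the Infini-attention paper and simplifies it as follows:

- There are no learned projections, positional encodings, or multiple heads.
- The feature map is ELU + 1.
- The memory uses the plain additive update. The paper also describes a delta-rule variant, which is not shown.
- A scalar gate `beta` would be learned in a real model but is fixed here.

Each segment's queries read from the memory built by *previous* segments before that segment's own keys and values are written in. `InfiniAttentionSketch` and its methods are hypothetical names.

```python
import math

def phi(vec):
    # ELU + 1 feature map (strictly positive); assumed, as in linear attention.
    return [x + 1.0 if x > 0 else math.exp(x) for x in vec]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def causal_softmax_attention(qs, ks, vs):
    """Masked local attention: position t attends only to positions <= t."""
    d_k = len(ks[0])
    out = []
    for t, q in enumerate(qs):
        scores = [dot(q, k) / math.sqrt(d_k) for k in ks[: t + 1]]
        mx = max(scores)
        exps = [math.exp(s - mx) for s in scores]
        total = sum(exps)
        # zip stops after t + 1 values, matching the causal mask.
        out.append([sum(e * v[j] for e, v in zip(exps, vs)) / total
                    for j in range(len(vs[0]))])
    return out

class InfiniAttentionSketch:
    """Local causal attention plus a compressive memory, mixed by a sigmoid gate."""
    def __init__(self, d_k, d_v, beta=0.0):
        self.m = [[0.0] * d_v for _ in range(d_k)]  # fixed d_k x d_v memory
        self.z = [0.0] * d_k                         # normalization term
        self.beta = beta                             # would be learned in practice

    def retrieve(self, q):
        fq = phi(q)
        denom = dot(fq, self.z)
        if denom == 0.0:  # nothing stored yet (first segment)
            return [0.0] * len(self.m[0])
        return [sum(fq[i] * self.m[i][j] for i in range(len(fq))) / denom
                for j in range(len(self.m[0]))]

    def update(self, ks, vs):
        # Additive update: m += phi(K)^T V and z += sum over t of phi(k_t).
        for k, v in zip(ks, vs):
            fk = phi(k)
            for i in range(len(fk)):
                self.z[i] += fk[i]
                for j in range(len(v)):
                    self.m[i][j] += fk[i] * v[j]

    def process_segment(self, qs, ks, vs):
        local = causal_softmax_attention(qs, ks, vs)
        # Read from memory of earlier segments first, then store this segment.
        mem = [self.retrieve(q) for q in qs]
        self.update(ks, vs)
        g = 1.0 / (1.0 + math.exp(-self.beta))  # sigmoid gate
        return [[g * a + (1.0 - g) * b for a, b in zip(ra, rb)]
                for ra, rb in zip(mem, local)]
```

However many segments are processed, `m` stays d_k × d_v and `z` stays length d_k, which is the bounded-memory property. The gate alone decides how much each output relies on the memory rather than on local attention.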

Overall, the video gives a detailed analysis of infinite attention and its potential to let Transformers process unbounded input sequences. The approach combines existing ideas, linearized attention and associative memory. The narrator is skeptical that it will prove more effective than traditional recurrent neural networks, which also compress the past into a fixed-size state. The video encourages viewers to read the paper, which offers a view of how attention mechanisms in large language models continue to evolve.