The video discusses LlamaIndex workflows for building asynchronous AI agents, emphasizing their event-driven approach and higher-level abstractions compared to LangChain, which focuses on graphs of interconnected nodes. The presenter demonstrates the practical setup of an AI research agent, highlighting the importance of asynchronous programming for efficiency and scalability while acknowledging the learning curve for developers new to this paradigm.

In the video, the presenter explores LlamaIndex workflows for building asynchronous AI agents, highlighting their event-driven approach to constructing agentic flows. The presenter compares LlamaIndex to LangChain, noting that while both libraries have their strengths, LlamaIndex offers higher-level abstractions whose structure may suit some developers better. The structural difference is significant: LlamaIndex focuses on defining steps and the events that trigger them, whereas LangChain (in its LangGraph library) emphasizes building a graph of interconnected nodes and edges.

The presenter emphasizes the importance of asynchronous programming in LlamaIndex, arguing that it improves the performance and scalability of AI agents. With asynchronous code, the program does not block while waiting for a response from a large language model (LLM); it can make progress on other tasks in the meantime. This is particularly beneficial for agents that rely on LLMs, which can take time to process requests. However, the presenter acknowledges that the learning curve may be steep for developers unfamiliar with asynchronous programming.
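To make the non-blocking argument concrete, here is a minimal sketch using only Python's standard `asyncio` module. It is not code from the video: `fake_llm_call` is a hypothetical stand-in that simulates LLM latency with a sleep, and the prompts and delay are arbitrary. The point it illustrates is that several waiting calls overlap, so total time is close to the slowest call rather than the sum of all calls.

```python
import asyncio
import time


async def fake_llm_call(prompt: str, delay: float = 0.2) -> str:
    """Hypothetical stand-in for a network-bound LLM request."""
    # While this coroutine sleeps, the event loop is free to run others.
    await asyncio.sleep(delay)
    return f"answer to: {prompt}"


async def run_concurrently(prompts: list[str]) -> list[str]:
    # gather() schedules every call at once and returns results in input order.
    return await asyncio.gather(*(fake_llm_call(p) for p in prompts))


def timed_demo() -> tuple[list[str], float]:
    """Run three simulated calls and report the results and elapsed seconds."""
    start = time.perf_counter()
    results = asyncio.run(run_concurrently(["a", "b", "c"]))
    return results, time.perf_counter() - start


if __name__ == "__main__":
    results, elapsed = timed_demo()
    print(results, f"{elapsed:.2f}s")
```

Run one after another, the three simulated calls would take at least 0.6 seconds; run concurrently, they finish in roughly the time of a single call.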

The video then moves to a practical demonstration of building an AI research agent with LlamaIndex. The presenter outlines the prerequisites and walks through the setup, including initializing connections to OpenAI and Pinecone for embedding and indexing. The agent uses several tools, such as a RAG search tool and a web search tool, to gather information and conduct research. The presenter stresses that functions and tools should be defined asynchronously to maximize the agent's efficiency.
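As a rough illustration of what defining tools asynchronously can look like, the sketch below declares two coroutine tools and a small registry that dispatches by name. Everything here is assumed for illustration: `rag_search`, `web_search`, `TOOLS`, and `call_tool` are hypothetical names, the sleeps stand in for real embedding, index, and HTTP round trips, and no OpenAI, Pinecone, or LlamaIndex calls are made.

```python
import asyncio
from typing import Awaitable, Callable


async def rag_search(query: str) -> str:
    """Hypothetical RAG tool; a real one would embed the query and search a vector index."""
    await asyncio.sleep(0.01)  # placeholder for the embedding and index round trip
    return f"[rag] passages for '{query}'"


async def web_search(query: str) -> str:
    """Hypothetical web search tool; a real one would call a search API."""
    await asyncio.sleep(0.01)  # placeholder for the HTTP request
    return f"[web] results for '{query}'"


# Registry mapping tool names to async callables (an assumed layout).
TOOLS: dict[str, Callable[[str], Awaitable[str]]] = {
    "rag_search": rag_search,
    "web_search": web_search,
}


async def call_tool(name: str, query: str) -> str:
    """Look up a tool by name and await it, reporting unknown names as text."""
    tool = TOOLS.get(name)
    if tool is None:
        return f"unknown tool: {name}"
    return await tool(query)


if __name__ == "__main__":
    print(asyncio.run(call_tool("rag_search", "async agents")))
```

Because each tool is a coroutine, an agent can await several of them together instead of waiting on each in turn.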

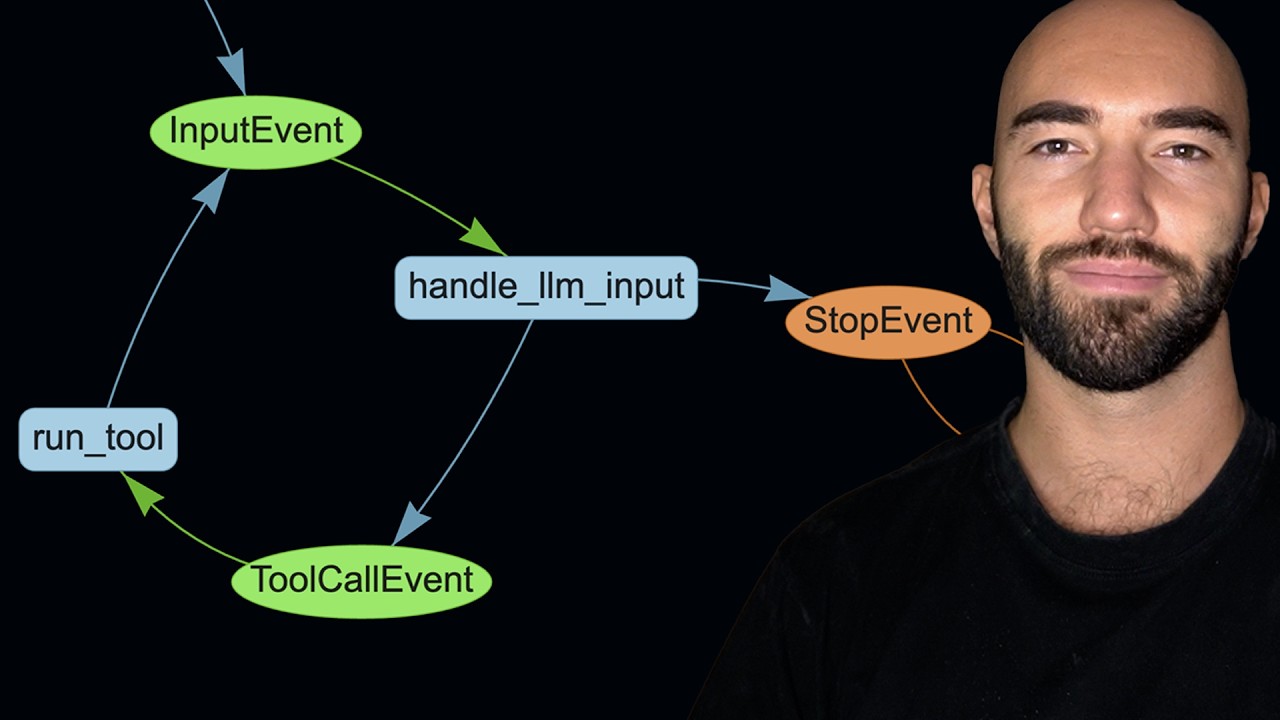

As the presenter builds the workflow, they explain the role of events in LlamaIndex, such as the start event that triggers the workflow and the stop event that ends it. The presenter also discusses how to handle input events and tool calls, detailing how the agent processes user queries and generates responses. The workflow loops through its steps, letting the agent gather information iteratively until it can give the user a final answer.
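The loop described above can be sketched in plain Python. This is an illustrative model of the event-driven pattern, not the LlamaIndex API: the event classes are local dataclasses that only borrow the names from the summary, `fake_llm` is a scripted stand-in that requests one tool call and then answers, and the `STEPS` dispatch table is an assumption about how events could be routed to steps.

```python
import asyncio
from dataclasses import dataclass


@dataclass
class StartEvent:
    query: str


@dataclass
class InputEvent:
    messages: list[str]


@dataclass
class ToolCallEvent:
    messages: list[str]
    tool_name: str
    tool_input: str


@dataclass
class StopEvent:
    result: str


async def fake_llm(messages: list[str]) -> tuple:
    """Scripted stand-in for an LLM: ask for one search, then answer."""
    await asyncio.sleep(0)
    if not any(m.startswith("tool:") for m in messages):
        return ("tool", "search", messages[0])
    return ("final", f"Answer based on {len(messages) - 1} tool result(s)")


async def prepare_input(ev: StartEvent) -> InputEvent:
    # The start event carries the user query into the message history.
    return InputEvent(messages=[ev.query])


async def handle_llm_input(ev: InputEvent):
    # The model either requests a tool or produces the final answer.
    decision = await fake_llm(ev.messages)
    if decision[0] == "tool":
        return ToolCallEvent(ev.messages, decision[1], decision[2])
    return StopEvent(result=decision[1])


async def handle_tool_call(ev: ToolCallEvent) -> InputEvent:
    # Tool output is appended to the history and fed back to the model.
    await asyncio.sleep(0)
    output = f"tool:{ev.tool_name} found notes on '{ev.tool_input}'"
    return InputEvent(messages=ev.messages + [output])


# Each event type triggers exactly one step (hypothetical dispatch table).
STEPS = {
    StartEvent: prepare_input,
    InputEvent: handle_llm_input,
    ToolCallEvent: handle_tool_call,
}


async def run_workflow(query: str, max_steps: int = 10) -> str:
    event = StartEvent(query=query)
    for _ in range(max_steps):
        event = await STEPS[type(event)](event)
        if isinstance(event, StopEvent):
            return event.result
    raise RuntimeError("workflow did not reach a stop event")


if __name__ == "__main__":
    print(asyncio.run(run_workflow("What are LlamaIndex workflows?")))
```

The cycle between the input and tool-call events is what lets the agent iterate: each tool result re-enters the model step until a stop event is returned. The `max_steps` guard is an added safety limit, not something the summary mentions.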

In conclusion, the presenter reflects on the capabilities of LlamaIndex workflows, noting their potential for building efficient and scalable AI agents. While acknowledging that LlamaIndex and LangChain each have their own advantages, the presenter singles out the asynchronous nature of LlamaIndex as a valuable feature. The presenter wants to explore LlamaIndex further before stating a definitive preference between the two libraries, and stresses that the choice of framework for building AI agents should follow from the specific needs of the project.