The video showcases the LlamaOCR project by Together AI, which uses the Llama 3.2 Vision model for optical character recognition (OCR) through an easy-to-use npm package. It also walks through a Python reimplementation that converts images into editable text while preserving formatting. The presenter discusses running the model locally and applying it to web scraping, and encourages viewers to experiment with OCR in their own projects.

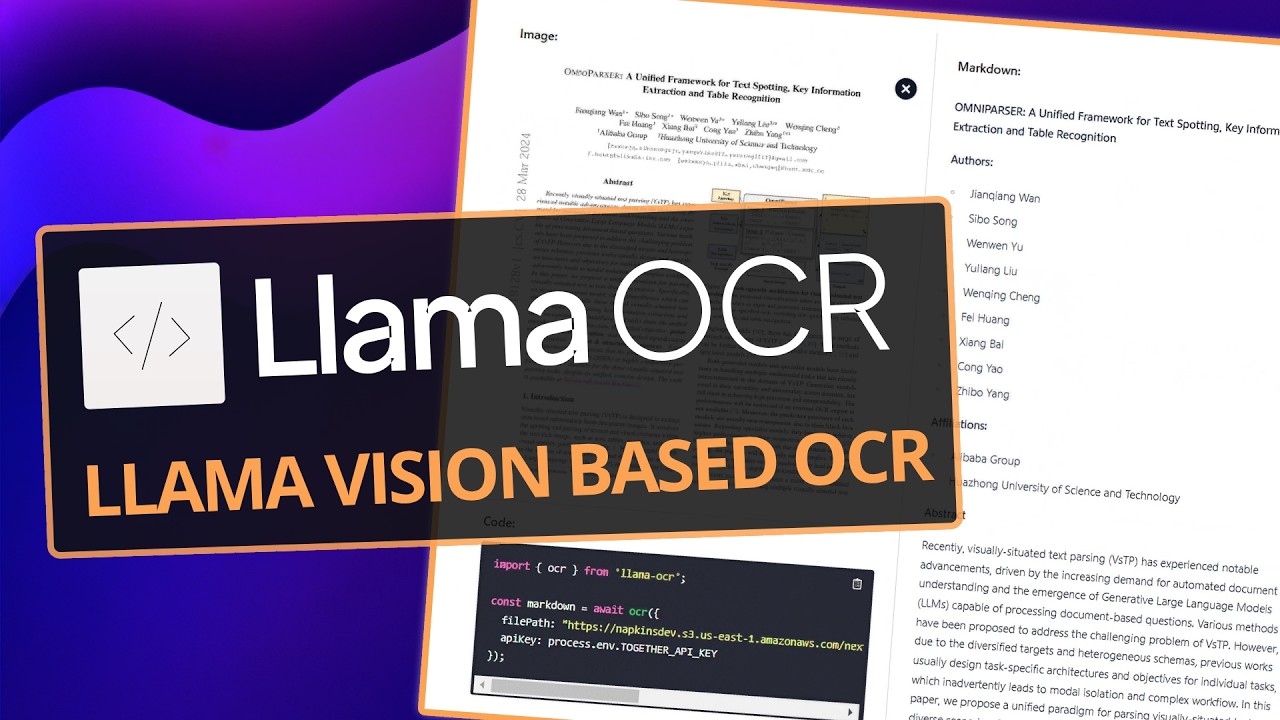

The video discusses the LlamaOCR project from Together AI, which is built on the Llama 3.2 Vision model, specifically the 90-billion-parameter version. The project ships as an npm package: users import LlamaOCR, pass in a file path, and supply a Together API key. The presenter demonstrates the system by converting screenshots and receipts into editable text. The demo shows that the model retains formatting and that its output varies from run to run because generation is stochastic.

The video then moves to a coding segment in which the presenter recreates the LlamaOCR functionality in Python. Using the Together API, the presenter shows how to choose among the available vision models and how to pass images in, either as URLs or as local files. Local files must be base64-encoded with a MIME type that matches their format (JPEG, PNG, etc.). The presenter also points out that an image's size determines how many tokens it consumes, which in turn drives the cost of each API call.
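As a rough sketch of the image-handling step described above, the Python below passes remote URLs through unchanged, turns local files into base64 data URLs, and wraps the result in an OpenAI-style chat message. The model identifier, the prompt wording, and the helper names (`image_to_url`, `build_messages`, `run_ocr`) are assumptions for illustration, not the presenter's code. `run_ocr` assumes the `together` Python SDK is installed and `TOGETHER_API_KEY` is set. Token-cost estimation is left out because the exact formula is not given in the video.

```python
import base64
import mimetypes
from pathlib import Path

# Assumed model identifier; check Together's model list for the current name.
DEFAULT_MODEL = "meta-llama/Llama-3.2-90B-Vision-Instruct-Turbo"

# Hypothetical prompt that asks for the OCR result only, with no commentary.
OCR_PROMPT = (
    "Convert the provided image into Markdown. Return only the Markdown, "
    "with no explanations or surrounding text."
)


def image_to_url(source: str) -> str:
    """Remote URLs pass through unchanged; local files become base64 data URLs."""
    if source.startswith(("http://", "https://")):
        return source
    path = Path(source)
    # The MIME type comes from the file extension (e.g. .png -> image/png).
    mime, _ = mimetypes.guess_type(path.name)
    if mime is None or not mime.startswith("image/"):
        raise ValueError(f"cannot determine image type for {source!r}")
    encoded = base64.b64encode(path.read_bytes()).decode("ascii")
    return f"data:{mime};base64,{encoded}"


def build_messages(source: str, prompt: str = OCR_PROMPT) -> list:
    """Build one user message holding a text part and an image part."""
    return [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": prompt},
                {"type": "image_url", "image_url": {"url": image_to_url(source)}},
            ],
        }
    ]


def run_ocr(source: str, model: str = DEFAULT_MODEL) -> str:
    """Send one image to Together and return the model's text reply."""
    # Imported lazily so the helpers above work without the SDK installed.
    from together import Together

    client = Together()  # reads TOGETHER_API_KEY from the environment
    response = client.chat.completions.create(model=model, messages=build_messages(source))
    return response.choices[0].message.content
```

Keeping URL handling and encoding in a separate function makes it easy to test without network access. It also lets the same message builder serve both remote and local images.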

Digging deeper into the code, the presenter compares the prompts used to extract text from images. The initial implementation often returned descriptive commentary about the image instead of just the OCR output. To fix this, the presenter writes a custom image processor class that gives finer control over the prompt and the output format, which produces cleaner Markdown. The video also suggests running OCR several times on the same image and taking the consensus of the outputs to improve accuracy.
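The presenter's image processor class is not reproduced here. The sketch below only illustrates two ideas from this part of the video, under stated assumptions: stripping the code fence a model sometimes wraps around its Markdown reply, and combining several OCR passes. The consensus rule is one simple choice made for illustration, not necessarily the one used in the video. It takes a line-by-line majority vote and falls back to the most common whole output when the passes disagree on line count. The function names are hypothetical.

```python
import re
from collections import Counter

# Matches a reply wrapped in a single ```markdown / ```md / bare ``` fence.
_FENCE = re.compile(r"^\s*```(?:markdown|md)?\s*\n(.*?)\n\s*```\s*$", re.DOTALL)


def clean_markdown(raw: str) -> str:
    """Remove a surrounding code fence, if present, and outer whitespace."""
    match = _FENCE.match(raw)
    return (match.group(1) if match else raw).strip()


def consensus(outputs: list) -> str:
    """Combine several OCR passes of the same image by majority vote.

    When every pass has the same number of lines, each line position is
    voted on separately. Otherwise the most common full output wins.
    Ties go to the earliest pass (Counter keeps first-seen order).
    """
    if not outputs:
        raise ValueError("need at least one OCR output")
    cleaned = [clean_markdown(o).splitlines() for o in outputs]
    if len({len(lines) for lines in cleaned}) != 1:
        whole = Counter("\n".join(lines) for lines in cleaned)
        return whole.most_common(1)[0][0]
    voted = [Counter(variants).most_common(1)[0][0] for variants in zip(*cleaned)]
    return "\n".join(voted)


passes = [
    "```markdown\n# Receipt\nMilk 2.99\nTotal 5.48\n```",
    "# Receipt\nMi1k 2.99\nTotal 5.48",
    "# Receipt\nMilk 2.99\nTotal 5.43",
]
print(consensus(passes))
```

With three passes, a misread that appears in only one pass (here `Mi1k` and `5.43`) is outvoted by the other two. Line-level voting is crude, though. Passes that split or merge lines differently fall back to whole-output voting, which only helps when identical outputs recur.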

The discussion then turns to running the model locally with the Ollama version, particularly the 11-billion-parameter model, which is far more practical on a home computer than the 90B model. The presenter suggests that a local setup suits users who want to perform OCR without depending on a cloud service. They also explore OCR for web scraping: images on a page are processed so that their information can be extracted alongside the page's text.
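A minimal sketch of both ideas, assuming a local Ollama server on its default port (11434) with the `llama3.2-vision` model pulled (its default tag is the 11B variant). `build_ollama_request` and `ocr_locally` target Ollama's `/api/chat` endpoint, which accepts base64-encoded images in a per-message `images` list. `ImageCollector` is a hypothetical helper that pulls `<img>` URLs and alt text out of scraped HTML using only the standard library. All three names are illustrative, not from the video.

```python
import base64
import json
import urllib.request
from html.parser import HTMLParser
from urllib.parse import urljoin

OLLAMA_URL = "http://localhost:11434/api/chat"  # Ollama's default local endpoint
LOCAL_MODEL = "llama3.2-vision"  # default tag is the 11B model


def build_ollama_request(image_bytes: bytes, prompt: str, model: str = LOCAL_MODEL) -> dict:
    """Chat payload for Ollama: images go in the message as base64 strings."""
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": prompt,
                "images": [base64.b64encode(image_bytes).decode("ascii")],
            }
        ],
        "stream": False,  # ask for one JSON object instead of a token stream
    }


def ocr_locally(image_bytes: bytes, prompt: str) -> str:
    """POST to a running Ollama server and return the assistant's text."""
    body = json.dumps(build_ollama_request(image_bytes, prompt)).encode("utf-8")
    req = urllib.request.Request(
        OLLAMA_URL, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)["message"]["content"]


class ImageCollector(HTMLParser):
    """Collect (absolute_url, alt_text) for every <img> on a scraped page."""

    def __init__(self, base_url: str):
        super().__init__()
        self.base_url = base_url
        self.images = []

    def handle_starttag(self, tag, attrs):
        # Self-closing <img/> tags also arrive here via handle_startendtag.
        if tag != "img":
            return
        attrs = dict(attrs)
        src = attrs.get("src")
        if src:
            # Resolve relative paths against the page URL.
            self.images.append((urljoin(self.base_url, src), attrs.get("alt") or ""))
```

In practice you would feed a page's HTML to `ImageCollector`, download each collected URL, and pass the bytes to `ocr_locally`. You would then store the OCR text next to the page's own text and the image's alt text. Downloading, error handling, and rate limiting are deliberately left out.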

In conclusion, the video highlights how large language models have advanced OCR, making it easier to extract information from varied formats, including charts and diagrams. The presenter encourages viewers to experiment with the provided code and to consider how OCR could fit into their own projects, particularly retrieval-augmented generation (RAG) pipelines. The video closes by inviting feedback and suggestions for future content from the community.