The video explores an experiment that uses large language models (LLMs) to perform in-context adaptive learning on a complex mathematical classification task. In it, models iteratively generate and refine natural language predictive rules from sampled training data. Some models showed modest gains in accuracy, but overall results were mixed, highlighting challenges with consistent learning and instruction-following. The creator shares the code and previews ongoing work for further research.

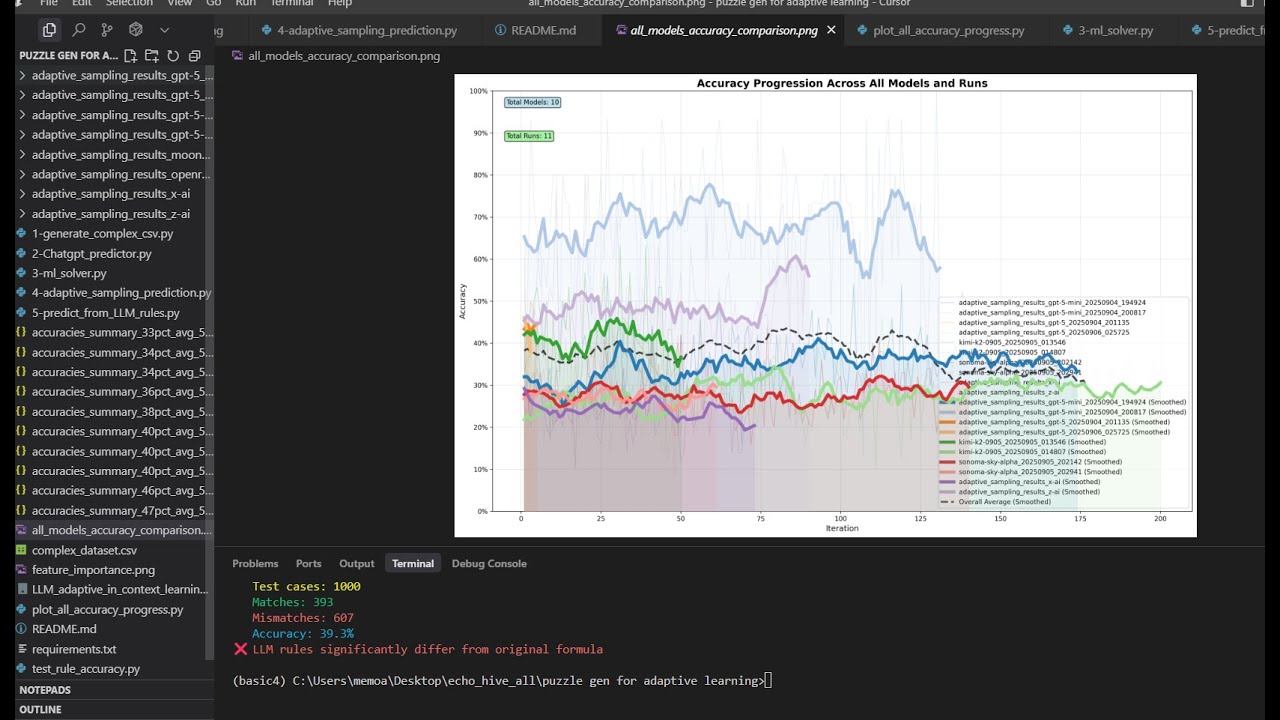

In this video, the creator presents a new learning script designed to test in-context adaptive learning using large language models (LLMs). The experiment uses a complex dataset in which five numerical inputs are mapped to categorical outputs through intricate mathematical rules. The goal is to see whether LLMs can learn to predict these categories accurately by iteratively refining their understanding based on sampled training data. Initial results with models such as Sonoma Sky Alpha showed flat learning curves, while others, such as GPT-5 mini, showed a clearer upward trend in accuracy over multiple runs.

The dataset creation process is explained in detail, highlighting the complexity of the underlying logic, which combines sums, products, modulo operations, and conditional statements to produce the output categories. The creator also describes asking GPT-5 to write Python scripts that predict the outcomes. Rather than reasoning through the rules in natural language, some of these scripts relied on machine learning. Their accuracy varied, with the machine learning approach reaching up to 95%. This shows that the problem is solvable but hard for LLMs to crack through reasoning alone.
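The video does not spell out the exact generating rules, so the following is only a minimal, hypothetical sketch of the *kind* of generator described: five integer inputs mapped to a category by mixing sums, products, modulo, and conditionals. The labels `A`–`D`, the input range 0–20, and every constant in `label_row` are illustrative assumptions, not the creator's actual logic.

```python
import random

# Hypothetical labels; the video's real categories are not specified.
CATEGORIES = ["A", "B", "C", "D"]


def label_row(x: list[int]) -> str:
    """Illustrative rule (NOT the video's): combines a sum, products,
    modulo operations, and conditionals to pick a category."""
    a, b, c, d, e = x
    s = a + b + c          # partial sum of the first three inputs
    p = (d * e) % 7        # product of the last two inputs, reduced mod 7
    if s % 3 == 0 and p > 3:
        return "A"
    if (a * b) % 5 == c % 5:
        return "B"
    if s > d + e:
        return "C"
    return "D"


def make_dataset(n_rows: int, seed: int = 0) -> list[tuple[list[int], str]]:
    """Generate n_rows random five-input rows with their category labels."""
    rng = random.Random(seed)  # seeded so the dataset is reproducible
    rows = []
    for _ in range(n_rows):
        x = [rng.randint(0, 20) for _ in range(5)]
        rows.append((x, label_row(x)))
    return rows
```

Even a toy rule like this is hard to recover from a handful of rows by inspection, because the modulo terms make nearby inputs land in unrelated categories.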

The core of the project is an adaptive sampling prediction script that tests various models by feeding them small batches of training data and asking them to generate predictive rules in natural language. These rules are then used as prompts for subsequent predictions, with accuracy tracked over iterations. The results show mixed success: some models improve slightly, but many plateau or even decline after a certain point. The creator notes that feeding too many rows at once might cause this performance drift and that instruction-following capabilities vary across models, affecting their consistency.
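The loop described above can be sketched as follows. This is an assumption-laden outline, not the creator's script: the model calls are abstracted as two hypothetical callables, `refine_rule` (asks the model to update its natural language rule given a sampled batch) and `predict` (asks the model to classify one row using the current rule). `batch_size` is the knob the creator suspects matters, since feeding too many rows at once may cause drift.

```python
import random
from typing import Callable, Sequence

Row = tuple[list[int], str]  # (five inputs, category label)


def adaptive_rule_learning(
    train: Sequence[Row],
    test: Sequence[Row],
    refine_rule: Callable[[str, Sequence[Row]], str],  # hypothetical LLM wrapper
    predict: Callable[[str, list[int]], str],          # hypothetical LLM wrapper
    iterations: int = 5,
    batch_size: int = 10,
    seed: int = 0,
) -> tuple[str, list[float]]:
    """Refine a natural language rule from small sampled batches and
    record held-out accuracy after each round."""
    rng = random.Random(seed)
    rule = "No rule yet."
    history: list[float] = []
    for _ in range(iterations):
        # Sample a small batch of training rows for this round.
        batch = rng.sample(list(train), min(batch_size, len(train)))
        # The current rule plus the new batch go into the next prompt.
        rule = refine_rule(rule, batch)
        # Score the updated rule on held-out rows.
        correct = sum(predict(rule, x) == y for x, y in test)
        history.append(correct / len(test))
    return rule, history
```

Passing the model calls in as functions keeps the loop independent of any particular API client, so the same accuracy curve can be produced for each model under comparison, or for a trivial stub when testing the harness itself.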

A key feature of the project is the ability to convert the natural language rules generated by the models into executable scripts, allowing deterministic evaluation of the rules’ effectiveness. This dual approach—using models to generate rules and then testing those rules programmatically—provides insight into the models’ reasoning capabilities versus their ability to follow instructions reliably. The creator also mentions ongoing work on a new version of the system that shows more promise and invites viewers to explore the code and results available on Patreon.
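One way to make rule evaluation deterministic is sketched below, under the hypothetical convention that the model is asked to translate its natural language rule into Python source defining a function named `predict(x)`. The function names here are illustrative, not taken from the creator's code. Because `exec` runs arbitrary generated code, this should only be done in a sandboxed environment.

```python
from typing import Callable, Sequence

Row = tuple[list[int], str]  # (five inputs, category label)


def compile_rule(source: str) -> Callable[[list[int]], str]:
    """Turn model-generated Python source into a callable.
    Assumes (hypothetically) the source defines predict(x) -> str.
    WARNING: exec() runs arbitrary code; sandbox it in practice."""
    namespace: dict = {}
    exec(source, namespace)
    fn = namespace.get("predict")
    if not callable(fn):
        raise ValueError("generated script does not define predict(x)")
    return fn


def score_rule(source: str, rows: Sequence[Row]) -> float:
    """Deterministically score a compiled rule: fraction of rows it labels correctly."""
    predict = compile_rule(source)
    correct = sum(predict(list(x)) == y for x, y in rows)
    return correct / len(rows)
```

Scoring the compiled rule separately from the model's own predictions helps separate the two failure modes the video mentions: a rule that is wrong, versus a model that has a reasonable rule but does not apply it consistently.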

Overall, the video offers a thorough exploration of using LLMs for adaptive learning on complex mathematical classification tasks. While the results are somewhat disappointing in terms of clear, consistent learning progress, the project demonstrates that LLMs can surpass random chance and sometimes improve accuracy through iterative prompting. The creator encourages experimentation and collaboration through Patreon, providing extensive resources and support for those interested in advancing this line of research.