The video introduces Mamba, a new neural network architecture that claims to outperform transformers in language modeling due to its computational efficiency and innovative handling of memory transfer. It also discusses the controversy surrounding Mamba’s rejection from the ICLR 2024 conference, highlighting the ongoing debates in the academic community regarding evaluation metrics and peer review practices.

The video introduces Mamba, a new neural network architecture that is reported to outperform transformers in language modeling. Transformers have dominated the field for seven years, and the video presents Mamba as a potential replacement, with promising results even at smaller model sizes. Notably, Mamba is more computationally efficient: for an input sequence of n tokens it requires O(n log(n)) operations, compared to the O(n²) needed by transformers. This efficiency opens the door to larger context sizes in language modeling, making Mamba an exciting development in the field.

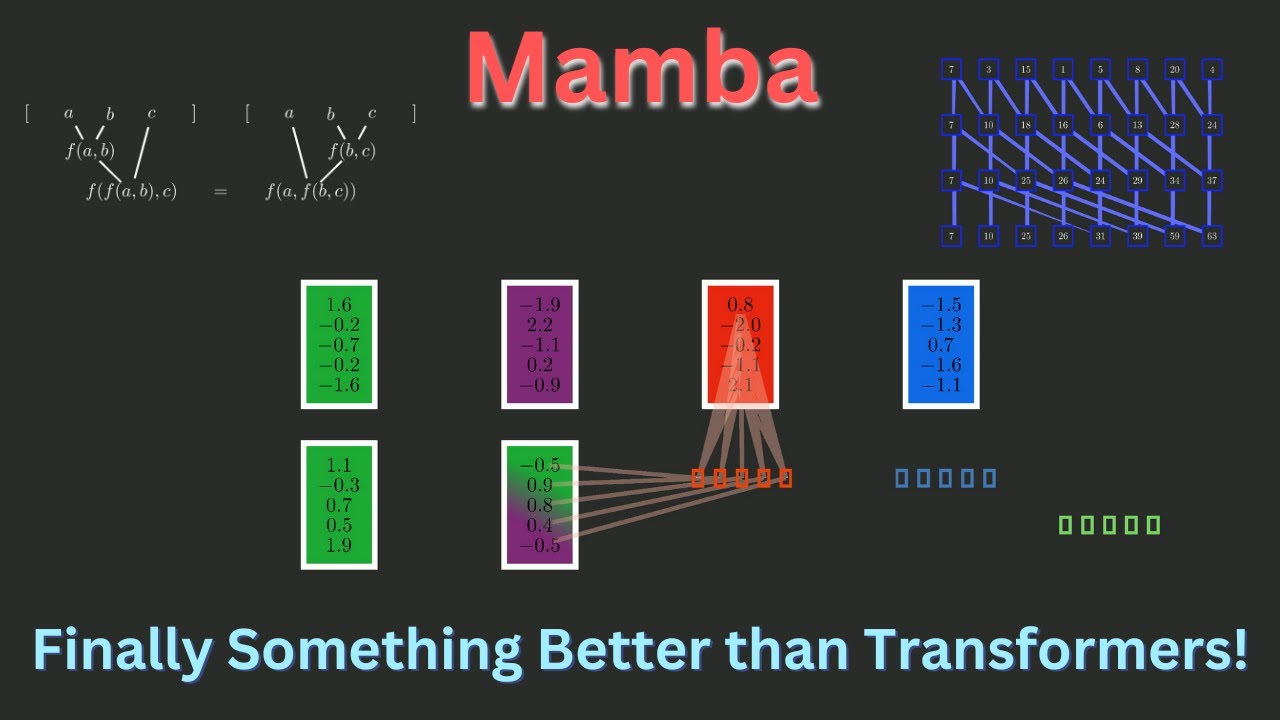

To explain Mamba, the video first explores recurrent neural networks (RNNs) and their limitations, including difficulties with parallel computation and training challenges due to vanishing and exploding gradients. While RNNs allow for long-range dependency modeling, they have largely fallen out of favor due to their inefficiencies. A recent advancement, linear RNNs, has addressed some of these issues by simplifying the architecture, making it faster and more effective for long sequences. The video also highlights how linear RNNs can be computed in parallel using a cumulative sum algorithm, enhancing their performance.
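The parallel computation mentioned above can be illustrated with a small sketch. It assumes a scalar (per-channel) linear recurrence of the form h_t = w_t * h_{t-1} + x_t and uses a Hillis-Steele style scan, which takes about log2(n) rounds of n independent updates. The function names are hypothetical and chosen for illustration; this is not the video's or the paper's code, and the list comprehension only simulates what a GPU would do in parallel.

```python
def sequential_linear_rnn(weights, inputs):
    """Reference loop: h_t = w_t * h_{t-1} + x_t, starting from h = 0."""
    h = 0.0
    states = []
    for w, x in zip(weights, inputs):
        h = w * h + x
        states.append(h)
    return states


def combine(left, right):
    """Compose two affine maps h -> w*h + x, where `left` is applied first."""
    w1, x1 = left
    w2, x2 = right
    return (w1 * w2, w2 * x1 + x2)


def scan_linear_rnn(weights, inputs):
    """Inclusive scan over (w, x) pairs in about log2(n) rounds."""
    pairs = list(zip(weights, inputs))
    n = len(pairs)
    step = 1
    while step < n:
        # Each position reads only from the previous round's list,
        # so all n updates in a round are independent of each other.
        pairs = [
            combine(pairs[i - step], pairs[i]) if i >= step else pairs[i]
            for i in range(n)
        ]
        step *= 2
    # The second component of each pair is the hidden state h_t.
    return [x for _, x in pairs]
```

With all weights set to 1, `scan_linear_rnn` reduces to an ordinary cumulative sum. The scan works because composing two steps of the recurrence is associative, so partial results can be merged in any grouping.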

Mamba builds on the principles of linear RNNs by introducing dynamic weight generation based on input vectors. This allows the model to selectively forget or retain information, which is crucial for effective language modeling. Mamba also expands output vector sizes, enhancing its capacity to store information without significantly increasing computational overhead. The video emphasizes how Mamba cleverly manages memory transfer in GPU computations, leveraging the architecture to maintain efficiency.
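To make the idea of input-dependent weights concrete, here is a deliberately simplified sketch. It assumes a scalar state and a sigmoid gate computed from the current input. The names `selective_recurrence`, `gate_weight`, and `gate_bias` are hypothetical, and this is not Mamba's actual parameterization; it only shows how a weight generated from the input lets the recurrence forget or retain its state.

```python
import math


def selective_recurrence(inputs, gate_weight, gate_bias):
    """Linear recurrence whose recurrent weight depends on the current input.

    Illustrative assumption: w_t = sigmoid(gate_weight * x_t + gate_bias).
    """
    h = 0.0
    states = []
    for x in inputs:
        # w close to 0 discards the previous state; w close to 1 keeps it.
        w = 1.0 / (1.0 + math.exp(-(gate_weight * x + gate_bias)))
        h = w * h + x
        states.append(h)
    return states
```

For example, `selective_recurrence([1.0, 2.0, 3.0], 0.0, 50.0)` keeps essentially everything and behaves like a running sum, while a bias of `-50.0` forgets the state at every step, so each output is approximately the current input. Because each step is still linear in the state, the same scan approach used for fixed-weight linear RNNs applies.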

The discussion then turns to the controversy surrounding the Mamba paper’s rejection from a prestigious machine learning conference, ICLR 2024. Despite successful reproductions of Mamba’s results by various research groups, reviewers criticized the paper for not being tested on the Long Range Arena benchmark and for its choice of evaluation metrics. The video argues that these criticisms are misplaced, as they conflate different types of tasks and overlook the model’s demonstrated capabilities in language modeling and downstream tasks.

In conclusion, the video captures the excitement surrounding Mamba as a potential breakthrough in neural network architecture while addressing the challenges and debates in the academic community regarding its peer review process. Mamba’s ability to outperform transformers and its innovative approach to RNNs could signal a significant shift in language modeling, though the controversy over its acceptance highlights ongoing issues in academic evaluation practices. The presenter encourages viewers to share their thoughts on both Mamba and the peer review system in machine learning research.

Mamba is a new neural network architecture that came out this year, and it performs better than transformers at language modelling! This is probably the most exciting development in AI since 2017. In this video I explain how to derive Mamba from the perspective of linear RNNs. And don’t worry, there’s no state space model theory needed!

Mamba paper: Mamba: Linear-Time Sequence Modeling with Selective State Spaces | OpenReview

Linear RNN paper: Resurrecting Recurrent Neural Networks for Long Sequences | OpenReview

#mamba

#deeplearning

#largelanguagemodels

00:00 Intro

01:33 Recurrent Neural Networks

05:24 Linear Recurrent Neural Networks

06:57 Parallelizing Linear RNNs

15:33 Vanishing and Exploding Gradients

19:08 Stable initialization

21:53 State Space Models

24:33 Mamba

25:26 The High Performance Memory Trick

27:35 The Mamba Drama