The video discusses Mamba, a sequence-modeling architecture that uses Selective State Spaces to run in time linear in sequence length. This lets it handle long sequences efficiently while competing with Transformers and RNNs. Mamba's design and reported results suggest it excels at tasks that require processing long contexts, which makes it a promising advance in sequence modeling.

In the video, the presenter discusses the Mamba architecture from the paper "Mamba: Linear-Time Sequence Modeling with Selective State Spaces" by Albert Gu and Tri Dao. Mamba is positioned as a potential competitor to Transformers because it scales efficiently to long sequences. Earlier efficient alternatives, such as recurrent neural networks (RNNs) and previous state-space models like S4, also scale well, but they have not matched attention in modeling quality. Mamba aims to combine both. The presenter outlines the limitations of existing models. Transformers have compute and memory costs that grow quadratically with sequence length. RNNs are restrictive because each step can see only the previous hidden state and the current input.
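The scaling argument can be made concrete with a back-of-the-envelope count. This is a simplification that ignores constant factors, model width, and hardware effects, and the function names are hypothetical. Causal attention compares each new token with every token seen so far. A recurrent or state-space model does a fixed amount of work per token on a fixed-size state.

```python
def attention_pairs(seq_len: int) -> int:
    """Pairwise scores in causal self-attention: token t (1-indexed) attends to t tokens,
    so the total is 1 + 2 + ... + L = L(L + 1) / 2, i.e. quadratic in L."""
    return seq_len * (seq_len + 1) // 2


def recurrent_updates(seq_len: int, state_size: int) -> int:
    """State-entry updates in a recurrent model: each token updates a state of fixed size,
    so the total is L * state_size, i.e. linear in L."""
    return seq_len * state_size


if __name__ == "__main__":
    for L in (1_000, 10_000, 100_000):
        # state_size=16 is an arbitrary illustrative value, not a Mamba hyperparameter
        print(L, attention_pairs(L), recurrent_updates(L, state_size=16))
```

For L = 1,000 this gives 500,500 attention scores versus 16,000 state updates. Multiplying L by 10 multiplies the first count by roughly 100 and the second by exactly 10.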

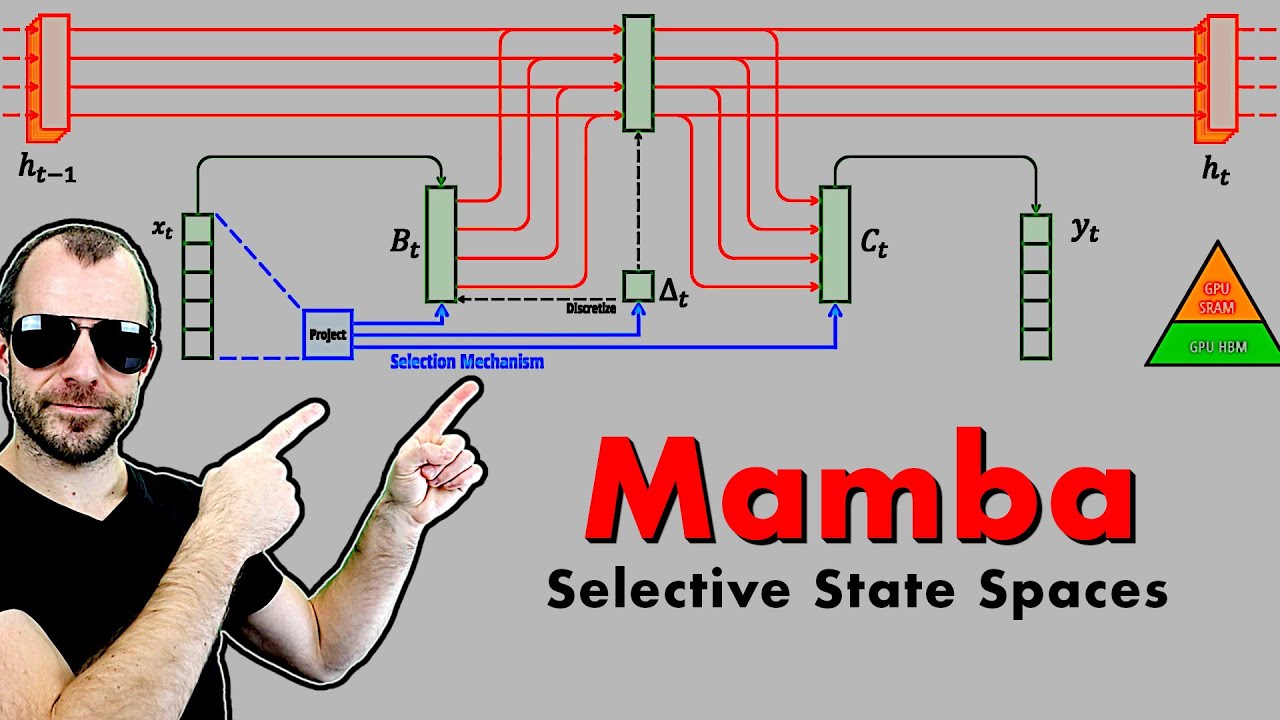

The video explains how Mamba introduces Selective State Spaces, which make state transitions more flexible while keeping computation efficient. Traditional structured state-space models such as S4 apply the same transition parameters at every time step. Mamba instead makes some of these parameters (the step size Δ and the matrices B and C) functions of the current input, while the recurrence itself stays linear in the hidden state. Because the recurrence is linear, Mamba can compute the forward pass over an entire training sequence in parallel, as a Transformer does. At inference time it runs like an RNN, updating a fixed-size state one token at a time.
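The following is a minimal NumPy sketch of a selective SSM recurrence over one sequence. It assumes a diagonal state matrix A per channel and uses hypothetical projection matrices `W_delta`, `W_B`, and `W_C` in place of the paper's learned projections. The exact parameterization in the paper and its CUDA kernel differs in detail. The sketch shows that Δ, B, and C are recomputed from each input x_t, so the transition changes from token to token, yet h_t remains a linear function of h_{t-1}.

```python
import numpy as np


def softplus(z: np.ndarray) -> np.ndarray:
    # Numerically stable log(1 + exp(z)); keeps the step size delta positive.
    return np.logaddexp(0.0, z)


def selective_ssm(x, A, W_delta, W_B, W_C):
    """Run a selective SSM over one sequence, one step at a time.

    x:        (L, D) input sequence with D channels
    A:        (D, N) diagonal state matrix per channel (negative entries -> decay);
              A itself does not depend on the input
    W_delta:  (D, D) hypothetical projection producing a per-channel step size
    W_B, W_C: (D, N) hypothetical projections producing B_t and C_t
    returns   (L, D) outputs
    """
    L, D = x.shape
    N = A.shape[1]
    h = np.zeros((D, N))                       # hidden state: N entries per channel
    y = np.empty((L, D))
    for t in range(L):
        delta = softplus(x[t] @ W_delta)       # (D,)  input-dependent step size
        B = x[t] @ W_B                         # (N,)  input-dependent input matrix
        C = x[t] @ W_C                         # (N,)  input-dependent output matrix
        A_bar = np.exp(delta[:, None] * A)     # (D, N) zero-order-hold discretization of A
        B_bar = delta[:, None] * B[None, :]    # (D, N) simplified discretization of B (delta * B)
        h = A_bar * h + B_bar * x[t][:, None]  # linear in h, but A_bar/B_bar vary with x_t
        y[t] = h @ C                           # (D,)  read out the state
    return y


rng = np.random.default_rng(0)
L, D, N = 8, 4, 3
x = rng.standard_normal((L, D))
A = -np.exp(rng.standard_normal((D, N)))       # strictly negative, so 0 < A_bar < 1
W_delta = 0.1 * rng.standard_normal((D, D))
W_B = rng.standard_normal((D, N))
W_C = rng.standard_normal((D, N))

y = selective_ssm(x, A, W_delta, W_B, W_C)
print(y.shape)  # (8, 4)
```

The loop body is exactly one inference step with a constant-size state `h`. During training, the same linear recurrence can be evaluated in parallel over t with a scan.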

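The presenter goes into the details of the architecture and emphasizes that it handles long sequences effectively: the paper reports performance improving on real data with sequences up to a million tokens long. Because the selective recurrence can no longer be computed as a convolution, Mamba evaluates it with a parallel scan, a generalized prefix sum. Its hardware-aware implementation loads the SSM parameters into fast on-chip GPU SRAM, performs the discretization and recurrence there, and writes only the outputs back to slower high-bandwidth memory (HBM). This reduces the memory-transfer bottleneck that would otherwise limit speed. The efficiency matters in domains such as language, audio, and genomics, where long sequences are common.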
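The prefix-sum idea can be sketched in NumPy for a scalar linear recurrence h_t = a_t h_{t-1} + b_t. This is the shape of the selective SSM update once Ā_t and B̄_t x_t are known. In Mamba these are elementwise over the state, so the same scan applies to each entry. The sketch uses a Hillis-Steele scan for brevity. The paper refers to a work-efficient parallel scan, and its CUDA kernel additionally fuses the scan with discretization in SRAM. This sketch models neither of those, and the function names are illustrative.

```python
import numpy as np


def sequential_scan(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Reference: h_t = a_t * h_{t-1} + b_t with h_{-1} = 0, computed step by step."""
    h = np.zeros(len(b))
    state = 0.0
    for t in range(len(b)):
        state = a[t] * state + b[t]
        h[t] = state
    return h


def parallel_scan(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Same result via an inclusive Hillis-Steele scan.

    Each pair (a_t, b_t) represents the map h -> a_t * h + b_t. Composing an earlier
    map (a1, b1) with a later one (a2, b2) gives (a1 * a2, a2 * b1 + b2). This operator
    is associative, so a prefix-sum-style scan applies. Each round below is one
    vectorized update over all positions, and there are ceil(log2(L)) rounds.
    """
    A = np.asarray(a, dtype=float).copy()
    B = np.asarray(b, dtype=float).copy()
    offset = 1
    while offset < len(B):
        # Position i absorbs the block ending at i - offset (earlier) into its own (later).
        new_A = A[offset:] * A[:-offset]
        new_B = A[offset:] * B[:-offset] + B[offset:]
        A[offset:], B[offset:] = new_A, new_B
        offset *= 2
    return B  # B[t] now equals h_t, since the scan starts from h_{-1} = 0


print(parallel_scan(np.ones(5), np.ones(5)))  # a_t = 1 reduces to a prefix sum: [1. 2. 3. 4. 5.]

rng = np.random.default_rng(0)
a = rng.uniform(0.0, 1.0, size=37)
b = rng.standard_normal(37)
print(np.allclose(parallel_scan(a, b), sequential_scan(a, b)))  # True
```

The associativity of the combine operator is what matters here. It lets the L sequential steps be regrouped into a logarithmic number of parallel rounds.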

In the experimental results, the video highlights that Mamba matches or surpasses other attention-free models and is competitive with Transformers. According to the abstract, Mamba-3B outperforms Transformers of the same size and matches Transformers twice its size. The authors claim that Mamba is particularly strong on tasks with long sequences, such as DNA modeling and audio waveforms. This suggests its design suits settings where long context matters most.

Finally, the presenter emphasizes Mamba's potential as a general sequence-modeling backbone and invites viewers to explore the implementation on GitHub. The video illustrates the balance Mamba strikes between computational efficiency and modeling power, and suggests it could point the way for future sequence-modeling architectures. Overall, Mamba is an exciting step in the evolution of models for long-sequence tasks.

#mamba #S4 #SSM

OUTLINE:

0:00 - Introduction

0:45 - Transformers vs RNNs vs S4

6:10 - What are state space models?

12:30 - Selective State Space Models

17:55 - The Mamba architecture

22:20 - The SSM layer and forward propagation

31:15 - Utilizing GPU memory hierarchy

34:05 - Efficient computation via prefix sums / parallel scans

36:01 - Experimental results and comments

38:00 - A brief look at the code

Paper: [2312.00752] Mamba: Linear-Time Sequence Modeling with Selective State Spaces

Abstract:

Foundation models, now powering most of the exciting applications in deep learning, are almost universally based on the Transformer architecture and its core attention module. Many subquadratic-time architectures such as linear attention, gated convolution and recurrent models, and structured state space models (SSMs) have been developed to address Transformers’ computational inefficiency on long sequences, but they have not performed as well as attention on important modalities such as language. We identify that a key weakness of such models is their inability to perform content-based reasoning, and make several improvements. First, simply letting the SSM parameters be functions of the input addresses their weakness with discrete modalities, allowing the model to selectively propagate or forget information along the sequence length dimension depending on the current token. Second, even though this change prevents the use of efficient convolutions, we design a hardware-aware parallel algorithm in recurrent mode. We integrate these selective SSMs into a simplified end-to-end neural network architecture without attention or even MLP blocks (Mamba). Mamba enjoys fast inference (5× higher throughput than Transformers) and linear scaling in sequence length, and its performance improves on real data up to million-length sequences. As a general sequence model backbone, Mamba achieves state-of-the-art performance across several modalities such as language, audio, and genomics. On language modeling, our Mamba-3B model outperforms Transformers of the same size and matches Transformers twice its size, both in pretraining and downstream evaluation.

Authors: Albert Gu, Tri Dao
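The abstract notes that making the SSM parameters functions of the input "prevents the use of efficient convolutions." The sketch below shows why. It assumes a single channel with a diagonal, already-discretized state matrix; the names `A_bar`, `B_bar`, and `C` are chosen for illustration. When the parameters are fixed, the recurrence unrolls into a causal convolution with kernel K_k = C Ā^k B̄, which can be computed once from the parameters alone.

```python
import numpy as np


def lti_recurrent(x, A_bar, B_bar, C):
    """Time-invariant SSM, one channel: h_t = A_bar * h_{t-1} + B_bar * x_t, y_t = C . h_t.
    A_bar, B_bar, C: (N,) with A_bar a diagonal state matrix stored as a vector; x: (L,)."""
    h = np.zeros(len(B_bar))
    y = np.empty(len(x))
    for t, x_t in enumerate(x):
        h = A_bar * h + B_bar * x_t
        y[t] = C @ h
    return y


def lti_convolution(x, A_bar, B_bar, C):
    """Same outputs as a causal convolution with kernel K_k = C . A_bar^k . B_bar.
    K depends only on the parameters, never on x, so it can be built once up front."""
    L = len(x)
    powers = A_bar[None, :] ** np.arange(L)[:, None]  # (L, N): A_bar^k for k = 0..L-1
    K = powers @ (C * B_bar)                           # (L,)
    return np.convolve(x, K)[:L]                       # y_t = sum_{k<=t} K_k x_{t-k}


rng = np.random.default_rng(0)
N, L = 4, 32
A_bar = rng.uniform(0.1, 0.9, size=N)
B_bar = rng.standard_normal(N)
C = rng.standard_normal(N)
x = rng.standard_normal(L)
print(np.allclose(lti_recurrent(x, A_bar, B_bar, C), lti_convolution(x, A_bar, B_bar, C)))  # True
```

S4-style models compute this convolution with FFTs; `np.convolve` is the direct method, used here for clarity. In a selective SSM, Ā, B̄, and C are recomputed from each x_t, so no single kernel K exists. This is why Mamba relies on the recurrent (scan) form instead.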