The video discusses Meta’s delay in launching its large AI model “Behemoth,” setting the company’s $72 billion AI investment against an industry shift toward smaller, more efficient models favored for their practicality and lower cost. It also argues that success in AI now depends more on user adoption, seamless integration into popular platforms, and practical utility than on sheer model size or technological superiority.

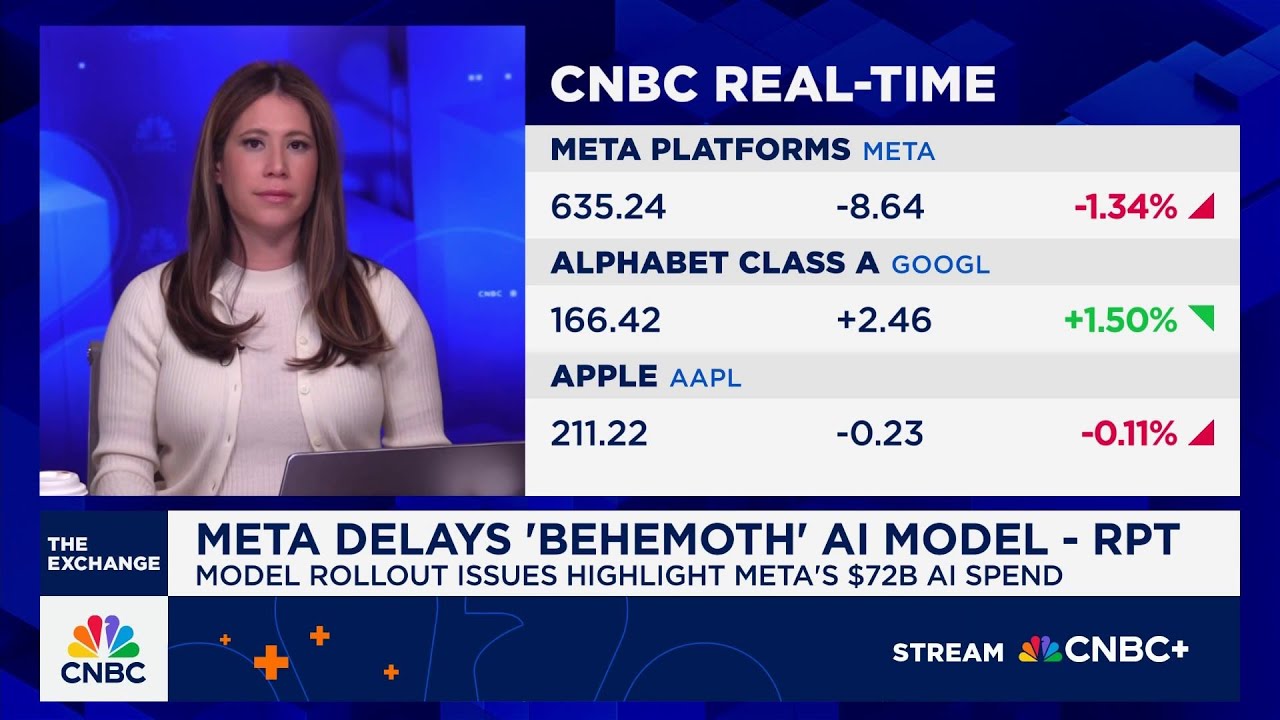

The discussion begins with Meta’s recent decision to delay the rollout of its large AI model, codenamed “Behemoth,” and notes the company’s $72 billion AI investment. The delay signals a shift in Meta’s AI strategy as the landscape evolves, with smaller, more efficient models gaining prominence over massive, resource-intensive ones. The conversation suggests that Meta’s approach, which has historically prioritized open-source models, may be changing. That raises the question of whether Behemoth will be released openly or kept closed, a choice that would affect both its competitiveness and its monetization prospects.

The segment contrasts Meta’s strategy with that of other industry players such as OpenAI and Google. OpenAI’s GPT-4 is optimized for cost and real-time interaction, while Google’s Gemini 2.0 is a lighter, faster model designed for practicality. Chinese open-source models from Alibaba and DeepSeek likewise exemplify a trend toward smaller, more accessible models. These models are increasingly favored because they are cheaper, faster, and more practical for real-world applications, suggesting that the AI race is shifting away from sheer scale toward usability and efficiency.

The discussion also touches on Meta’s substantial capital expenditures (CapEx), which are notably high relative to its revenue, surpassing on that measure even some hyperscalers with extensive cloud businesses. This aggressive investment raises questions about Meta’s long-term strategy and whether the delayed Behemoth model will justify such spending. The broader point is that as AI models become commoditized, any individual model matters less, and the focus shifts toward deploying useful applications that generate tangible value.

Further, the conversation highlights that despite Google’s leading performance in AI benchmarks, the company faces challenges in translating technological superiority into user engagement and monetization. Google’s advantage lies in its existing app ecosystem—such as Search, YouTube, and Android—that provides a built-in distribution channel. This distribution edge is crucial because it allows Google to integrate AI into widely used platforms, giving it a competitive advantage over newer or less integrated models like Meta’s or OpenAI’s.

Finally, the discussion underscores that user adoption and ease of use are critical factors in AI success. Beyond any technical lead, Google’s ability to embed AI into its popular apps and reach billions of users remains a key strength. While OpenAI has rapidly grown its user base with ChatGPT, the challenge for all tech giants is to develop AI-powered applications that are not only innovative but also seamlessly integrated into platforms that users already trust and use regularly. This strategic focus on distribution and practical utility is shaping the future of AI development and competition.