The video discusses a study by MIT and Cornell University that found AI chatbots can effectively reduce belief in conspiracy theories by engaging participants in persuasive dialogues, resulting in a 20% average decrease in such beliefs lasting for at least two months. However, the narrator expresses concern about the ethical implications of using AI for this purpose, likening it to brainwashing and criticizing the potential for ideological manipulation and bias in the research.

The study, conducted by researchers at MIT and Cornell University and published in the journal Science, explores the use of AI chatbots to reduce belief in conspiracy theories. Participants who held various conspiracy beliefs conversed with AI chatbots designed to present persuasive arguments against those beliefs. The researchers aimed to determine whether these dialogues could produce a lasting change in the participants’ views, and tracked the effects for at least two months.

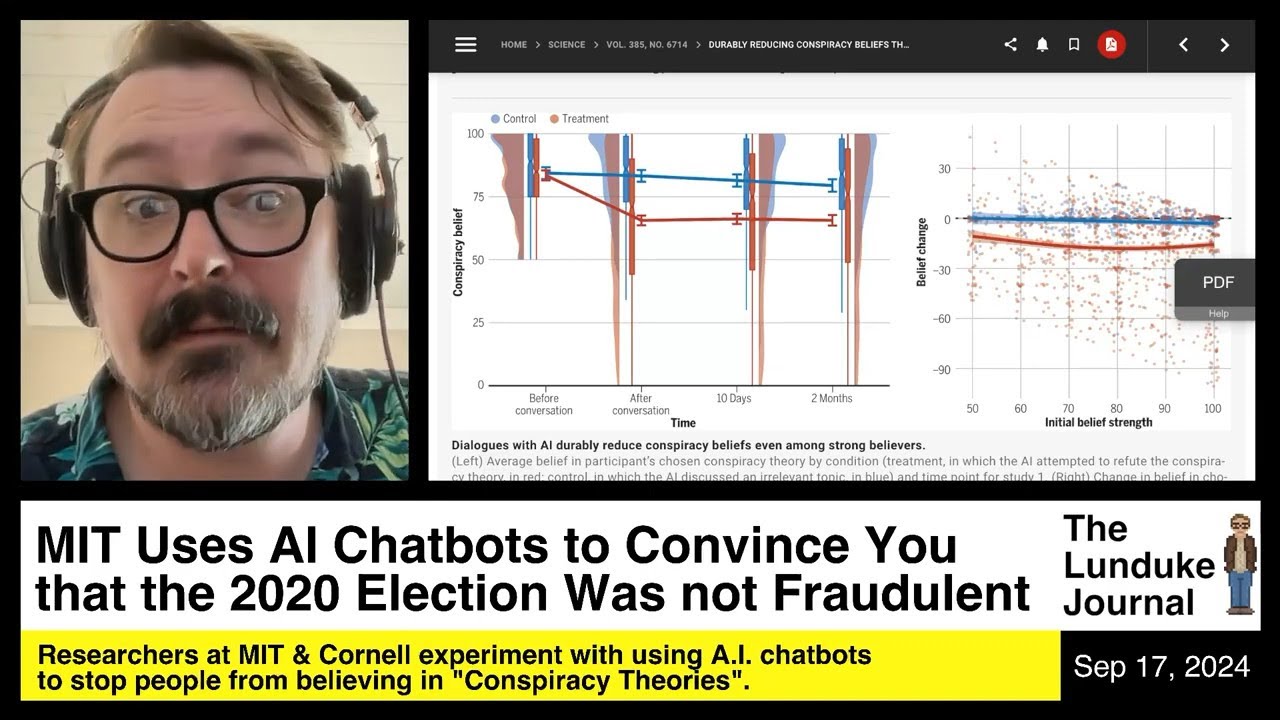

The findings indicated that the AI chatbot interactions resulted in an average reduction of 20% in belief in conspiracy theories among participants, with effects lasting for at least two months. The study covered a range of conspiracy theories, including those related to the 2020 U.S. presidential election and COVID-19 restrictions. Notably, the AI was effective even with participants who had deeply entrenched beliefs, although it did not reduce belief in true conspiracies. The researchers highlighted the potential of AI to mediate conflicts and address societal concerns regarding widespread conspiracy beliefs.

The video’s narrator expresses strong skepticism and concern about the ethical implications of using AI for this purpose. They argue that the approach resembles a form of brainwashing, where individuals are subjected to a “struggle session” with an AI to change their thoughts. The narrator worries about the broader implications of deploying such technology on a large scale, suggesting that it could be used to manipulate public opinion and suppress dissenting ideas.

The narrator also critiques the study’s underlying assumptions, noting that the researchers appeared to start from a politically biased perspective, labeling certain beliefs as inherently wrong. They argue that this bias undermines the integrity of the research, because the study does not genuinely seek to evaluate the truth of the conspiracy theories but rather aims to change people’s minds about them. For the narrator, this raises concerns about the potential for AI to be used as a tool for ideological control.

In conclusion, the video highlights the narrator’s deep unease with the intersection of AI technology and the manipulation of beliefs. They call for a more open dialogue about the ethical ramifications of using AI chatbots in this manner and express a desire for researchers like Thomas Costello to engage in discussions about the implications of their work. The narrator emphasizes the importance of challenging ideas and fostering critical thinking rather than resorting to AI-driven persuasion, which they view as a dangerous precedent.