The video explains the Mixture of Experts (MoE) approach in machine learning, which improves AI efficiency by activating only the specialized subnetworks, or experts, that are relevant to each input rather than engaging the entire model. The key components of MoE are sparsity, routing, and load balancing, which together reduce computation while preserving model quality. This makes MoE particularly valuable for complex applications such as natural language processing.

Mixture of Experts (MoE) is a machine learning approach that improves AI efficiency by building models from modular parts. An MoE model is divided into separate subnetworks, called experts, and each expert specializes in a particular subset of the input data. As a result, the system can activate only the experts relevant to a given input. Traditional dense models, by contrast, run the entire network for every input. Running only a fraction of the network cuts the computation needed per input without reducing the model's overall capacity.

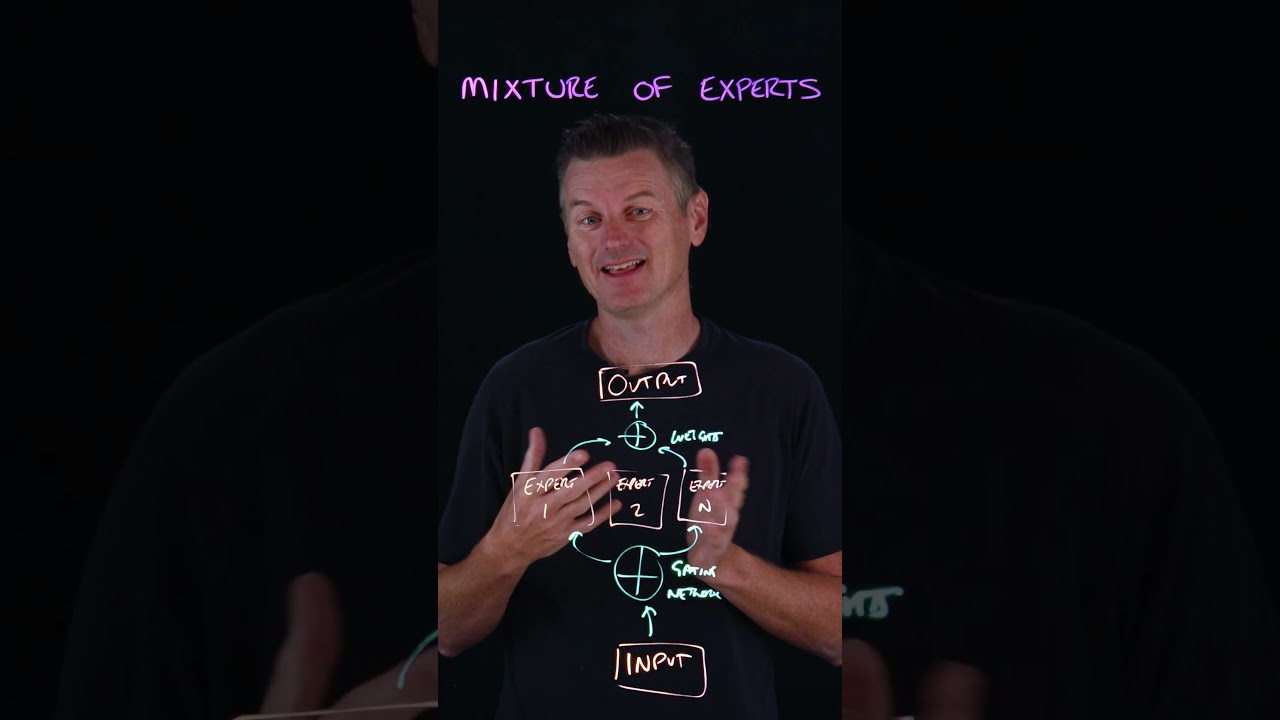

A key component of the MoE architecture is the gating network, also called the router, which acts as a coordinator. The gating network is trained alongside the experts. For each input, it scores how suitable every expert is and activates the highest-scoring ones. This selective activation is what lets the model concentrate its computation on the parts of the network best suited to the input, which improves both performance and resource usage.
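The gating step can be made concrete with a minimal sketch of a learned linear gate that uses top-k selection, written in plain Python. The function names `softmax` and `gate`, the weight matrix `w_gate`, and the choice of `k=2` are illustrative assumptions rather than details from the video. Production routers operate on batched tensors, but the arithmetic is the same: score every expert, keep the best k, and renormalize their weights.

```python
import math
from typing import List, Tuple


def softmax(logits: List[float]) -> List[float]:
    # Subtract the max logit for numerical stability before exponentiating.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def gate(x: List[float], w_gate: List[List[float]], k: int = 2) -> List[Tuple[int, float]]:
    """Hypothetical top-k gate: score every expert and keep the k best.

    w_gate holds one weight row per expert (an assumed, illustrative layout);
    the logit for expert i is the dot product of row i with the input x.
    """
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in w_gate]
    probs = softmax(logits)
    # Indices of the k highest-probability experts, best first.
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    # Renormalize so the selected experts' weights sum to 1.
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


if __name__ == "__main__":
    # Hypothetical 2-dimensional input routed across 4 experts.
    x = [1.0, 0.5]
    w_gate = [[0.2, 0.1], [1.0, 0.0], [0.0, 3.0], [-1.0, 0.3]]
    print(gate(x, w_gate, k=2))
```

With these made-up weights, the expert logits are 0.25, 1.0, 1.5, and -0.85. The gate therefore selects expert 2 and expert 1, weighting them about 0.62 and 0.38. Taking the softmax over all experts and then renormalizing the top k gives exactly the same weights as applying the softmax to only the k selected logits.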

The video highlights three essential components of the MoE framework: sparsity, routing, and load balancing. Sparsity means that only a small number of experts are active for any given input, which reduces computational overhead. Routing is the process of deciding which experts should handle each input, so that the most relevant experts are engaged. Load balancing keeps the whole system efficient by spreading inputs across experts over time. Without it, the router tends to favor a few experts, which then become overloaded bottlenecks while the remaining experts receive too little training.
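The three components can be seen working together in a toy sparse MoE layer. This is a sketch under stated assumptions. The experts are hypothetical scaling functions, and routing reuses a top-k softmax gate. Sparsity shows up directly, because unselected experts are never called for a token. For load balancing, the sketch computes an auxiliary loss in the style of the Switch Transformer: the number of experts, multiplied by the sum over experts of (fraction of routing slots received × mean router probability). The video does not specify a balancing formula, and this is only one common choice. All names here (`route`, `moe_forward`, `aux_loss`) are illustrative.

```python
import math
from typing import Callable, List, Tuple

Vector = List[float]


def softmax(logits: Vector) -> Vector:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]


def route(x: Vector, w_gate: List[Vector], k: int) -> Tuple[Vector, List[int]]:
    """Routing: score every expert; return all probabilities and the top-k indices."""
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in w_gate]
    probs = softmax(logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    return probs, top


def moe_forward(
    tokens: List[Vector],
    experts: List[Callable[[Vector], Vector]],
    w_gate: List[Vector],
    k: int = 1,
) -> Tuple[List[Vector], List[int], float]:
    """Sparse forward pass: each token runs through only its k selected experts.

    Returns the outputs, how many tokens each expert processed, and a
    Switch-Transformer-style load-balancing loss (an assumed choice).
    """
    n = len(experts)
    calls = [0] * n        # tokens actually processed by each expert
    prob_sums = [0.0] * n  # accumulated router probability per expert
    outputs = []
    for x in tokens:
        probs, top = route(x, w_gate, k)
        norm = sum(probs[i] for i in top)
        y = [0.0] * len(x)
        for i in top:  # sparsity: experts outside `top` are never evaluated
            calls[i] += 1
            out = experts[i](x)
            y = [yj + (probs[i] / norm) * oj for yj, oj in zip(y, out)]
        outputs.append(y)
        for i in range(n):
            prob_sums[i] += probs[i]
    t = len(tokens)
    f = [c / (t * k) for c in calls]  # fraction of routing slots per expert
    p = [s / t for s in prob_sums]    # mean router probability per expert
    aux_loss = n * sum(fi * pi for fi, pi in zip(f, p))
    return outputs, calls, aux_loss


if __name__ == "__main__":
    # Four hypothetical experts that just scale their input.
    experts = [lambda v, s=s: [s * vi for vi in v] for s in (1.0, 2.0, 3.0, 4.0)]
    w_gate = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
    balanced = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
    skewed = [[1.0, 0.0]] * 4
    for name, batch in (("balanced", balanced), ("skewed", skewed)):
        _, calls, loss = moe_forward(batch, experts, w_gate, k=1)
        print(name, calls, round(loss, 3))
```

The auxiliary loss equals 1 when tokens are spread evenly across experts and grows as routing concentrates on a few of them. In this toy run, the skewed batch sends every token to expert 0. During training, such a term is typically added to the main objective with a small coefficient, which nudges the router toward balanced assignments.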

Although the concept of Mixture of Experts dates back to 1991, it is experiencing a resurgence in modern AI, particularly in Large Language Models (LLMs). Human language is complex and varied, and MoE's ability to assign different kinds of input to different specialized experts makes it especially valuable for natural language processing. Because each input activates only a few experts, these models can grow their total capacity and achieve better performance while keeping the computation spent on each input under control.

In conclusion, the Mixture of Experts approach represents a significant advancement in AI efficiency, allowing for more specialized and effective processing of data. By activating only the necessary experts for each task, the MoE framework not only enhances performance but also optimizes resource usage. As AI continues to evolve, the principles of MoE are likely to play a crucial role in the development of more sophisticated and efficient models.