The video covers Together AI’s research paper on the Mixture of Agents (MOA) approach, which combines multiple open-source language models so that their collective output outperforms leading models such as GPT-4o. By having diverse models act as agents in a collaborative framework, MOA produces higher-quality responses to prompts, illustrating how agent-based collaboration can advance language model capabilities.

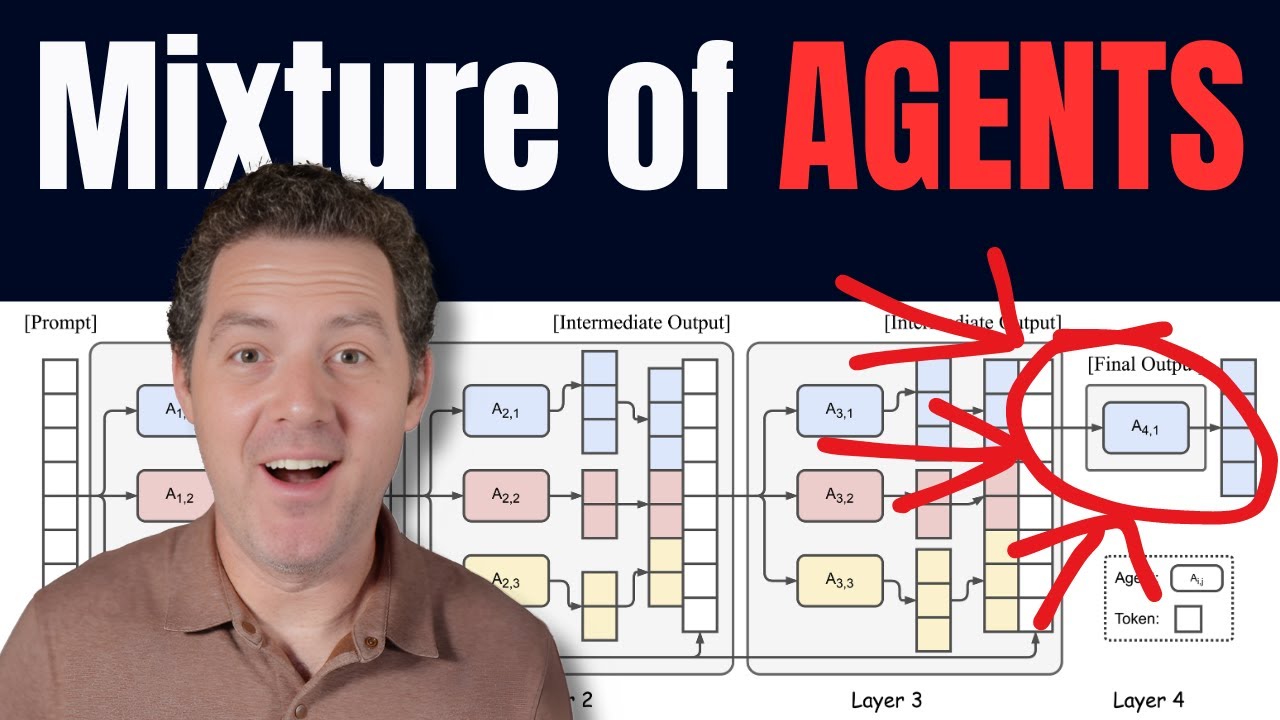

Together AI’s paper introduces Mixture of Agents (MOA), which draws on the collective intelligence of open-source models: several large language models work together as agents, and their combined output surpasses leading models like GPT-4o. MOA is organized in layers. In the example architecture, each layer contains three agents, and each agent receives all responses from the previous layer as auxiliary input when generating its own refined response. Integrating the diverse capabilities and insights of different models in this way yields a more robust and versatile combined system that outperforms GPT-4o on the AlpacaEval 2.0 benchmark (65.1% versus 57.5%, according to the paper).
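The layered data flow described above can be sketched in a few lines. This is a minimal illustration, not Together AI’s implementation: it assumes each model is a plain Python callable from prompt to response (real use would wrap LLM API calls), and the helper names `with_references` and `mixture_of_agents`, as well as the prompt wording, are hypothetical.

```python
from typing import Callable, List

# Hypothetical stand-in for an LLM: any function from prompt text to response text.
Model = Callable[[str], str]


def with_references(query: str, references: List[str]) -> str:
    """Attach the previous layer's responses (if any) to the user query."""
    if not references:
        return query  # first layer: agents see only the original query
    numbered = "\n".join(f"{i}. {r}" for i, r in enumerate(references, start=1))
    return f"Previous responses:\n{numbered}\n\nQuery: {query}"


def mixture_of_agents(query: str, layers: List[List[Model]], aggregator: Model) -> str:
    """Run each layer on all outputs of the layer before it, then aggregate once."""
    outputs: List[str] = []
    for agents in layers:
        prompt = with_references(query, outputs)
        # Every agent in this layer sees every response from the previous layer.
        outputs = [agent(prompt) for agent in agents]
    # A single final aggregator synthesizes the last layer's responses.
    return aggregator(with_references(query, outputs))
```

Passing an identity function as the aggregator is a quick way to inspect what the final model would receive: only the last layer’s responses appear in its prompt, because earlier layers influence the result indirectly through the agents that read them.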

The paper also examines what it calls the collaborativeness of LLMs: a model tends to generate better responses when it is shown outputs from other models, even when those models are less capable on their own. MOA assigns agents one of two roles. Proposers generate candidate reference responses, and aggregators synthesize those candidates into a single high-quality output. The video stresses that different models bring different perspectives and capabilities to this process. In the reported configuration, MOA uses six open-source models as proposers and Qwen1.5-110B-Chat as the final aggregator.
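To make the two roles concrete, here is a hedged sketch of one proposer/aggregator step. The `Agent` class, the `propose` and `aggregate` functions, and the aggregation instructions are illustrative assumptions, not the prompt or code used in the paper; `generate` stands in for whatever LLM call a real system would make.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Agent:
    name: str
    generate: Callable[[str], str]  # hypothetical wrapper around an LLM call


def propose(proposers: List[Agent], query: str) -> List[str]:
    """Each proposer answers the query independently; diversity comes from using different models."""
    return [p.generate(query) for p in proposers]


def aggregate(aggregator: Agent, query: str, candidates: List[str]) -> str:
    """Ask the aggregator to synthesize the candidates rather than simply pick one."""
    numbered = "\n".join(f"[{i}] {c}" for i, c in enumerate(candidates, start=1))
    prompt = (
        "You are given candidate responses from several models. "
        "Critically evaluate them, then write a single improved answer.\n\n"
        f"Candidates:\n{numbered}\n\nQuery: {query}"
    )
    return aggregator.generate(prompt)
```

The aggregator is asked to critique and combine, not to vote, which mirrors the paper’s point that it should synthesize responses rather than rank them.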

The video then runs a live test of MOA, asking multiple LLMs to write sentences that end in the word “apples.” Although some requests hit rate-limit errors, MOA still produces a correct, high-quality response, showing the collaborative framework working in practice on inputs combined from several models.
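In the demo, correctness is judged by reading the output. A small checker can automate that judgment. This is a hypothetical helper, not part of the video or the paper, and it assumes the response puts one sentence per line, optionally numbered like `1.` or `1)`.

```python
import re
from typing import List


def split_sentences(text: str) -> List[str]:
    """Naive split: one sentence per non-empty line, with list numbering removed."""
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    return [re.sub(r"^\d+[.)]\s*", "", ln) for ln in lines]


def ends_with_word(sentence: str, word: str) -> bool:
    """Ignore trailing punctuation and quotes, then compare the last word case-insensitively."""
    words = re.findall(r"[A-Za-z']+", sentence)
    return bool(words) and words[-1].strip("'").lower() == word.lower()


def check_response(text: str, word: str = "apples") -> List[bool]:
    """Return one pass/fail flag per sentence in the response."""
    return [ends_with_word(s, word) for s in split_sentences(text)]
```

For example, `check_response("1. I picked some apples.\n2. Apples are red.")` flags the first sentence as passing and the second as failing, since the second only starts with the target word.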

According to the paper, MOA shows consistent, monotonic performance gains as layers are added, which indicates that additional layers improve output quality. Experiments varying the number of proposers show that integrating a wider variety of inputs from different models significantly improves the final output. The video closes by comparing this to human collaboration, where diverse perspectives lead to better outcomes.

Overall, the video presents MOA as a promising way to combine the strengths of multiple LLMs. By exploiting their collaborativeness and the diversity of their capabilities, MOA outperforms leading models like GPT-4o, and the live test both demonstrates its effectiveness on real prompts and points to room for further research into collaborative agent-based frameworks for language models.