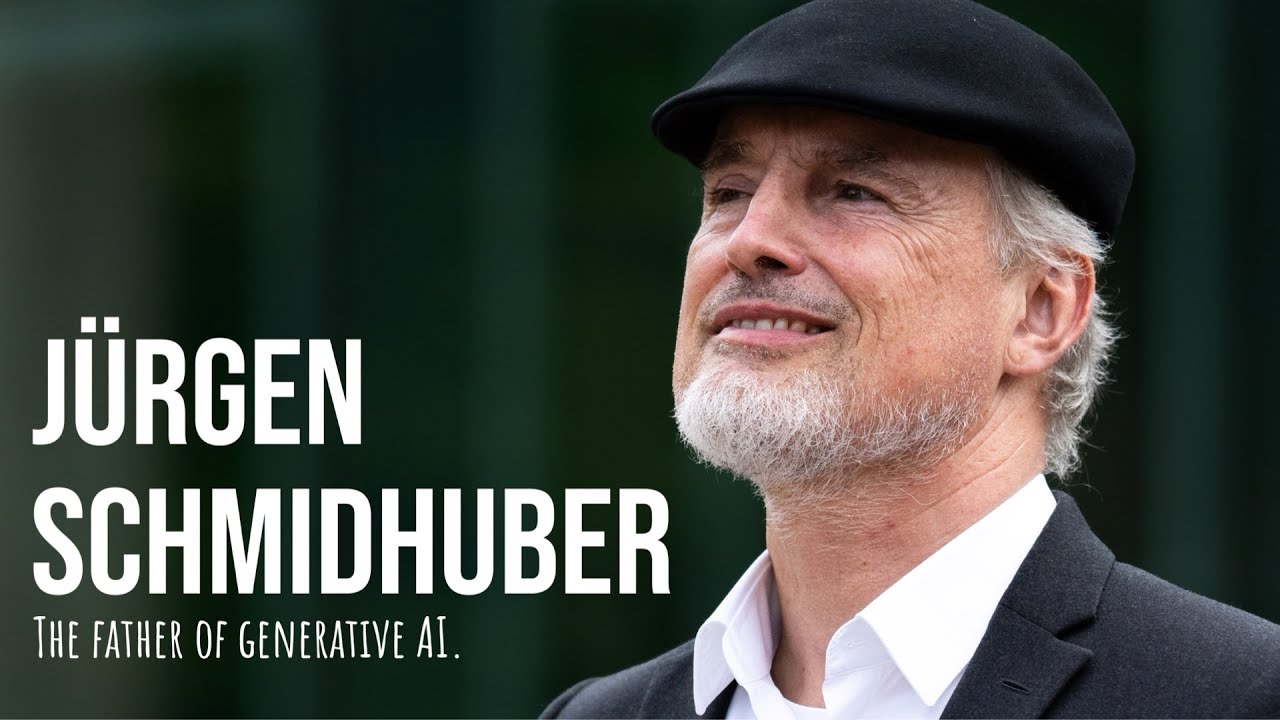

The video features Jürgen Schmidhuber discussing the evolution of AI. He argues that current large language models such as ChatGPT fall short of true artificial general intelligence because they lack reasoning and creativity. He also highlights the significance of foundational algorithms such as LSTMs and the role of data compression in scientific discovery, and he expresses optimism about the future of AI, particularly reinforcement learning and the development of more sophisticated systems.

The discussion centers on the evolution of artificial intelligence (AI) and on the contributions of Jürgen Schmidhuber, a pioneer in the field. He reflects on recent advances, including large language models such as ChatGPT, and argues that many people misunderstand what these models can and cannot do. Although the models have made significant strides, he maintains that they do not signal the imminent arrival of artificial general intelligence (AGI), because they lack essential cognitive abilities such as reasoning and creativity.

Schmidhuber stresses the importance of understanding the foundational algorithms behind modern AI, including recurrent neural networks (RNNs) and the long short-term memory (LSTM) architecture he helped develop. He explains that these recurrent models can solve problems that current transformer-based models struggle with, such as the parity problem (deciding whether a sequence of bits contains an odd or even number of 1s). The conversation also addresses depth in neural networks: deeper architectures can generalize better and perform better on many tasks.
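
To make the parity point concrete, here is a minimal sketch (not code from the video) of a hand-wired recurrent network built from threshold units. The names `step` and `rnn_parity` are hypothetical, and the weights are chosen by hand rather than learned, so this only illustrates why a recurrent state is enough to solve parity; it is not a trained LSTM. At each time step, two threshold units compute the XOR of the previous state and the current bit, so the one-bit state always holds the parity of everything seen so far.

```python
from typing import Iterable


def step(z: float) -> int:
    """Heaviside threshold activation: 1 if z > 0, else 0."""
    return 1 if z > 0 else 0


def rnn_parity(bits: Iterable[int]) -> int:
    """Hand-wired recurrent network (hypothetical illustration) computing parity.

    The hidden state h is a single bit. Each update implements
    h_t = XOR(h_{t-1}, x_t) with two threshold units:
      step(s - 0.5) fires when s >= 1, step(s - 1.5) fires when s == 2,
    so their difference is 1 exactly when s == 1.
    """
    h = 0  # parity of the (empty) prefix
    for x in bits:
        if x not in (0, 1):
            raise ValueError("inputs must be bits (0 or 1)")
        s = h + x  # weighted sum of previous state and current input
        h = step(s - 0.5) - step(s - 1.5)  # XOR via two threshold units
    return h
```

Because the state is a single bit updated once per input, the same network handles sequences of any length; for example, `rnn_parity([1, 0, 1, 1])` returns 1.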

The video also explores data compression as a driving force behind scientific discovery and AI development. Schmidhuber argues that the essence of science is finding regularities in data that allow the data to be compressed, and that this compression in turn yields better predictions and a deeper understanding of the world. He draws parallels between human learning and AI, suggesting that both involve discovering and exploiting such regularities to improve performance and acquire knowledge.
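
The link between regularity and compressibility can be illustrated with a general-purpose compressor. The sketch below is an illustration under stated assumptions, not Schmidhuber's own method: it uses Python's standard `zlib` module as a stand-in for a learned model and compares how well data with an obvious regularity compresses against random bytes. The names `compressed_size`, `regular`, and `noise` are hypothetical.

```python
import os
import zlib


def compressed_size(data: bytes) -> int:
    """Length in bytes of `data` after zlib compression at the maximum level (9)."""
    return len(zlib.compress(data, 9))


# Data with an obvious regularity: the byte values 0..15 repeated 256 times.
regular = bytes(i % 16 for i in range(4096))

# Random bytes: with overwhelming probability there is no pattern for zlib to exploit.
noise = os.urandom(4096)

if __name__ == "__main__":
    for name, data in (("regular", regular), ("noise", noise)):
        size = compressed_size(data)
        print(f"{name}: {len(data)} -> {size} bytes (ratio {len(data) / size:.1f})")
```

The regular sequence shrinks to a small fraction of its original size because zlib finds and encodes the repeating pattern, while the random bytes barely compress and typically grow slightly due to format overhead. A model that has captured the pattern can also predict the next byte, which is the connection between compression and prediction that the paragraph describes.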

Schmidhuber also discusses the historical context of AI research, noting that many of the foundational techniques used today were developed decades ago, often during periods of reduced interest in the field. He emphasizes the role of public funding in sustaining research through these "neural network winters" and explains how later advances in computational power made it practical to apply these older algorithms in modern applications.

Finally, the conversation turns to the future of AI. Schmidhuber is optimistic about reinforcement learning and the development of more sophisticated AI systems. He envisions AI that explores and learns from its environment without explicit human guidance, ultimately leading to systems that can reason and adapt in complex scenarios. The discussion closes by stressing how closely past and present AI research are connected and how strongly foundational work shapes the future of the field.