Energy-Based Transformers (EBT) introduce a novel AI approach that replaces traditional token prediction with an iterative optimization guided by a learned energy function, allowing the model to refine outputs dynamically and allocate more “thinking time” to difficult tokens. Although currently slower and more computationally intensive, EBT shows promising improvements in reasoning, generalization, and versatility across tasks like language modeling and image denoising, suggesting a scalable and elegant future direction for AI development.

The video discusses a new AI paradigm called Energy-Based Transformers (EBT), which takes a different approach to language modeling and reasoning than traditional methods. Current large language models (LLMs) improve prediction accuracy by generating more tokens, an approach the video describes as inelegant and hard to scale because reasoning is difficult to verify and reward at scale. The video notes that verification is easier in domains like coding and math, which is why models excel there. The key idea behind EBT is to shift from direct generation to an iterative optimization process guided by a learned verifier, or energy function, that scores candidate predictions and refines them until the score stops improving.

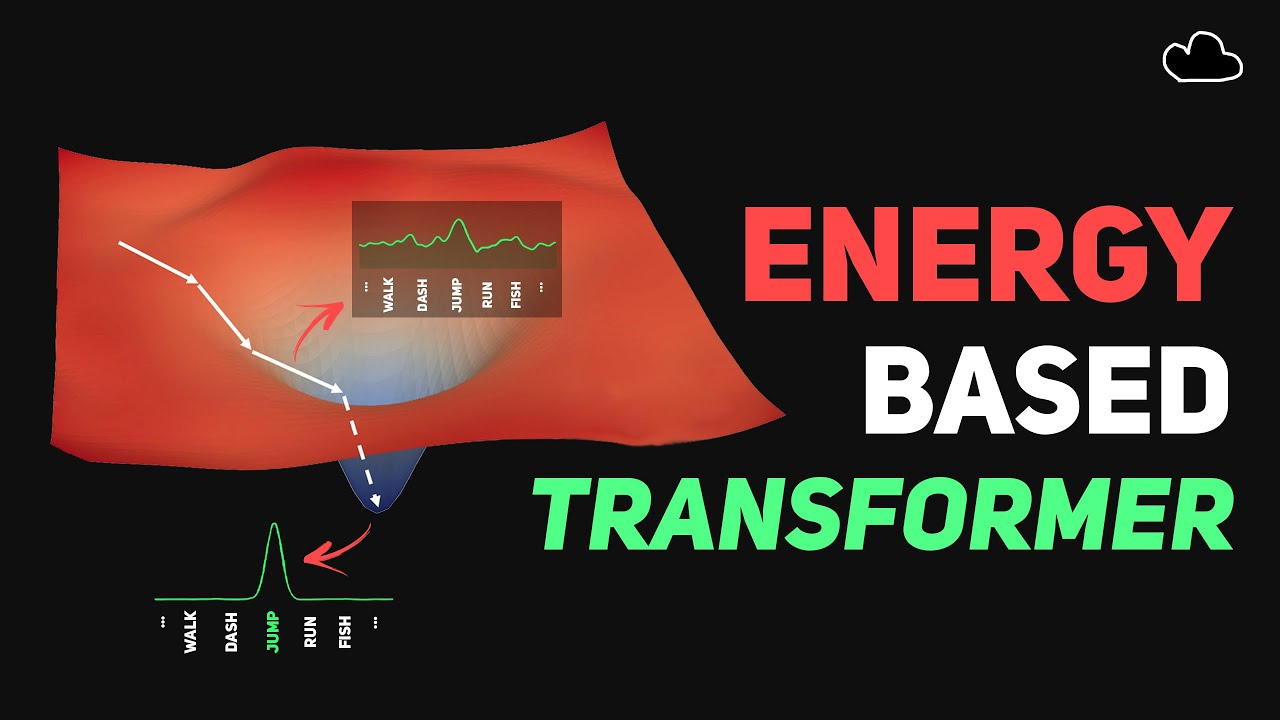

Unlike standard language models that predict the next token by outputting probabilities for all possible tokens at once, EBT starts with a random token embedding and iteratively adjusts it to minimize an energy score, where lower energy means the model judges the candidate to be a better fit for the context. This iterative process allows the model to allocate more “thinking time” to difficult tokens dynamically, potentially improving accuracy without relying on entropy-based heuristics. The video compares this process to diffusion models and generative adversarial networks (GANs), noting that EBT unifies the generator and critic into a single model that refines its predictions through gradient descent on the energy surface.
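
The refinement loop described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the researchers' implementation: `toy_energy` is a hypothetical hand-written quadratic standing in for the learned energy function, and the step size, tolerance, and stopping rule are chosen only for the example.

```python
import random

def toy_energy(candidate, target):
    """Hypothetical stand-in for a learned energy function.

    Lower is better: the squared distance between the candidate
    embedding and a fixed target embedding.
    """
    return sum((c - t) ** 2 for c, t in zip(candidate, target))

def toy_energy_grad(candidate, target):
    """Gradient of toy_energy with respect to the candidate."""
    return [2.0 * (c - t) for c, t in zip(candidate, target)]

def refine(target, step_size=0.1, tol=1e-4, max_steps=200, seed=0):
    """Start from a random candidate and descend the energy surface.

    Returns the refined candidate, its final energy, and the number of
    gradient steps taken before the energy fell below `tol`.
    """
    rng = random.Random(seed)
    candidate = [rng.gauss(0.0, 1.0) for _ in target]
    energy = toy_energy(candidate, target)
    steps = 0
    while energy > tol and steps < max_steps:
        grad = toy_energy_grad(candidate, target)
        candidate = [c - step_size * g for c, g in zip(candidate, grad)]
        energy = toy_energy(candidate, target)
        steps += 1
    return candidate, energy, steps

# An "easy" target near the random start and a "hard" one far from it.
_, easy_energy, easy_steps = refine([0.1, 0.0, 0.0])
_, hard_energy, hard_steps = refine([10.0, -10.0, 10.0])
print("easy steps:", easy_steps, "hard steps:", hard_steps)
```

Because the loop stops on an energy threshold rather than after a fixed number of steps, the candidate that starts further from a low-energy region receives more updates. That is the sense in which this kind of procedure spends more compute on harder predictions.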

The researchers implemented EBT using a decoder-only transformer architecture similar to GPT but replaced the softmax prediction head with a scalar energy head. This allows the model to perform multiple forward passes per token during inference, improving perplexity with more passes. While EBT currently lags behind traditional transformers in some benchmarks, it shows promising scaling behavior and better generalization to out-of-distribution tasks. The model’s ability to “think longer” on challenging tokens leads to improved performance, suggesting that EBT could become more efficient and effective at larger scales.
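
To make the difference between the two output heads concrete, here is a minimal sketch in plain Python. The function names, dimensions, and random weights are all hypothetical, and the squared linear form of the energy is an assumption made for brevity; the point is only the shape of the output: a softmax head returns one probability per vocabulary entry, while an energy head returns a single scalar for one (context, candidate) pair.

```python
import math
import random

def softmax_head(hidden, vocab_weights):
    """Standard head: one pass yields a probability for every vocabulary entry."""
    logits = [sum(h * w for h, w in zip(hidden, row)) for row in vocab_weights]
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def energy_head(hidden, candidate, energy_weights):
    """Toy energy head: one scalar score for a (context, candidate) pair."""
    joint = hidden + candidate  # list concatenation of the two vectors
    score = sum(x * w for x, w in zip(joint, energy_weights))
    return score * score  # squared so the toy energy is non-negative

rng = random.Random(0)
DIM, VOCAB = 4, 6  # hypothetical sizes, far smaller than any real model
hidden = [rng.uniform(-1.0, 1.0) for _ in range(DIM)]
candidate = [rng.uniform(-1.0, 1.0) for _ in range(DIM)]
vocab_weights = [[rng.uniform(-1.0, 1.0) for _ in range(DIM)] for _ in range(VOCAB)]
energy_weights = [rng.uniform(-1.0, 1.0) for _ in range(2 * DIM)]

probs = softmax_head(hidden, vocab_weights)              # VOCAB numbers
energy = energy_head(hidden, candidate, energy_weights)  # one number
print(len(probs), energy)
```

Since the energy head scores only one candidate per call, refining a prediction means calling the model again after each update, which is where the multiple forward passes per token come from.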

EBT also demonstrates impressive results in image denoising tasks, outperforming diffusion transformers with far fewer inference steps, which highlights the versatility of the energy-based objective across modalities. However, the iterative nature of EBT introduces computational overhead, making generation slower and more memory-intensive than with standard transformers. Training EBT is also challenging because gradient descent only works well on a smooth energy surface, which is hardest to achieve in high-entropy regions where multiple tokens compete equally.
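
The difficulty with competing candidates can be illustrated with a one-dimensional toy. The double-well energy below is a hypothetical example chosen for this sketch, not an energy function from the research: it has two equally good minima, at -1 and +1, with a flat point between them at 0.

```python
def double_well_energy(x):
    """Toy energy with two equally low minima at x = -1 and x = +1."""
    return (x * x - 1.0) ** 2

def double_well_grad(x):
    """Derivative of double_well_energy."""
    return 4.0 * x * (x * x - 1.0)

def descend(x, step_size=0.05, steps=200):
    """Plain gradient descent on the toy energy from starting point x."""
    for _ in range(steps):
        x = x - step_size * double_well_grad(x)
    return x

for start in (0.3, -0.3, 0.0):
    end = descend(start)
    print(start, "->", round(end, 4), "energy", round(double_well_energy(end), 4))
```

Starting points on either side of zero settle into different minima, and a start exactly at zero never moves because the gradient there is zero. In this toy, the outcome depends entirely on where the descent starts, which gives a rough picture of why regions with several equally plausible tokens are hard to optimize over.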

In conclusion, Energy-Based Transformers represent a fascinating and promising new direction for AI research, offering a more elegant and potentially scalable way to integrate reasoning and generation. While there are still hurdles to overcome, particularly in training stability and efficiency, the paradigm’s ability to dynamically allocate compute and improve out-of-distribution generalization makes it an exciting area to watch. The video encourages viewers to follow ongoing research developments and subscribe to the creator’s newsletter for the latest updates in AI advancements.