The video presentation discusses the evolution of large language model (LLM) applications, focusing on in-context learning for summarization tasks. The speaker highlights the importance of practical use cases and advocates adopting cost-effective models such as Haiku to improve summarization quality and adaptability.

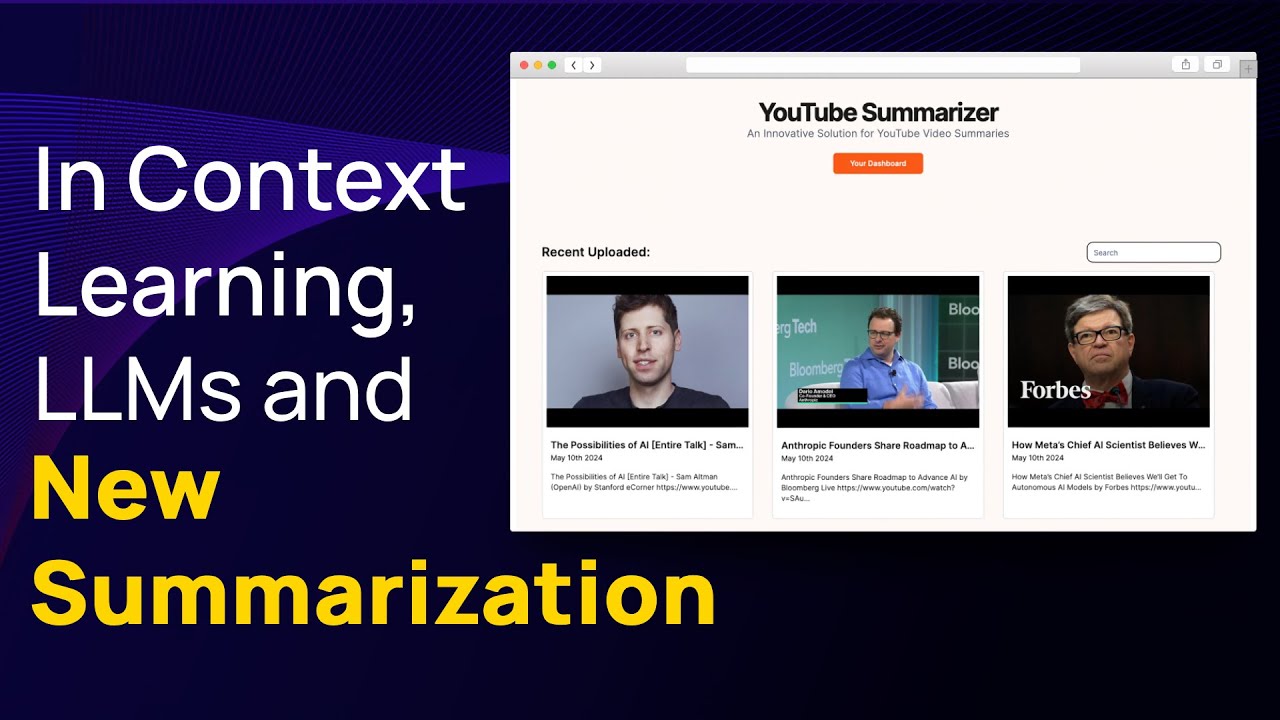

The video presentation discusses in-context learning as applied to new large language model (LLM) applications, particularly summarization. The speaker traces the evolution of LLMs from GPT-2 (released in 2019) to recent models such as OpenAI's GPT-4, Google's Gemini, and Meta's Llama 3. Two key themes in LLM apps are personalization, which tailors the experience to each user, and curation, which filters out information overload. The speaker demonstrates an app they built that creates high-quality notes from YouTube videos, emphasizing the value of personalized and curated content.

The speaker then examines the limitations of traditional summarization methods and introduces a new approach built on Haiku (Anthropic's Claude 3 Haiku), a cost-effective and performant model. The method splits the document into sections and generates a summary for each one, so the combined summary can be longer than a single call's output token limit would allow. Because this requires multiple LLM calls per document, production use needs careful attention to API rate limits and quotas.
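The section-by-section approach can be sketched in Python. This is a minimal illustration under stated assumptions, not the speaker's implementation: sections are fixed-size word windows (a rough proxy for tokens), and `summarize` is a hypothetical callable standing in for one request to a model such as Haiku. The offline `fake_summarize` stub exists only so the sketch runs without an API key.

```python
from typing import Callable, List


def split_into_sections(text: str, max_words: int = 800) -> List[str]:
    """Split text into consecutive sections of at most max_words words.

    Word count is a rough stand-in for tokens (an assumption); a real
    pipeline might use the model's tokenizer or natural section breaks.
    """
    if max_words <= 0:
        raise ValueError("max_words must be positive")
    words = text.split()
    return [
        " ".join(words[i : i + max_words])
        for i in range(0, len(words), max_words)
    ]


def summarize_long_document(
    text: str,
    summarize: Callable[[str], str],
    max_words: int = 800,
) -> str:
    """Summarize each section with a separate call and join the results.

    `summarize` is a hypothetical callable wrapping one LLM request.
    Because every section gets its own call, the combined summary is not
    bounded by a single call's output token limit.
    """
    sections = split_into_sections(text, max_words)
    parts = []
    for i, section in enumerate(sections, start=1):
        parts.append(f"Section {i}:\n{summarize(section)}")
    return "\n\n".join(parts)


if __name__ == "__main__":
    # Offline stand-in for a real model call: keeps the first five words.
    def fake_summarize(section: str) -> str:
        return " ".join(section.split()[:5]) + " ..."

    transcript = " ".join(f"word{n}" for n in range(2000))
    print(summarize_long_document(transcript, fake_summarize, max_words=800))
```

Each section costs one model call, so a 2,000-word transcript split at 800 words makes three calls; that per-document call count is what has to fit within a provider's rate limits and quotas. Splitting at headings or transcript timestamps would likely produce more coherent sections than fixed word windows.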

The speaker also advocates choosing an LLM based on practical use cases rather than academic benchmarks. For everyday applications, they suggest smaller proprietary models such as Haiku, which are fast and affordable. They argue that the context supplied to a model matters more than which model ranks highest, and that supplying more input tokens improves summarization quality.

The discussion also touches on the emergence of model families such as Gemini, with tiers like Gemini Ultra 1.0 and Gemini Pro 1.0 that offer options for different use cases. The speaker highlights in-context learning with smaller models: because they are cheap and fast, a prompt can include many few-shot examples that guide the model's responses. This makes LLMs more adaptable, particularly for personalized summarization, without any retraining. The speaker closes by encouraging viewers to follow these shifts in the LLM space and make informed decisions when selecting models for different applications.
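The many-shot idea can be illustrated by assembling a prompt from prior examples. In this sketch, which is an assumption rather than the speaker's code, each `Example` is a past text paired with a summary the user approved, and the plain-text layout (`Text:` / `Summary:` blocks) is hypothetical, not a format any provider requires. The resulting string would be sent to a small model in a single call.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Example:
    source: str   # text the user previously wanted summarized
    summary: str  # summary the user approved (their preferred style)


def build_few_shot_prompt(
    examples: List[Example], new_source: str, instruction: str
) -> str:
    """Assemble a many-shot prompt: instruction, worked examples, new input.

    The model's weights are not changed; it infers the desired style
    from the examples placed in its context window (in-context learning).
    """
    blocks = [instruction.strip()]
    for n, ex in enumerate(examples, start=1):
        blocks.append(
            f"Example {n}\nText:\n{ex.source.strip()}\n"
            f"Summary:\n{ex.summary.strip()}"
        )
    # The final block ends with an empty "Summary:" slot for the model to fill.
    blocks.append(f"Text:\n{new_source.strip()}\nSummary:")
    return "\n\n".join(blocks)


if __name__ == "__main__":
    history = [
        Example("A long talk about caching strategies.", "- Cache hot keys\n- Set TTLs"),
        Example("A long talk about database indexing.", "- Index foreign keys\n- Check query plans"),
    ]
    prompt = build_few_shot_prompt(
        history,
        "Transcript of a new video about message queues.",
        "Summarize the text as short bullet points, matching the examples.",
    )
    print(prompt)
```

Personalization here comes entirely from which examples are included: swapping in a different user's approved summaries changes the style the model is steered toward, with no fine-tuning.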

In conclusion, the presentation sheds light on the evolving landscape of LLMs and summarization techniques, emphasizing the practicality of newer, cost-effective models for creating personalized and curated content. By using models like Haiku and embracing in-context learning, developers can improve summarization quality and adaptability in their applications. The speaker's insights underscore the importance of context, practicality, and multi-call strategies when applying LLMs to diverse use cases, signaling a shift toward more efficient and tailored approaches in natural language processing.