The video introduces Nvidia’s RTX Pro 5000 Blackwell GPU, a high-memory, comparatively affordable option for local AI enthusiasts and professionals that trades some of the RTX 5090’s raw compute for more VRAM and enterprise features. It covers the GPU’s specifications, enterprise features, and early performance data, and suggests it could be a compelling choice for AI development in 2025, depending on final pricing and availability.

The video discusses Nvidia’s recent release of the RTX Pro 5000 Blackwell GPU, which targets local AI enthusiasts and professionals. The presenter questions what “enthusiast” means in Nvidia’s context: gamers who also do AI work, or people who want high-performance AI hardware without overspending. The new GPU appears to be a more accessible and cost-effective option for AI development, especially compared to Nvidia’s higher-end RTX Pro 6000 Blackwell, which can cost around $9,500. The presenter positions the RTX Pro 5000 Blackwell as a potentially better buy for AI work in 2025 for users who prefer new hardware over used options.

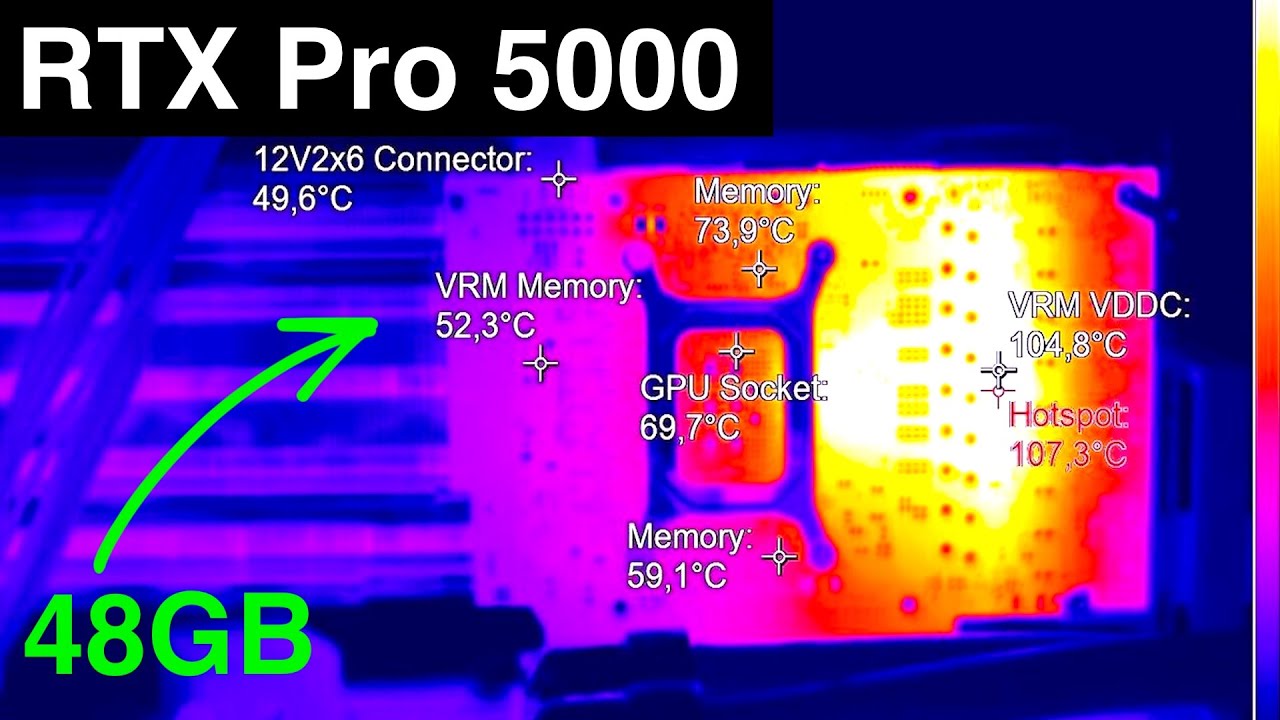

The video walks through the GPU’s specifications: the Blackwell architecture, fifth-generation Tensor Cores, fourth-generation RT (ray tracing) cores, and 48GB of GDDR7 memory with error correction (ECC) on a 384-bit bus. Nvidia emphasizes AI performance, neural rendering, LLM inference, and simulation, all of which fit AI development workflows. According to the presenter, Nvidia’s drivers also support running several of these cards together so their combined memory can be used without complex software workarounds. Apart from a few quirks, such as the 12-pin power connector, the card is designed to be efficient and enterprise-oriented.
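
To make the 384-bit bus figure more concrete, the sketch below applies the standard peak-bandwidth formula (bus width in bits divided by 8, times the per-pin data rate). The 28 Gbps GDDR7 data rate is an assumption, not a figure from the video, and the "decode ceiling" is only a common rule of thumb: single-stream LLM token generation tends to be limited by how fast the weights can be read from VRAM. The 40 GB model size is hypothetical.

```python
# Rough memory-bandwidth arithmetic for a 384-bit GDDR7 card.
# ASSUMPTION: 28 Gbps per-pin data rate (not stated in the video).
# ASSUMPTION: 40 GB of model weights is a hypothetical example size.


def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s = (bus width in bits / 8 bits per byte) * per-pin Gbps."""
    return bus_width_bits / 8 * data_rate_gbps


def decode_tokens_per_s_ceiling(bandwidth_gbs: float, weights_gb: float) -> float:
    """Rule-of-thumb upper bound on single-stream decode speed, assuming every
    generated token reads all weights from VRAM once. Ignores KV-cache reads,
    compute limits, and software overhead, so real speeds will be lower."""
    return bandwidth_gbs / weights_gb


if __name__ == "__main__":
    bw = memory_bandwidth_gbs(384, 28.0)  # 48 bytes per transfer * 28 Gbps
    print(f"peak bandwidth: {bw:.0f} GB/s")
    print(f"decode ceiling for 40 GB of weights: {decode_tokens_per_s_ceiling(bw, 40.0):.1f} tok/s")
```

Under these assumptions the peak works out to about 1.3 TB/s; a different memory clock scales the result linearly.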

Compared to the RTX 5090, the RTX Pro 5000 Blackwell offers more VRAM (48GB vs. 32GB), which matters for memory-bound AI workloads such as running larger models locally. The 5090 has more CUDA cores and Tensor Cores, but the Pro card trades some compute for memory capacity and enterprise features, which makes it a compelling choice for inference and local AI tasks. The presenter expects it to be priced around $4,000 to $4,500, which he argues compares favorably with what the 5090 has been selling for, and notes that partners such as PNY back it with warranties and support. In his view, this makes the Pro 5000 a more dependable, and potentially more cost-effective, choice than buying used 3090s or other older GPUs.
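
One way to see why 48GB vs. 32GB matters is a back-of-the-envelope fit check: model weights take roughly (parameters × bytes per parameter), plus headroom for the KV cache and runtime buffers. The sketch below assumes a flat 20% overhead, which is a hypothetical simplification (real overhead grows with context length and batch size). It also divides the presenter's $4,000 to $4,500 price estimate by 48GB to get a cost per gigabyte of VRAM.

```python
# Back-of-the-envelope VRAM fit check: 32 GB card vs. 48 GB card.
# ASSUMPTION: weights dominate memory use.
# ASSUMPTION: a flat 20% overhead for KV cache, activations, and buffers
# (hypothetical; real overhead depends on context length and batch size).

BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}
OVERHEAD = 1.20  # hypothetical safety factor


def estimated_vram_gb(params_billions: float, precision: str) -> float:
    """Approximate VRAM in GB: 1e9 params * bytes/param = GB, times overhead."""
    return params_billions * BYTES_PER_PARAM[precision] * OVERHEAD


def fits(params_billions: float, precision: str, vram_gb: float) -> bool:
    """True if the estimated footprint fits within the card's VRAM."""
    return estimated_vram_gb(params_billions, precision) <= vram_gb


if __name__ == "__main__":
    for params, prec in [(13, "fp16"), (32, "int8"), (70, "int4")]:
        need = estimated_vram_gb(params, prec)
        print(f"{params}B @ {prec}: ~{need:.1f} GB | "
              f"fits 32 GB: {fits(params, prec, 32)} | fits 48 GB: {fits(params, prec, 48)}")

    # Cost per GB of VRAM at the presenter's estimated price range.
    for price in (4000, 4500):
        print(f"${price}: about ${price / 48:.0f} per GB of VRAM")
```

With these assumptions, a 70B model quantized to 4 bits (about 42 GB) fits on a 48GB card but not on a 32GB one, which is the kind of workload where the extra memory pays off.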

Benchmark data from TechPowerUp, though limited by Nvidia’s embargo, suggests that the RTX Pro 5000 Blackwell performs about 12% better than the RTX 4090 and clearly outperforms other cards such as the RTX 5080 and the older RTX 3090. It doesn’t match the raw AI compute of the 5090, but it offers a good performance-to-price ratio for local AI development. Its specifications, including shader count and overall AI throughput, point to a card aimed at users who need large memory capacity and reliable enterprise features, particularly for inference rather than gaming or streaming.
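
The performance-to-price claim can be framed as a simple break-even calculation. The sketch assumes the ~12% lead over the RTX 4090 reduces to a single relative-performance factor of 1.12, and uses the presenter’s $4,000 to $4,500 price estimate. It computes the price below which a 4090 would win on raw performance per dollar. No 4090 price is assumed; the function solves for it.

```python
# Perf-per-dollar break-even against the RTX 4090.
# ASSUMPTION: the ~12% lead is treated as one relative-performance factor (1.12),
# with the RTX 4090 normalized to 1.0.
# ASSUMPTION: Pro 5000 prices are the presenter's $4,000-$4,500 estimate.

RELATIVE_PERF_VS_4090 = 1.12


def perf_per_dollar(relative_perf: float, price_usd: float) -> float:
    """Relative performance units per US dollar."""
    return relative_perf / price_usd


def break_even_4090_price(pro_price_usd: float, rel: float = RELATIVE_PERF_VS_4090) -> float:
    """4090 price at which both cards tie on perf per dollar:
    1.0 / p = rel / pro_price  =>  p = pro_price / rel."""
    return pro_price_usd / rel


if __name__ == "__main__":
    for price in (4000, 4500):
        p = break_even_4090_price(price)
        print(f"Pro 5000 at ${price}: a 4090 priced below ${p:,.0f} wins on raw perf per dollar")
```

This metric deliberately ignores memory capacity. The RTX 4090 has 24GB against the Pro 5000’s 48GB, so for models that need the extra VRAM, raw perf per dollar understates the Pro card’s value.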

In conclusion, the presenter sees the RTX Pro 5000 Blackwell as an intriguing development for AI enthusiasts and professionals, especially those seeking a single, powerful GPU for local AI workflows. He emphasizes that its value depends on the final pricing and whether its features justify the cost compared to alternatives like multiple 5090s. The video ends with an invitation for viewers to share their opinions on whether this GPU is worth buying, and a note of anticipation for more benchmark data and pricing details once the GPU becomes more widely available.