In the video, Dr. Károly Zsolnai-Fehér discusses a new AI method that generates high-quality 3D geometry from point clouds, significantly reducing the need for manual adjustments and making 3D modeling more accessible. While the technique still has some limitations, such as requiring point clouds as input, it offers improved detail and efficiency, promising exciting advancements for applications in gaming and animation.

The video covers new AI research on generating 3D geometry from a sparse input: a point cloud. Traditionally, creating 3D models for computer games and animated films requires extensive manual work by skilled artists using software like Blender. Recent AI systems can generate 3D models from text prompts, but the initial results often require significant refinement and editing by artists.

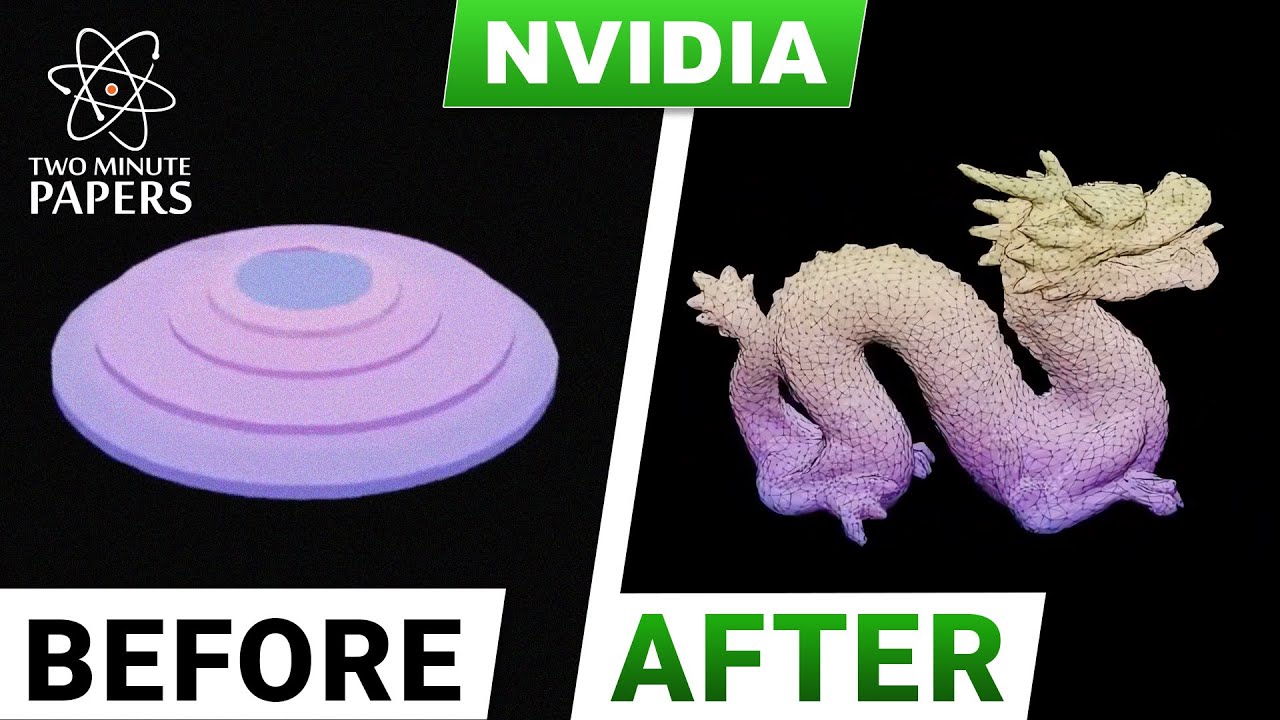

The video highlights the limitations of previous AI methods, which produced poorly constructed meshes that often needed extensive modification. Dr. Zsolnai-Fehér expresses excitement about a new paper that promises improved tessellation, resulting in cleaner and more easily editable 3D models. This new approach significantly reduces the need for manual adjustments, making 3D modeling more accessible to those without advanced artistic skills.

While the new method still relies on point clouds as input rather than direct text prompts, it represents a significant leap forward in quality. The AI can generate high-quality geometry from point clouds, which are easier to create using AI than detailed meshes. This approach allows for the generation of 3D models that are not only visually appealing but also structurally sound, addressing many of the issues faced by previous techniques.
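To make the distinction between the two representations concrete, here is a minimal sketch in plain Python. It is not the paper's method: it only illustrates that a mesh stores vertices plus connectivity, while a point cloud is a bare list of surface samples with no connectivity. The tetrahedron data and the names `VERTICES`, `FACES`, and `sample_point_cloud` are hypothetical, chosen for illustration. Going from mesh to point cloud, as done here, is the easy direction; the method discussed in the video tackles the much harder reverse.

```python
import math
import random

# A mesh: vertex positions plus triangles that index into the vertex list.
# (Hypothetical example data: a tetrahedron with corners on the axes.)
VERTICES = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
FACES = [(0, 2, 1), (0, 1, 3), (0, 3, 2), (1, 2, 3)]


def triangle_area(a, b, c):
    """Area of triangle abc, computed as half the cross-product magnitude."""
    ux, uy, uz = (b[i] - a[i] for i in range(3))
    vx, vy, vz = (c[i] - a[i] for i in range(3))
    cx, cy, cz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    return 0.5 * math.sqrt(cx * cx + cy * cy + cz * cz)


def sample_point_cloud(vertices, faces, n, seed=0):
    """Draw n points uniformly from the mesh surface.

    The result is a point cloud: positions only, no faces or edges.
    """
    rng = random.Random(seed)
    triangles = [tuple(vertices[i] for i in face) for face in faces]
    areas = [triangle_area(*tri) for tri in triangles]
    cloud = []
    # Pick triangles in proportion to their area, then pick a uniform
    # point inside each one using square-root barycentric sampling.
    for a, b, c in rng.choices(triangles, weights=areas, k=n):
        r1, r2 = rng.random(), rng.random()
        s = math.sqrt(r1)
        wa, wb, wc = 1.0 - s, s * (1.0 - r2), s * r2
        cloud.append(tuple(wa * a[i] + wb * b[i] + wc * c[i] for i in range(3)))
    return cloud


cloud = sample_point_cloud(VERTICES, FACES, 1000)
```

The point cloud carries no information about which points belong to the same face, which is why reconstructing a clean, well-tessellated mesh from it is the difficult step.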

The video also emphasizes the flexibility of the new AI method, which allows users to choose between different levels of detail in the generated models. This adaptability is crucial for various applications, such as real-time gaming versus animated films, where rendering requirements differ. The new technique can produce meshes that are up to 40 times more detailed than earlier methods while being more memory-efficient and faster.

Despite its advancements, the new technique is not without flaws. It still requires a point cloud as input, and the generated models can have missing parts or holes. However, Dr. Zsolnai-Fehér remains optimistic about the future of this technology and encourages viewers to explore the research further. He concludes by inviting viewers to meet him at the upcoming GTC conference, where he will be present to discuss these developments in AI and 3D modeling.